A/B testing in Google Analytics 4 is a practical way to optimize your website. This guide covers experimentation from setup and analysis to advanced techniques and integration with other Google products, and shows how to use data-driven insights to improve user experience and drive conversions.

The goal is to help you use A/B testing in Google Analytics 4 to make data-informed decisions about your website, starting with the fundamentals and moving on to more advanced strategies.

Introduction to A/B Testing in Google Analytics 4

A/B testing is a technique for comparing two or more versions of a webpage or app element to determine which performs better. Different groups of users see different variations, and the impact on key metrics is measured. This lets businesses optimize their online presence and increase user engagement. A/B testing in Google Analytics 4 (GA4) helps marketers understand how different designs, content, and features affect user behavior.

By analyzing data collected through GA4, businesses can identify the most effective approach to improving conversions, engagement, and overall user experience. This data-driven approach is crucial for making informed decisions about website and app optimization.

Purpose of A/B Testing in GA4

A/B testing in GA4 aims to identify the best performing version of a specific element on a website or app. This is accomplished by systematically comparing variations to determine which yields the desired outcomes. Ultimately, this leads to improved user engagement, increased conversions, and higher overall profitability.

Benefits of A/B Testing with GA4

Implementing A/B testing with GA4 provides numerous advantages. These benefits include:

- Improved User Experience: By testing different variations of website elements, businesses can identify which versions best meet the needs of their users. This iterative approach leads to a more intuitive and user-friendly experience.

- Increased Conversions: Identifying the most effective versions of website elements through A/B testing often leads to higher conversion rates. This translates into more leads, sales, and ultimately, increased revenue.

- Enhanced Engagement: A/B testing can uncover which variations of website elements foster greater user engagement, measured by metrics like time spent on site, page views, and click-through rates.

- Data-Driven Decisions: Measuring experiment variants in GA4 lets you base website and app optimization on observed results rather than assumptions and intuition.

Key Metrics and Dimensions in GA4 A/B Testing

A/B testing within GA4 relies on specific metrics and dimensions to evaluate the effectiveness of different variations. These include:

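- Conversion Rate: The percentage of users (or sessions) that complete a desired action, such as making a purchase or filling out a form. GA4 now calls these tracked actions key events.

- Bounce Rate: In GA4, the percentage of sessions that were not engaged sessions. An engaged session lasts longer than 10 seconds (the default threshold), includes a key event, or has at least two page or screen views. This differs from Universal Analytics, where bounce rate was the share of single-page sessions.

- Engagement Rate: The percentage of sessions that were engaged sessions; it is the inverse of bounce rate. Related measures such as average engagement time and views per session add detail on how users interact with a website or app.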

- User Demographics: Information about users, such as age, location, and interests, to understand user behavior patterns and tailor experiences accordingly.

- Traffic Source: Where users are coming from, such as organic search, social media, or direct traffic, to optimize marketing efforts.
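To make the GA4 definitions concrete, here is a minimal Python sketch that classifies sessions as engaged and derives engagement rate, bounce rate, and session conversion rate from them. It assumes you already have per-session duration, page view count, and key event count (for example from a BigQuery export); the `Session` class and its field names are hypothetical, and the 10-second threshold is GA4's default, which can be changed in the property settings.

```python
from dataclasses import dataclass

# GA4's default engaged-session threshold in seconds (adjustable per property).
ENGAGED_SECONDS = 10

@dataclass
class Session:              # hypothetical per-session record
    duration_seconds: float
    page_views: int         # page_view or screen_view events
    key_events: int         # conversions, called "key events" in GA4

def is_engaged(s: Session, threshold: float = ENGAGED_SECONDS) -> bool:
    """GA4 rule: longer than the threshold, at least one key event, or 2+ views."""
    return s.duration_seconds > threshold or s.key_events >= 1 or s.page_views >= 2

def summarize(sessions: list[Session]) -> dict[str, float]:
    n = len(sessions)
    engagement_rate = sum(is_engaged(s) for s in sessions) / n
    return {
        "engagement_rate": engagement_rate,
        "bounce_rate": 1 - engagement_rate,  # GA4: share of sessions not engaged
        "session_conversion_rate": sum(s.key_events >= 1 for s in sessions) / n,
    }

sessions = [Session(5, 1, 0), Session(30, 1, 0), Session(4, 3, 0), Session(8, 1, 1)]
print(summarize(sessions))
```

Only the first session is not engaged (short, one page view, no key event), so three of the four sessions count as engaged.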

Comparison of A/B Testing in Universal Analytics and GA4

The following table highlights key differences between A/B testing in Universal Analytics and GA4:

| Feature | Universal Analytics | Google Analytics 4 | Key Differences |
|---|---|---|---|
| Experiment Tracking | Built-in Content Experiments, later superseded by Google Optimize. | No built-in experiment builder; experiments run in a separate testing tool (such as Google Optimize, until its September 2023 shutdown) that sends variant data to GA4. | In GA4, the testing tool handles variant assignment and GA4 handles measurement and analysis. |
| Data Collection | Relies mainly on cookies for user identification. | Uses cookies plus device-based identifiers and, optionally, User-ID. | GA4's approach is better suited to cross-device and app measurement. |
| Data Analysis | Relies mainly on standard reports. | Adds Explorations for ad hoc analysis with custom dimensions and metrics. | GA4 supports more flexible, in-depth analysis. |
| Integration with other platforms | Less seamless integration. | Closer integration, including free BigQuery export. | GA4's integrations make it easier to act on data across platforms. |

Setting Up A/B Tests in Google Analytics 4

A/B testing in Google Analytics 4 (GA4) is a powerful tool for optimizing your website or app. By systematically comparing different versions of a page or feature, you can identify which performs better and improve user engagement and conversions. Understanding the nuances of setting up these tests is crucial for achieving meaningful results. Setting up an A/B test involves more than picking two versions and comparing them.

Careful planning and execution are essential to ensure the test yields reliable and actionable insights. This involves clearly defining your goals, understanding the different types of tests, and meticulously following the setup steps. By understanding the intricacies of control and variation groups, you can accurately measure the effectiveness of your changes.

Defining Clear Hypotheses and Objectives

A well-defined hypothesis and clear objectives are the foundation of a successful A/B test. Without them, it's difficult to interpret the results and determine whether the changes had a positive impact. A hypothesis states a prediction about the effect of a change, for example: "Changing the checkout button text from 'Continue' to 'Complete purchase' will increase the checkout completion rate." Objectives should be specific, measurable, achievable, relevant, and time-bound (SMART), and they should align with overall business goals.

Types of A/B Tests in GA4

Many kinds of A/B tests can be measured in GA4. Common ones include:

- Content variations: Testing different headlines, descriptions, calls to action, or images on a webpage.

- User experience (UX) tests: A/B testing the layout, navigation, or flow of a website or app to improve user experience and reduce friction.

- Conversion rate optimization (CRO) tests: A/B testing different versions of landing pages, forms, or other elements to increase conversions.

Each type of test focuses on a specific aspect of user engagement and conversion.

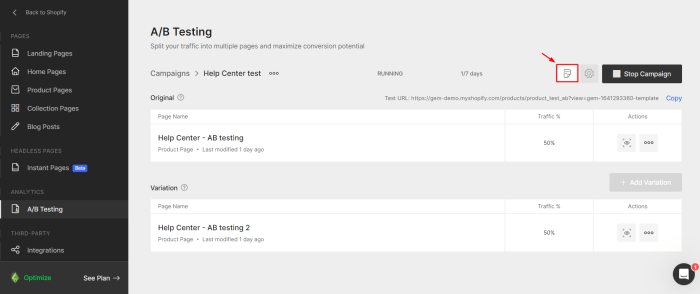

Creating an Experiment in GA4

This section outlines the steps involved in creating an experiment and measuring it in GA4.

- Define your experiment: Specify the variations you want to test, the metric you will use to judge them, and how long the test will run. Start from the user action you want to influence, such as clicking a call-to-action button, and decide what you will change to influence it, such as a new headline or a different image. A precisely defined experiment makes accurate data collection much easier.

- Create the experiment in your testing tool: GA4 does not include an experiment builder, so define the control group and variation(s) in the A/B testing tool you use and configure it to pass the assigned variant to GA4.

- Implement the experiment: Modify the website or app so each variation is served as designed, make sure every user is consistently assigned to the same group, and confirm that the assigned variant is recorded in GA4 alongside the events you measure. Proper implementation ensures the test runs smoothly and the data is accurate.
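Two implementation details matter most: each user must see the same variant on every visit, and the variant must reach GA4. The sketch below, assuming server-side Python, buckets users deterministically by hashing a user ID together with an experiment ID, then builds a GA4 Measurement Protocol request body that records the exposure. The event name `experiment_exposure` and its parameter names are hypothetical; GA4 only shows custom parameters in reports after you register them as custom dimensions. `client_id` should be the GA4 client ID of the browser that saw the variant. In the browser, the same event would more commonly be sent through gtag.js or Google Tag Manager.

```python
import hashlib
import json

def assign_variant(user_id: str, experiment_id: str, variants: list[str]) -> str:
    """Deterministic bucketing: the same user always gets the same variant."""
    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)  # roughly uniform split
    return variants[bucket]

def exposure_payload(client_id: str, experiment_id: str, variant: str) -> str:
    """JSON body for a GA4 Measurement Protocol request (names are hypothetical)."""
    body = {
        "client_id": client_id,
        "events": [{
            "name": "experiment_exposure",  # hypothetical custom event name
            "params": {"experiment_id": experiment_id, "variant": variant},
        }],
    }
    return json.dumps(body)

# Sending is not done here: POST the body to
# https://www.google-analytics.com/mp/collect?measurement_id=<MEASUREMENT_ID>&api_secret=<API_SECRET>
variant = assign_variant("user-123", "checkout_button_test", ["control", "variant_b"])
print(variant, exposure_payload("1234567890.1234567890", "checkout_button_test", variant))
```

Hashing the experiment ID with the user ID keeps assignments stable within a test while reshuffling users independently across different tests.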

Setting Up Control and Variation Groups

The following table outlines the steps for creating a control group and a variation group.

| Step | Description | Where This Happens | Considerations |
|---|---|---|---|
| 1 | Define the control group and the variation(s). The control group represents the original version of the element being tested; the variation(s) are the alternative versions tested against it. Be explicit about what constitutes each version. | The experiment setup in your A/B testing tool, with clearly labeled control and variation groups. | Ensure the variations are distinct and measurable. |
| 2 | Specify the metrics you want to track. Choose the key metrics that will be measured during the experiment, such as clicks, conversions, or bounce rate. | The testing tool's goal settings, matched to the corresponding events or key events in GA4. | Choose metrics that align with the objectives of the test. |
| 3 | Set the experiment duration, which determines how long the test runs. Consider typical user behavior on the website, including weekly patterns. | The testing tool's scheduling and traffic-allocation settings. | Allow enough time to collect the sample size needed for statistically significant results. |
| 4 | Start the experiment and monitor the results. Analyze the data to determine which variation performs better. | The testing tool's results view, and GA4 reports or explorations broken down by variant. | Monitor early data for tracking problems, but avoid changing the experiment or declaring a winner before it reaches its planned sample size. |
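For step 3, a rough duration estimate follows from the sample size each variant needs and the number of eligible users per day. This sketch assumes traffic is split evenly and that the per-variant sample size is already known from a power analysis; rounding up to whole weeks is a common convention so that weekday and weekend behavior are both covered, not a GA4 requirement. The function name is hypothetical.

```python
import math

def estimate_duration_days(n_per_variant: int, num_variants: int,
                           daily_eligible_users: int, round_to_weeks: bool = True) -> int:
    """Estimate how many days a test needs, assuming an even traffic split.

    num_variants counts the control as well (a plain A/B test has 2).
    """
    total_needed = n_per_variant * num_variants
    days = math.ceil(total_needed / daily_eligible_users)
    if round_to_weeks:
        # Whole weeks capture both weekday and weekend behavior.
        days = math.ceil(days / 7) * 7
    return days

print(estimate_duration_days(8000, 2, 1500))                        # 14
print(estimate_duration_days(8000, 2, 1500, round_to_weeks=False))  # 11
```

Adding a third variation to the same example raises the total sample needed by half, which is why each extra variation lengthens the test.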

Analyzing A/B Test Results in Google Analytics 4

Decoding the insights from your A/B tests in Google Analytics 4 is crucial for understanding which changes resonate with your users. This process involves more than just looking at numbers; it demands a critical eye and a structured approach to identify the true impact of your modifications. Understanding statistical significance is key to separating real improvements from random fluctuations.

Interpreting A/B Test Results

Interpreting A/B test results in Google Analytics 4 involves carefully evaluating the performance of variations against the original version. The goal is to identify statistically significant differences that indicate a genuine impact on user behavior. A thorough analysis requires a deep dive into the specific metrics and dimensions that align with your test objectives.

Key Metrics and Dimensions

To effectively evaluate your A/B test outcomes, focus on metrics like conversion rate, bounce rate, session duration, and engagement rate. Also, consider dimensions such as user demographics, device type, and location. By combining these metrics and dimensions, you can gain a more comprehensive understanding of the impact of your changes on different user segments.

Statistical Significance

Statistical significance is a crucial element in A/B testing. It helps you judge whether an observed difference between variations reflects a genuine effect of your changes rather than random chance. The usual measure is the p-value: the probability of observing a difference at least as large as the one you saw if the variations actually performed the same. A threshold of 0.05 is a common convention, and a lower p-value means the result is harder to explain by chance alone.

A p-value of 0.05 does not mean there is a 5% chance that the result is due to random variation. It means that, if there were no real difference between the variations, a difference at least this large would appear about 5% of the time.
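As a minimal sketch of where such a p-value comes from, the two-proportion z-test below compares conversion counts from a control and a variant. It uses only the Python standard library and a normal approximation, which is reasonable for typical website sample sizes; dedicated A/B testing tools may use different methods, such as Bayesian or sequential statistics.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). Uses the pooled rate under the null hypothesis
    that both variations convert equally.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With 200 conversions from 5,000 control users and 250 from 5,000 variant users (4.0% vs. 5.0%), this gives roughly z ≈ 2.4 and p ≈ 0.016, below the conventional 0.05 threshold.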

Identifying Statistically Significant Results

GA4 does not calculate p-values for experiment variants, so identifying statistically significant results usually means taking the per-variant user and conversion counts from GA4 reports (or its BigQuery export) and running them through a statistical calculator or the statistics engine of a dedicated A/B testing platform. Remember that a statistically significant result is not always a practically significant one: consider the magnitude of the difference alongside its statistical significance.
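To weigh practical significance, it helps to look at a confidence interval for the lift rather than the p-value alone. This sketch computes a normal-approximation (Wald) interval for the difference in conversion rates and compares it with a minimum lift you consider worthwhile. That threshold is a business decision, and the labels returned by the hypothetical `classify_result` helper are illustrative.

```python
import math
from statistics import NormalDist

def lift_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald interval for the absolute lift p_b - p_a (unpooled standard error)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    lift = p_b - p_a
    return lift - z * se, lift + z * se

def classify_result(ci: tuple[float, float], min_worthwhile_lift: float) -> str:
    """Combine statistical and practical significance (lifts are absolute)."""
    low, high = ci
    if low >= min_worthwhile_lift:
        return "significant and large enough to matter"
    if low > 0:
        return "significant, but may be smaller than worthwhile"
    if high < 0:
        return "variant performs worse"
    return "inconclusive"

ci = lift_confidence_interval(200, 5000, 250, 5000)
print(ci)
print(classify_result(ci, min_worthwhile_lift=0.005))
```

For 4.0% vs. 5.0% conversion on 5,000 users each, the 95% interval runs from about +0.2 to +1.8 percentage points: clearly above zero, but if only lifts of 0.5 points or more are worth shipping, the data cannot yet rule out a smaller effect.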

Organizing Key Insights

The following table presents a structured format for organizing key insights from an A/B test, combining metrics, their implications, and recommendations:

| Metric | Description | Impact on User Experience | Recommendations |
|---|---|---|---|
| Conversion Rate | Percentage of users or sessions that complete a desired action (e.g., making a purchase). | A higher conversion rate indicates the change helps users complete their goals. | If it increases significantly, adopt the winning variation. If there is no significant change, keep the original or test further. |
| Bounce Rate | Percentage of sessions that were not engaged sessions (GA4 definition). | A lower bounce rate suggests users find the new design more engaging. | If it decreases significantly, adopt the winning variation. If there is no significant change, keep the original or test further. |
| Session Duration | Average time users spend on the site per session. | Longer sessions can indicate that users find the site more engaging. | If it increases significantly, adopt the winning variation. If there is no significant change, keep the original or test further. |
| Engagement Rate | Percentage of sessions that were engaged sessions. | A higher engagement rate suggests increased user interest and satisfaction. | If it increases significantly, adopt the winning variation. If there is no significant change, keep the original or test further. |
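The recommendations in the table above can be written as a small decision rule. The one subtlety is direction: bounce rate improves when it goes down, while the other metrics improve when they go up. This sketch assumes the significance test has already been run; the metric keys are hypothetical names, and it also handles a significant change in the wrong direction, which the table does not spell out.

```python
from enum import Enum

class Direction(Enum):
    HIGHER_IS_BETTER = 1
    LOWER_IS_BETTER = -1

# Which way counts as an improvement for each metric (hypothetical keys).
METRIC_DIRECTION = {
    "conversion_rate": Direction.HIGHER_IS_BETTER,
    "bounce_rate": Direction.LOWER_IS_BETTER,
    "session_duration": Direction.HIGHER_IS_BETTER,
    "engagement_rate": Direction.HIGHER_IS_BETTER,
}

def recommend(metric: str, control: float, variant: float, significant: bool) -> str:
    """Map a test result to the action suggested in the insights table."""
    if not significant:
        return "keep the original or test further"
    # Positive when the variant moved the metric in the desirable direction.
    improvement = (variant - control) * METRIC_DIRECTION[metric].value
    return "adopt the variation" if improvement > 0 else "keep the original"

print(recommend("bounce_rate", control=0.45, variant=0.40, significant=True))
print(recommend("conversion_rate", control=0.05, variant=0.04, significant=True))
```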

Best Practices for A/B Testing in Google Analytics 4

A/B testing in Google Analytics 4 (GA4) is a powerful way to optimize website performance. By systematically comparing different versions of a webpage or feature, you can identify which performs better in terms of user engagement and conversion rates. However, successful A/B testing requires a thoughtful approach: following best practices ensures that your tests are robust, reliable, and yield actionable insights. Effective A/B tests are carefully designed and executed.

Understanding the key best practices can significantly improve the quality of your results, preventing wasted resources and ensuring that your efforts lead to tangible improvements in your website or app.

Designing Effective A/B Tests

A well-designed A/B test is crucial for obtaining reliable results. This involves careful consideration of the variations being tested and the metrics used for evaluation. A good test will clearly define the objective, choose appropriate variations, and select meaningful metrics. Don’t introduce too many changes in a single test, as it can make it difficult to isolate the cause of any observed improvement or decline.

- Focus on a single variable: Modify only one element at a time to pinpoint the specific change responsible for any observed differences in user behavior. Testing multiple changes simultaneously can obscure the results, making it difficult to isolate the impact of each alteration. For instance, if you want to improve the button’s click-through rate, modify only the button’s color, not the button’s text or the surrounding layout.

- Create realistic variations: The variations should be realistic and relevant to the user experience. Avoid drastic changes that disrupt the user flow or introduce unnecessary complexity. For example, instead of completely redesigning a landing page, try changing the headline or call-to-action button. Small, focused changes are also easier to attribute and to roll back.

- Avoid too many variations: Keep the number of variations manageable. Each additional variation splits your traffic further, so every group takes longer to reach a reliable sample size and the results become harder to interpret.

Importance of Sufficient Sample Size

A crucial aspect of A/B testing is ensuring a sufficient sample size to achieve statistically significant results. Without enough data points, the results may be unreliable and misleading. The required sample size depends on the expected effect size and the desired level of confidence.

- Achieve statistical significance: A sample size that is too small can lead to inconclusive results, and a sample size that is too large can be wasteful. Tools are available to calculate the appropriate sample size based on your desired confidence level and expected effect size. This ensures that your test results are reliable and provide a clear indication of the impact of the changes.

- Consider expected effect size: The anticipated difference between the variations influences the required sample size. A large expected difference can be detected with a smaller sample, while a small one, such as the typical effect of changing a button color, needs a much larger sample.

- Use statistical power analysis: Use power analysis tools to determine the minimum sample size needed to detect a difference of a given size with a chosen probability (statistical power, commonly set at 80%). This balances the reliability of the result against the time and traffic the test consumes.
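A standard sample-size approximation for comparing two conversion rates combines the baseline rate, the smallest absolute lift you want to detect, the significance level (alpha), and the desired power. The sketch below implements that normal-approximation formula with the Python standard library; online calculators and testing platforms may use slightly different formulas and return somewhat different numbers.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate: float, min_detectable_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variant for a two-sided two-proportion test.

    min_detectable_lift is absolute: 0.01 means one percentage point.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)

print(sample_size_per_variant(0.04, 0.01))   # roughly 6,700 per variant
print(sample_size_per_variant(0.04, 0.005))  # roughly 25,500 per variant
```

For a 4% baseline, detecting a one-point lift needs roughly 6,700 users per variant, while halving the detectable lift to half a point needs roughly 25,500: small effects become expensive to detect very quickly.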

Avoiding Common Mistakes in A/B Testing

Common mistakes in A/B testing can lead to inaccurate conclusions and wasted effort. By understanding and avoiding these pitfalls, you can ensure the reliability and validity of your results.

- Ignoring confounding variables: External factors can influence the results of an A/B test, and it’s important to consider and control for these factors. For example, seasonal variations in user behavior can skew results, making it necessary to conduct tests during consistent periods.

- Stopping tests too early: Let each test run until it reaches the sample size you planned, rather than ending it as soon as the numbers look favorable. Reaching that sample size does not guarantee a significant result, but it makes the result you get trustworthy.

- Acting on early looks at the data: Checking results repeatedly and stopping the moment they cross the significance threshold inflates the false-positive rate. Decide the sample size in advance and draw conclusions only once it has been reached.
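The cost of stopping early can be shown with a small simulation. Both arms below share the same true conversion rate (an A/A test), so every "significant" result is a false positive. The sketch compares a single check at the planned sample size with checking after every batch of users and stopping at the first significant result. All parameters are arbitrary illustrations; with settings like these, the peeking strategy typically produces false positives well above the nominal 5%, while the single final check stays near it, up to simulation noise.

```python
import math
import random

def z_significant(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05) -> bool:
    """Two-sided pooled two-proportion z-test at level alpha."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return False
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2)) < alpha

def simulate_peeking(sims: int = 200, looks: int = 10, users_per_look: int = 200,
                     rate: float = 0.05, seed: int = 1) -> tuple[float, float]:
    """Return (false-positive rate checking once, rate when checking every look)."""
    rng = random.Random(seed)
    fixed_hits = peeking_hits = 0
    for _sim in range(sims):
        conv_a = conv_b = n = 0
        significant_at_any_look = False
        for _look in range(looks):
            # Both arms convert at the same true rate: there is no real effect.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            if z_significant(conv_a, n, conv_b, n):
                significant_at_any_look = True
        fixed_hits += z_significant(conv_a, n, conv_b, n)  # one check at the end
        peeking_hits += significant_at_any_look
    return fixed_hits / sims, peeking_hits / sims

fixed_rate, peeking_rate = simulate_peeking()
print(f"check once: {fixed_rate:.1%}, check every look: {peeking_rate:.1%}")
```

Because the final look is one of the peeks, the peeking rate can never be lower than the single-check rate; the simulation shows how much higher it gets.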

Factors Affecting A/B Test Validity

Several factors can influence the validity of A/B test results. Understanding these factors helps you design more robust tests and interpret results accurately.

- External factors: External factors like website traffic fluctuations or changes in user behavior outside the scope of the test can impact the results. Therefore, it’s crucial to consider these factors when designing and interpreting A/B tests. For example, if a major marketing campaign occurs during the test, it may affect user behavior and skew the results.

- Testing during consistent periods: Conducting tests during consistent periods with similar traffic patterns and user behaviors will provide more accurate results. Avoid conducting tests during periods of high traffic variability or significant external events. This ensures that the results are not skewed by external influences.

- Data quality and integrity: Data quality plays a vital role in A/B testing. Ensure that the data collected is accurate and reliable. Poor data quality can lead to incorrect conclusions. Data should be thoroughly cleaned and validated to ensure accuracy.
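One concrete data-quality check is a sample ratio mismatch (SRM) test, a technique from general experimentation practice rather than a GA4 feature: if you intended a 50/50 split but the observed group sizes differ by more than chance allows, assignment or tracking is probably broken and the results should not be trusted. This sketch runs a chi-square goodness-of-fit test with the standard library; the 0.001 alert threshold often used for SRM checks is a convention, not a rule.

```python
import math

def sample_ratio_mismatch_p(observed_a: int, observed_b: int,
                            expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for a traffic split."""
    total = observed_a + observed_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((observed_a - expected_a) ** 2 / expected_a
            + (observed_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))

p = sample_ratio_mismatch_p(10_000, 10_600)
print(f"SRM p-value: {p:.2e}", "investigate before reading results" if p < 0.001 else "ok")
```

A 10,000 vs. 10,600 split from an intended 50/50 allocation yields a p-value far below 0.001, which would justify checking assignment and tracking before interpreting any metric.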

Advanced A/B Testing Techniques in Google Analytics 4

A/B testing, while powerful for identifying small improvements, often falls short when dealing with complex user journeys and interactions. Advanced techniques, such as multivariate testing and in-depth funnel analysis, provide a more comprehensive understanding of user behavior and allow for more nuanced optimization strategies. This deeper dive into user experience can uncover previously unseen patterns and lead to more significant improvements.

Multivariate Testing in Google Analytics 4

Multivariate testing (MVT) goes beyond A/B testing by allowing experimentation with multiple variations of multiple elements simultaneously. This method explores the impact of different combinations of changes on key metrics. Instead of testing a single element, it tests different variations of multiple elements together, such as button colors, call-to-action text, and page layouts. This enables a more comprehensive understanding of how different elements interact to affect user behavior.
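A full-factorial multivariate test creates one variation for every combination of element versions, so the number of cells multiplies quickly and each cell receives a smaller share of traffic. This sketch enumerates the combinations with `itertools.product`; the elements, their versions, and the 60,000-user figure are hypothetical.

```python
from itertools import product

def full_factorial(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Every combination of every element's versions (one MVT cell each)."""
    names = list(elements)
    return [dict(zip(names, values)) for values in product(*elements.values())]

def users_per_cell(total_users: int, cells: int) -> int:
    """Users each combination receives when traffic is split evenly."""
    return total_users // cells

# Hypothetical elements under test.
elements = {
    "button_color": ["green", "orange"],
    "cta_text": ["Buy now", "Add to cart", "Get started"],
    "layout": ["single_column", "two_column"],
}
cells = full_factorial(elements)
print(len(cells))                          # 12 (2 x 3 x 2)
print(users_per_cell(60_000, len(cells)))  # 5000
```

The same 60,000 users would give 30,000 per group in a simple A/B test, which is why multivariate tests need far more traffic to reach reliable results.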

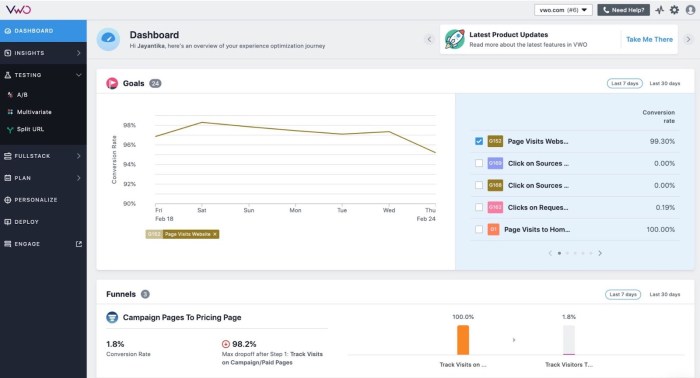

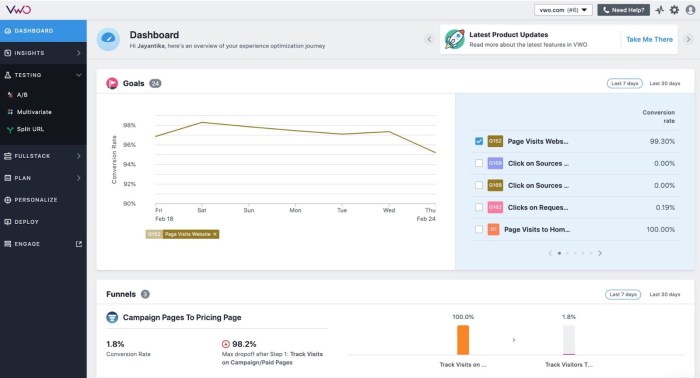

Funnel Analysis in A/B Testing

Funnel analysis provides a visual representation of user journeys through a specific process, like the checkout process in e-commerce. By analyzing this funnel, we can pinpoint drop-off points in the user experience where conversions are lost. Integrating this analysis with A/B testing allows for a targeted optimization approach to improve conversions at each stage of the funnel. For instance, if drop-off is high on the shipping page, testing different shipping options or presentation can lead to improvements.

Identifying and addressing these bottlenecks is crucial for maximizing conversions.
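Comparing step-to-step conversion for each variation shows where a change helps or hurts. In this sketch the step labels are GA4's recommended e-commerce event names, while the user counts are invented; in practice they might come from a GA4 funnel exploration broken down by variant.

```python
def step_conversion(steps: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of users at each step who reached the next one."""
    return [
        (f"{prev_name} -> {name}", count / prev_count)
        for (prev_name, prev_count), (name, count) in zip(steps, steps[1:])
    ]

# Hypothetical user counts per funnel step, one funnel per variation.
control = [("view_cart", 1000), ("begin_checkout", 600),
           ("add_shipping_info", 300), ("purchase", 240)]
variant = [("view_cart", 1000), ("begin_checkout", 610),
           ("add_shipping_info", 420), ("purchase", 330)]

for (step, c_rate), (_, v_rate) in zip(step_conversion(control), step_conversion(variant)):
    print(f"{step:40} control {c_rate:.1%}  variant {v_rate:.1%}")
```

Here the largest difference is at begin_checkout -> add_shipping_info (50.0% for the control versus about 68.9% for the variant), which is the step a shipping-page test would target.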

Advanced A/B Testing Use Cases in E-commerce

E-commerce presents unique opportunities for A/B testing. Testing different product presentation styles, including image variations, descriptions, and calls to action, can significantly affect sales. You can also test payment gateway options or order confirmation emails to see which design and content lead to higher conversion rates and customer satisfaction, or test different product filters to see which lead to more product views and purchases.

Comparison of Multivariate and A/B Testing

| Technique | Description | Application | Benefits |
|---|---|---|---|
| A/B Testing | Tests two (or more) versions of a single element against each other. | Identifying optimal variations for individual elements, such as button colors, headlines, or calls to action. | Simple to implement, reaches reliable results with less traffic, focused on specific elements. |
| Multivariate Testing | Tests multiple variations of multiple elements simultaneously. | Identifying the optimal combination of variations for multiple elements, such as page layouts, product descriptions, and call-to-action buttons. | Shows how elements interact, potentially leading to larger gains in conversion rate, but requires far more traffic because it is split across every combination. |

Integrating A/B Testing with Other Google Products

A/B testing in Google Analytics 4 is powerful, but its effectiveness multiplies when integrated with other Google products. This synergy gives you a holistic view of your marketing efforts, enabling more informed decisions and better-optimized campaigns. Connecting your testing results with other data sources reveals the full impact of your changes and gives a more granular understanding of user behavior. By integrating A/B testing with other Google products, you can analyze results in the context of your broader marketing strategy, understand the user journey more comprehensively, and achieve more impactful results.

Integrating with Google Tag Manager

Google Tag Manager (GTM) is a useful companion for A/B testing. Once the GTM container is installed on your site, it lets you deploy and manage experiment tags without editing the site's code for every change. This separation helps maintain website integrity while enabling flexible, scalable testing, and it makes it easier to track experiment variables and ensure the correct data is captured for analysis.

Linking A/B Testing Results to Other Marketing Data

Connecting A/B testing results with other marketing data sources like Google Ads or your CRM offers a richer understanding of the impact of changes. This holistic view reveals how different marketing channels interact and contribute to the overall conversion rate. For instance, you might discover that a specific A/B test variation performs exceptionally well when paired with a particular ad campaign on Google Ads.

This allows you to tailor your strategies for maximum impact across multiple channels.
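Linking test results to CRM data usually comes down to joining on a shared user identifier. This sketch assumes such an ID exists in both systems (for example the value you send to GA4 as User-ID) and computes revenue per user for each variation; the dictionaries stand in for whatever export or query you actually use, and the function name is hypothetical.

```python
from collections import defaultdict

def revenue_by_variant(assignments: dict[str, str],
                       crm_revenue: dict[str, float]) -> dict[str, dict[str, float]]:
    """Join variant assignments to CRM revenue on a shared user ID."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: {"users": 0, "revenue": 0.0})
    for user_id, variant in assignments.items():
        totals[variant]["users"] += 1
        # Users with no CRM record are counted as zero revenue.
        totals[variant]["revenue"] += crm_revenue.get(user_id, 0.0)
    return {
        variant: {**t, "revenue_per_user": t["revenue"] / t["users"]}
        for variant, t in totals.items()
    }

# Hypothetical exports: variant per user ID, and CRM revenue per user ID.
assignments = {"u1": "control", "u2": "variant_b", "u3": "variant_b", "u4": "control"}
crm_revenue = {"u1": 120.0, "u3": 80.0, "u4": 40.0}
print(revenue_by_variant(assignments, crm_revenue))
```

Counting users without a CRM record as zero keeps every assigned user in the denominator, so revenue per user is not inflated by dropping non-buyers.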

Utilizing Google Optimize for A/B Testing Campaigns

Google Optimize was Google's dedicated A/B testing platform and integrated directly with Google Analytics, providing an interface for creating variations, tracking key metrics, and analyzing results. Google discontinued Optimize and Optimize 360 on September 30, 2023. With GA4, experiments now run in third-party A/B testing tools that handle variant assignment and send experiment data to GA4, where you can analyze the impact of your changes on user behavior across the entire website.

Integrating A/B Testing Results with Google Ads

A powerful integration is achieved by linking A/B testing results with Google Ads. This enables you to tailor your ad campaigns based on the winning variations of your experiments. If a specific webpage variation increases conversions, you can adjust your ad copy or targeting criteria to direct more qualified traffic to that version. For instance, if an A/B test shows that a new headline significantly improves click-through rates, you can incorporate that headline into your Google Ads campaigns.

This integration streamlines your marketing efforts by ensuring that your ad spend aligns with the most effective website elements.

How the Data Flows from A/B Tests to Google Ads

An A/B testing tool (historically Google Optimize) assigns users to variations and sends the assigned variant to Google Analytics 4. In GA4, you can build audiences or key events around a specific variation's performance, such as its conversion or bounce rate, and share them with a linked Google Ads account. You then use those audiences and results to adjust targeting or ad copy in Google Ads.

This iterative process continually optimizes your ad campaigns and website performance.

Concluding Thoughts: A/B Testing in Google Analytics 4

In conclusion, A/B testing in Google Analytics 4 is a powerful tool for website optimization. By understanding the setup, analysis, and best practices, you can improve user experience and achieve measurable results. Experimentation is key: test one clear hypothesis at a time, let each test reach its planned sample size, and base your decisions on the data to build a more successful online presence.