Avoiding short-term duplicate content issues is crucial for maintaining a healthy website and good search engine rankings. Duplicate content, whether near-identical pages or copied material, can significantly hurt your visibility. This post explains the problem, explores prevention strategies, and demonstrates methods for detection and remediation. We’ll cover best practices for content management systems (CMS), technical implementation, and ongoing monitoring.

Understanding the various forms of duplicate content, from temporary promotion pages to site migrations, is the first step. This includes recognizing the potential impact on search engine rankings, which can lead to decreased visibility and lower organic traffic. The table illustrating scenarios, impacts, and solutions helps visualize the risks and solutions. We’ll cover techniques like canonical tags, redirects, and robust content creation processes.

Proper implementation is key for mitigating these risks.

Understanding the Problem: Avoiding Short Term Duplicate Content Issues

Duplicate content, especially in the short term, can significantly harm a website’s search engine rankings. It confuses search engines, leading them to struggle in determining which version of the content is most relevant to users. This ultimately results in reduced visibility for the site and decreased organic traffic. Understanding the various forms of short-term duplicate content and its consequences is crucial for maintaining a healthy online presence.

Defining Short-Term Duplicate Content

Short-term duplicate content refers to instances where identical or near-identical content appears on a website within a relatively short period. This can include temporary content changes, like promotional pages, or content that is temporarily copied from another site. The critical aspect is the temporal nature of the duplication; the issue isn’t inherent to the content itself but to its presence at different points in time.

Forms of Duplicate Content

Duplicate content can manifest in several ways on a website. Near-identical pages are a common issue. For example, if a product page has a minor variation for a specific promotion, that can be seen as a near-duplicate. Another instance involves copying content from external sources. A website might temporarily use content from another site, perhaps during a temporary promotional period or a lack of in-house content, and this temporary reuse can create duplicate content.

Content snippets from various parts of a site appearing in different locations also count as a form of duplication.

Impact on Search Engine Rankings

Search engines use complex algorithms to determine the relevance and authority of web pages. When they encounter multiple instances of the same or similar content, they have difficulty identifying the most authoritative source. This ambiguity can lead to lower rankings for all the duplicate pages, reducing their visibility in search results. In practice, search engines tend to show one version and filter out the rest, and the version they choose may not be the one you intended, even if the duplicates are only present temporarily.

Common Causes of Short-Term Duplicate Content

Several factors can contribute to short-term duplicate content issues. Temporary content changes, such as promotional landing pages or seasonal sales, often involve near-duplicate content. Site migrations, even temporary ones, can lead to instances of duplicated content if not properly managed. Content management system (CMS) issues, like incorrect caching or indexing, can also cause short-term duplicates.

Scenario Comparison Table

| Scenario | Description | Impact on Search Rankings |
|---|---|---|
| Temporary Content Changes | Example: A promotion page added for a short period, a landing page for a limited-time offer, or a page containing content from another source for a limited time. | Potential decrease in visibility for related searches. The search engine may struggle to identify the most relevant version, resulting in a lower ranking for all related pages. |
| Site Migrations (Temporary) | A website undergoing a short-term migration might temporarily display the old content alongside the new content. | Potential for temporary rank fluctuations, as the search engine identifies multiple versions of the same content, causing confusion in identifying the correct source. |
| Content Syndication (Temporary) | Temporarily re-posting content from another source on a website for promotional purposes. | Potential for decreased visibility for related content, as the search engine might perceive it as duplicate content, especially if the content is not properly attributed or adapted. |

Prevention Strategies

Avoiding short-term duplicate content is crucial for maintaining a healthy website and protecting its search visibility. Effective prevention strategies are vital for maintaining a positive online presence and building trust with users. This section details key methods to avoid accidentally duplicating content during site updates and maintenance. Implementing robust content creation processes and early detection mechanisms is essential to mitigating the risk of duplicate content issues.

Proactive measures can save significant time and resources compared to dealing with duplicate content problems later on.

Content Creation Process

A structured content creation process significantly reduces the risk of unintentional duplication. This involves a clear workflow, consistent guidelines, and a dedicated content calendar to manage publishing schedules. Clear roles and responsibilities for content creation and review should also be defined to minimize ambiguity and ensure quality control. A content audit should be performed regularly to ensure that content is unique and accurately reflects the intended message.

Preventing Duplication During Updates

Preventing duplication during site updates or maintenance requires careful planning and execution. Employing a staging environment allows for testing updates and revisions without affecting the live site. This isolates potential duplicate content issues before they reach the public. Version control systems for content, like Git, can also track changes and help prevent unintentional duplication by allowing for easy rollback to previous versions.

Using Canonical Tags

Canonical tags are a crucial tool for managing duplicate content. They signal to search engines which version of a page is the preferred one, helping to avoid issues where search engines index multiple identical pages. Properly implemented canonical tags direct search engines to the intended, authoritative version of the page, so that ranking signals are consolidated there. For example, if a product page is reachable at several URLs (with tracking parameters, or in a printer-friendly version), a canonical tag on each variant points search engines to the main product URL. Translated pages are a different case: they are alternate versions rather than duplicates and are normally annotated with hreflang instead.

Identifying Duplicates Early

Implementing early detection mechanisms to identify duplicate content is essential. Regular content audits, using tools designed for duplicate content detection, should be a routine part of the site maintenance schedule. This helps in identifying potential issues before they negatively affect search rankings. Tools can help identify instances of identical or near-identical content across different pages. By using these tools, potential problems can be addressed early in the development process, ensuring a smooth and effective rollout of new content.

Examples of Using Canonical Tags

To illustrate the practical application of canonical tags, consider a blog post that is reachable both at its main URL and at a printer-friendly URL. The main URL is the primary, authoritative source. The printer-friendly version would include a canonical tag pointing to the main URL, so search engines consolidate the two into one indexed page instead of treating them as competing duplicates. Genuinely translated versions, such as English and Spanish editions of the same post, are not duplicates of each other; each normally keeps its own canonical URL and is linked to its counterpart with hreflang annotations.
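As a minimal sketch of this idea, the Python snippet below builds the canonical link element that every variant of a page would carry. The URLs and the helper name `canonical_link_tag` are hypothetical and chosen only for illustration; a real site would usually let its CMS or SEO plugin emit this tag.

```python
from html import escape


def canonical_link_tag(preferred_url: str) -> str:
    """Return a <link rel="canonical"> element pointing at the preferred URL."""
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'


# Hypothetical variants of one page; every variant declares the same preferred URL.
preferred = "https://example.com/blog/duplicate-content/"
variants = [
    "https://example.com/blog/duplicate-content/?utm_source=newsletter",
    "https://example.com/blog/duplicate-content/print/",
]
tags = {variant: canonical_link_tag(preferred) for variant in variants}
```

Because every variant maps to the same tag, search engines receive one consistent signal about which URL is preferred.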

Detection and Remediation

Short-term duplicate content issues, while often stemming from unintentional mistakes or technical glitches, can quickly damage a website’s search engine rankings and user experience. Addressing these problems promptly is crucial for maintaining a healthy online presence. This section delves into methods for detecting and rectifying such issues. Effective detection and remediation strategies are essential for maintaining a website’s integrity and search engine visibility.

A proactive approach to identifying and resolving duplicate content prevents significant damage to SEO and user trust.

Methods for Detecting Duplicate Content

Identifying duplicate content requires a systematic approach. Automated tools and manual checks are valuable resources for detecting issues, and using a combination of methods enhances the likelihood of finding problems.

- Using Auditing Tools: Specialized tools provide comprehensive reports on website content. These reports often highlight instances of duplicate content, identifying pages with similar or identical text. Tools like SEMrush, Ahrefs, and Moz offer such functionalities, assisting in identifying potential duplicate content issues across various pages on a website. Regular use of these tools allows for proactive identification and remediation of problems.

- Content Similarity Analysis: Specialized software analyzes website content for similarities. This software compares the text on different pages and flags those that share substantial overlaps. These tools often use sophisticated algorithms to detect nuanced similarities, so near-duplicates are caught even when exact matches are not evident. This approach is crucial in catching duplicate content that might not be entirely identical but still harms SEO.

- Manual Content Review: While automated tools are beneficial, a thorough manual review remains essential. Manually comparing related pages helps identify instances of unintended duplication, ensuring that no crucial content overlap is overlooked. This approach is especially helpful in identifying subtle differences that automated tools might miss, ensuring a comprehensive detection process.
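The content similarity analysis described above can be sketched in a few lines of Python. This is only one possible technique (word shingles compared with Jaccard similarity), not necessarily what any commercial tool uses; the function names and sample texts are assumptions made for illustration.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Split text into lowercase words and return the set of word n-grams."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)


# Hypothetical near-duplicate promotional pages.
page_a = "Our summer sale offers 20 percent off all running shoes this week."
page_b = "Our summer sale offers 20 percent off all hiking boots this week."
score = jaccard_similarity(page_a, page_b)
```

A score close to 1.0 suggests near-duplicates worth a manual look; where to draw the line is a judgment call that depends on the site.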

Structured Process for Identifying Short-Term Duplicate Content

A structured process streamlines the identification and remediation of short-term duplicate content issues. A clear sequence of steps ensures that the problem is tackled systematically.

- Identify Potential Duplicates: Using the aforementioned methods, pinpoint pages exhibiting signs of content similarity. This initial step involves using a combination of automated and manual processes.

- Analyze Content Similarity: Carefully examine the identified pages for any similarities. Consider the context, the content’s meaning, and its overall presentation.

- Determine Origin of Duplication: Investigate the source of the duplicate content. Determine whether it stems from content copying, accidental duplication during content updates, or other technical reasons. Understanding the origin is key to choosing the correct remediation strategy.

- Prioritize Remediation: Evaluate the severity of each identified duplicate content issue. Prioritize fixes based on the impact on search engine rankings and user experience. This will ensure the most important issues are tackled first.

Tools and Techniques for Finding Duplicate Content

A variety of tools and techniques aid in detecting duplicate content. A combination of these approaches enhances the efficiency and accuracy of the detection process.

- Copyscape: This popular tool helps identify instances of copied content on the web. It compares a page’s content against a vast database of other websites to pinpoint potential duplicates.

- Siteliner: This tool offers a comprehensive website analysis, highlighting issues like duplicate content and other concerns. It helps identify areas of overlap and duplication within a website’s content.

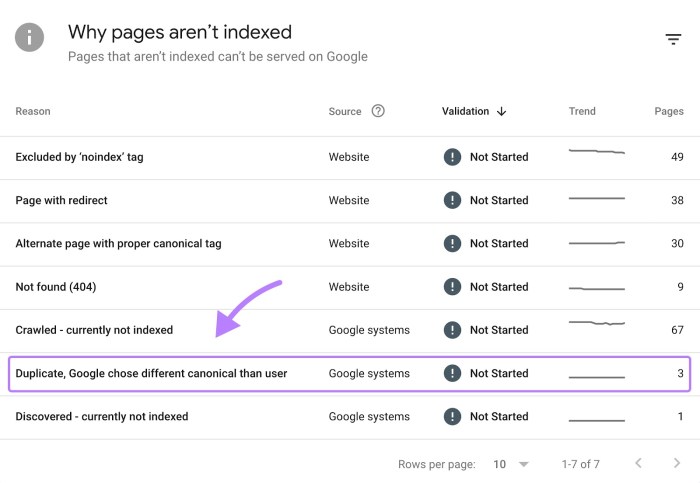

- Google Search Console: This free tool from Google provides valuable insights into how search engines view a website. It can flag issues like duplicate content, helping website owners identify problems early.

Fixing Identified Duplicate Content Problems

Once duplicate content is identified, implementing appropriate solutions is crucial. This section outlines various approaches to resolve short-term issues effectively.

- Canonicalization: Use canonical tags to signal to search engines which version of a page is the primary one. This helps avoid indexing issues and ensures that search engines understand the correct version of the page.

- Redirection: Redirect users and search engines to the preferred version of a page using 301 redirects. This ensures that users and search engines are directed to the correct content and preserves the link equity.

- Content Modification: If possible, modify the duplicate content to make it unique. Adding new information, rewriting sections, or changing the presentation can effectively resolve duplication issues.

Comparing Solutions for Duplicate Content Issues

Several methods exist for resolving duplicate content issues. Choosing the most suitable method depends on the specifics of the duplication.

| Method | Description | Pros | Cons |
|---|---|---|---|
| Canonicalization | Specifies the primary version of a page | Simple, effective for SEO | May not be suitable for all cases |
| Redirection | Redirects users and search engines to the preferred version | Preserves link equity | Requires proper implementation to avoid issues |
| Content Modification | Creating unique content | Effective long-term solution | Time-consuming and might not be feasible in all cases |

Content Management System (CMS) Best Practices

Successfully managing content, especially in a fast-paced digital environment, requires robust strategies to prevent short-term duplicate content issues. Implementing best practices within your Content Management System (CMS) is crucial for maintaining a clean and optimized online presence. These best practices not only enhance search engine visibility but also safeguard your brand reputation. Effective CMS management goes beyond simply uploading content.

It necessitates proactive measures to identify and avoid potential duplicate content issues, particularly when dealing with temporary content updates, revisions, or staging areas. By understanding and applying these strategies, you can ensure your content is always unique and well-managed within your chosen CMS.

Content Creation and Update Guidelines

Implementing strict guidelines for content creation and updates within a CMS is fundamental to avoiding duplicates. These guidelines should be readily accessible and consistently enforced by all content creators. This approach fosters a uniform approach to content management and reduces the risk of unintentional duplication.

- Version Control: Employ a robust version control system within your CMS. This allows for tracking changes, comparing different versions, and reverting to previous states if necessary. It significantly minimizes the risk of accidental duplication when editing content.

- Content Staging: Implement a dedicated staging area for new or revised content before publishing. This area should be distinct from the live content, ensuring no accidental merging or publishing of duplicate content.

- Content Templates: Leverage templates to standardize content structure and formatting. Using consistent templates reduces variations and accidental duplication of content structure.

- Content Approval Process: Establish a content approval process. This step involves careful review of content before publication. This allows for a final check to identify and eliminate duplicate content or errors.

- Clear Content Guidelines: Create and distribute clear guidelines for content creators regarding formatting, style, and best practices. This approach ensures a consistent content approach and reduces potential for unintentional duplicates.

Managing Temporary Content Changes

Temporary content changes, such as A/B testing or short-term promotions, can lead to duplication if not handled carefully. Understanding the best practices for managing temporary changes is crucial to prevent these issues.

- Staging Environment Use: Utilize the CMS’s staging environment for all temporary changes. This isolates temporary content and prevents it from interfering with live content.

- Version Control Tracking: Track changes meticulously using the CMS’s version control system. This approach allows you to easily revert to previous versions if needed.

- Careful Publishing Procedures: Implement a precise publishing schedule for temporary content changes. This ensures changes are only released when intended.

- Duplicate Content Checks: Conduct rigorous duplicate content checks on the temporary content before publishing. This is a crucial step to prevent the introduction of duplicates into the live site.
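The pre-publish duplicate check in the last bullet could look roughly like the sketch below. It only catches exact matches after normalizing whitespace and case; the helper names and the `live_pages` mapping are hypothetical stand-ins for whatever your CMS exposes.

```python
import hashlib
import re
from typing import Dict, Optional


def content_fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and case, so trivial edits still match."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_existing_duplicate(draft: str, live_pages: Dict[str, str]) -> Optional[str]:
    """Return the URL of a live page whose normalized text equals the draft, if any."""
    draft_print = content_fingerprint(draft)
    for url, body in live_pages.items():
        if content_fingerprint(body) == draft_print:
            return url
    return None


# Hypothetical live content and a temporary promo draft about to be published.
live_pages = {"/sale/": "Save 20% on all shoes.", "/about/": "We sell shoes."}
draft = "  save 20%   on ALL shoes. "
clash = find_existing_duplicate(draft, live_pages)
```

Here `clash` identifies the live page the draft would duplicate, so the publish step can be blocked or the draft can be given a canonical tag first.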

Utilizing CMS Features for Duplicate Content Avoidance

Many CMS platforms offer features specifically designed to prevent duplicate content issues. Leveraging these functionalities can greatly improve content management efficiency.

- Content Duplication Prevention Tools: Some CMSs have built-in tools to detect and prevent duplicate content. Look for these features within your platform.

- Permalink Management: Manage permalinks carefully. Ensure unique and descriptive URLs for each piece of content to avoid conflicts and potential duplication.

- Content Caching: Understand how your CMS handles content caching. Misconfigured caching can lead to the display of outdated or duplicate content.

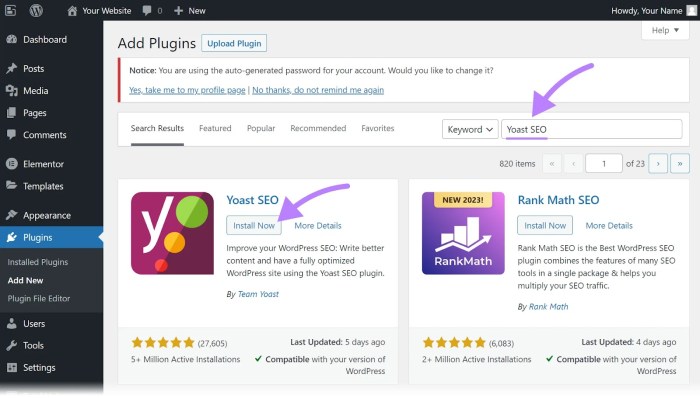

- SEO Tools: Utilize SEO tools within your CMS to optimize content for search engines. These tools can help identify potential duplicate content issues and offer solutions.
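For the permalink management point in the list above, here is a small sketch of how unique URLs might be enforced when content is created. The functions `slugify` and `unique_permalink` are illustrative assumptions, not the API of any particular CMS.

```python
import re


def slugify(title: str) -> str:
    """Lowercase the title and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def unique_permalink(title: str, taken: set) -> str:
    """Return a slug not yet in `taken`, appending -2, -3, ... when needed."""
    base = slugify(title) or "page"
    slug, counter = base, 2
    while slug in taken:
        slug = f"{base}-{counter}"
        counter += 1
    taken.add(slug)  # remember the slug so later titles cannot reuse it
    return slug


# Hypothetical usage: two posts with nearly the same title get distinct URLs.
taken_slugs = set()
first = unique_permalink("Summer Sale!", taken_slugs)
second = unique_permalink("Summer sale", taken_slugs)
```

A numeric suffix keeps URLs unique, though a colliding title is also a useful prompt to check whether the new page duplicates an existing one.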

Technical Implementation

Dealing with temporary content changes and preventing duplicate content from harming your website’s search engine ranking requires a robust technical approach. This involves implementing strategies that manage redirects efficiently, prevent indexing of unwanted content, and maintain a clean, consistent site structure for search engines. Proper technical implementation ensures that search engines understand your site’s content and its evolution, avoiding confusion and penalties.

Redirects and Canonical Tags

Effective management of temporary content changes hinges on the strategic use of redirects and canonical tags. Redirects are crucial for guiding users and search engines to the correct, updated version of a page, while canonical tags help search engines understand the preferred version of a page among multiple potential duplicates. Implementing redirects and canonical tags correctly helps prevent issues like duplicate content penalties and maintains a smooth user experience.

Implementing Redirects

Redirects are essential for seamlessly transferring users and search engine crawlers to the new location of a page. Temporary content changes often necessitate redirects. Implementing the right redirect type is key to minimizing negative impact on search engine visibility.

- 301 Redirects: These are permanent redirects, signifying a permanent change in a page’s location. Use them for permanent relocations, such as when a page has been moved to a new URL permanently. They effectively pass link equity to the new location, helping maintain search engine rankings. For example, if a product page is moved to a new URL, a 301 redirect ensures that search engines and users are directed to the new location, preserving the previous page’s value.

- 302 Redirects: These are temporary redirects, used when the change is expected to be short-lived and the original URL will return. Use them to send visitors to a substitute page for a limited time, such as during a promotional period or while a page is being reworked. A 302 conveys to search engines that the page’s location is temporary, so they generally keep the original URL indexed, and it is commonly considered to carry less weight than a 301 in passing link equity. For instance, a page under maintenance could use a 302 redirect to guide users to a temporary message while the page is being updated.
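To make the distinction between the two redirect types concrete, the following sketch models a redirect table in Python. The `RedirectRule` class, the `resolve` helper, and the example paths are hypothetical; real redirects are normally configured in the web server or CMS rather than in application code like this.

```python
from dataclasses import dataclass
from http import HTTPStatus


@dataclass(frozen=True)
class RedirectRule:
    source: str
    target: str
    permanent: bool

    @property
    def status(self) -> HTTPStatus:
        """301 for permanent moves, 302 for temporary ones."""
        return HTTPStatus.MOVED_PERMANENTLY if self.permanent else HTTPStatus.FOUND


def resolve(path: str, rules: list) -> tuple:
    """Return (status code, location) for a path, or (200, path) if no rule matches."""
    for rule in rules:
        if rule.source == path:
            return int(rule.status), rule.target
    return int(HTTPStatus.OK), path


# Hypothetical rules: one permanent move and one short-lived promotion.
rules = [
    RedirectRule("/old-product/", "/new-product/", permanent=True),
    RedirectRule("/shop/", "/summer-sale/", permanent=False),
]
```

Keeping the permanent flag explicit on each rule makes it harder to leave a temporary promotion redirect in place after the promotion ends, or to mark a permanent move as temporary by accident.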

Canonical Tags

Canonical tags are HTML elements that specify the preferred version of a page when multiple URLs point to the same or very similar content. This helps search engines understand which version is the authoritative one, preventing duplicate content issues. They’re essential for handling scenarios with slight variations of content or URLs, like different product variations or similar articles.

- Implementation: A canonical tag is added to the `<head>` section of the HTML document. The tag points to the preferred URL, instructing search engines to treat that version as the original. This helps consolidate link equity on the preferred URL so that duplicate versions do not compete with it in search results.
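A canonical tag takes the form `<link rel="canonical" href="...">`. As a hedged sketch, the Python snippet below uses the standard library HTML parser to check that a page declares a canonical URL and that the tag sits inside the head of the document. The `CanonicalFinder` class and the sample page are illustrative assumptions.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect canonical URLs and note whether each one sits inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.canonicals = []  # list of (href, found_in_head) tuples

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "link":
            attr = dict(attrs)
            rels = (attr.get("rel") or "").lower().split()
            if "canonical" in rels:
                self.canonicals.append((attr.get("href"), self.in_head))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


# Hypothetical page markup used only to exercise the parser.
page = """<html><head><title>Blue Widget</title>
<link rel="canonical" href="https://example.com/widgets/blue/">
</head><body><p>Blue widget details.</p></body></html>"""

finder = CanonicalFinder()
finder.feed(page)
```

A page with no entries in `finder.canonicals`, more than one entry, or an entry found outside the head is worth reviewing by hand.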

Robots.txt

The robots.txt file is a crucial tool for controlling which parts of your website search engine crawlers can access. By strategically using this file, you can keep crawlers away from unwanted or temporary content, which reduces the chance of duplicate content being picked up.

- Disallowing Crawling: By specifying rules in the robots.txt file, you can instruct search engines not to crawl specific pages or directories. This is particularly useful for temporary content, like outdated pages or promotional materials that you do not want crawlers to fetch. Note that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, so pages that must stay out of the index are better handled with a noindex directive or by removing them.
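Before deploying robots.txt rules, it can help to test them. The sketch below feeds a hypothetical rule set to Python’s standard `urllib.robotparser` and checks which paths a crawler would be allowed to fetch; the directory names and the `may_crawl` helper are assumptions for illustration. Keep in mind that these rules govern crawling only, so a disallowed URL is not guaranteed to stay out of search results.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers out of temporary and staging areas.
ROBOTS_TXT = """\
User-agent: *
Disallow: /promo-archive/
Disallow: /staging/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_crawl(path: str, agent: str = "*") -> bool:
    """Report whether the rules above let the given crawler fetch the path."""
    return parser.can_fetch(agent, f"https://example.com{path}")


staging_allowed = may_crawl("/staging/draft.html")
blog_allowed = may_crawl("/blog/post/")
```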

Redirect Type Comparison

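The following table summarizes the two redirect types described above and their impact on search engine visibility.

| Redirect Type | Meaning | Typical Use | Impact on Search Visibility |
|---|---|---|---|
| 301 | Permanent redirect | A page has been moved to a new URL permanently | Passes link equity to the new location, helping maintain rankings |
| 302 | Temporary redirect | Short-term changes, such as maintenance or promotional periods | Signals that the location is temporary; carries less weight than a 301 in passing link equity |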

Monitoring and Reporting

Staying ahead of duplicate content issues requires a proactive approach that goes beyond initial prevention. Effective monitoring and reporting are crucial for identifying and addressing problems quickly, maintaining search engine rankings, and preserving brand reputation. This proactive approach involves setting up systems for constant monitoring, generating reports, and using analytics to measure the impact of any duplicate content.

Monitoring Strategies for Duplicate Content

Consistent monitoring of a website for duplicate content is essential for maintaining its health and search engine visibility. Several strategies can be implemented to achieve this goal. Regular crawls of the site, comparing the content of different pages, and checking for identical or near-identical content on external websites are all important components. These strategies need to be automated to be effective in catching issues quickly.

Alerting and Notification Systems

Setting up alerts and notifications is crucial for prompt responses to potential duplicate content problems. These systems should be triggered by the detection of significant similarities or identical content across pages or with external sites. A well-designed notification system can be configured to prioritize alerts based on the severity of the duplicate content issue, such as whether the issue is on a high-traffic page or affects core content.

This allows for a timely response to potentially damaging situations. For example, a notification should be sent to the website administrators immediately if a significant portion of the homepage is detected as being duplicated.
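One way to express this kind of prioritization is sketched below. The `DuplicateFinding` fields, the severity labels, and both thresholds are assumptions picked for illustration, and should be tuned to the site’s own traffic and tolerance for overlap.

```python
from dataclasses import dataclass


@dataclass
class DuplicateFinding:
    url: str
    similarity: float        # 0.0 to 1.0, from whatever detector is in use
    monthly_visits: int
    is_core_page: bool = False


def severity(finding: DuplicateFinding,
             similarity_floor: float = 0.8,
             high_traffic: int = 10_000) -> str:
    """Classify a finding as 'ignore', 'routine', or 'urgent'."""
    if finding.similarity < similarity_floor:
        return "ignore"   # overlap too small to count as duplication
    if finding.is_core_page or finding.monthly_visits >= high_traffic:
        return "urgent"   # notify administrators immediately
    return "routine"      # include in the next scheduled report


# Hypothetical finding: most of the homepage matches another page.
homepage = DuplicateFinding("/", similarity=0.95, monthly_visits=500, is_core_page=True)
level = severity(homepage)
```

With these assumed thresholds, a duplicated homepage is escalated right away even if its measured traffic is modest, which matches the example in the text.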

Data Collection and Reporting

Collecting data on duplicate content is crucial for understanding the scope of the problem and evaluating the effectiveness of solutions. A comprehensive reporting system should be designed to capture the URLs of duplicate content, the degree of similarity, the source of the duplicate content (if applicable), and the impact on site traffic and search engine rankings. This detailed data allows for a thorough analysis and the identification of trends.

A report should also show the number of pages affected, the percentage of duplicate content, and a ranking of affected pages by importance.

Using Analytics Tools for Impact Assessment

Analytics tools play a vital role in understanding the impact of duplicate content on website traffic and search engine rankings. Tools like Google Analytics and Search Console can be used to monitor changes in traffic patterns, rankings, and bounce rates after the implementation of duplicate content solutions. By tracking these metrics, website owners can gauge the effectiveness of their duplicate content solutions and identify areas for improvement.

This data helps in understanding the impact of the duplicate content on user engagement and the search engine’s perception of the site.

Metrics for Evaluating Duplicate Content Solutions

The effectiveness of implemented duplicate content solutions can be measured using various metrics. The table below lists some key metrics for tracking success.

| Metric | Description | How to Measure | Target |
|---|---|---|---|
| Unique Content Percentage | Percentage of content on the site that is original and not duplicated. | Compare the amount of unique content to the total content. | High (e.g., >90%) |
| Duplicate Content Volume | Amount of duplicate content found on the site. | Track the number of pages affected by duplicate content. | Low (e.g., <5% of total pages) |
| Search Engine Ranking Fluctuation | Changes in search engine rankings after duplicate content mitigation. | Track rankings for target keywords before and after implementing solutions. | Stable or improved |
| Website Traffic Trends | Changes in website traffic after duplicate content mitigation. | Analyze website traffic data (e.g., unique visitors, bounce rate) before and after implementing solutions. | Stable or improved |
| Crawl Rate | The frequency with which search engine crawlers visit the website. | Monitor crawl rate data using Google Search Console. | High |
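The first two metrics in the table reduce to simple arithmetic once an audit has counted the affected pages. The sketch below assumes both are measured at page level (pages flagged as duplicates versus total pages), which is a simplification; the function name and the sample counts are hypothetical, and the thresholds are the example targets from the table.

```python
def duplicate_metrics(total_pages: int, duplicate_pages: int) -> dict:
    """Compute page-level unique and duplicate percentages and check the example targets."""
    if total_pages <= 0:
        raise ValueError("total_pages must be positive")
    if not 0 <= duplicate_pages <= total_pages:
        raise ValueError("duplicate_pages must be between 0 and total_pages")
    duplicate_pct = 100 * duplicate_pages / total_pages
    unique_pct = 100 - duplicate_pct
    return {
        "unique_pct": unique_pct,
        "duplicate_pct": duplicate_pct,
        # Targets from the table: more than 90% unique, fewer than 5% of pages duplicated.
        "meets_targets": unique_pct > 90 and duplicate_pct < 5,
    }


# Hypothetical audit result: 6 of 200 pages flagged as duplicates.
report = duplicate_metrics(total_pages=200, duplicate_pages=6)
```

Tracking these numbers after each audit shows whether remediation work is actually reducing the share of duplicated pages over time.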

Final Review

In summary, avoiding short-term duplicate content issues requires a multifaceted approach. From proactively preventing duplicates during updates to effectively detecting and remediating existing problems, this comprehensive guide provides actionable steps. Mastering CMS best practices, technical implementations like redirects and canonical tags, and consistent monitoring are vital for maintaining a strong online presence. By following these strategies, you can safeguard your site’s health and enhance its search engine visibility.

Ultimately, preventing duplicate content is a critical aspect of maintaining a high-quality website.