Google Automatic Marketing Content Extraction unlocks a powerful way to gather valuable marketing insights. This process allows businesses to automatically extract marketing content like blog posts, product descriptions, and social media updates from Google search results, Google My Business listings, and more. Understanding the different types of content, extraction methods, and potential applications is key to harnessing the full potential of this technique.

From web scraping to APIs and machine learning, various methods offer different advantages and disadvantages. This exploration delves into the practicalities of choosing the right approach for specific needs, examining the potential pitfalls, and highlighting essential considerations for ethical and legal compliance.

Defining Google Automatic Marketing Content Extraction

Unveiling the power of automated systems to sift through Google’s vast digital landscape and extract valuable marketing content is a game-changer for businesses. This technology offers a streamlined approach to gather and organize data for strategic marketing initiatives. Imagine having access to a treasure trove of relevant information, instantly and effortlessly, without the need for manual labor. This automated extraction allows for faster insights, enhanced efficiency, and better-informed decision-making.

This process involves leveraging algorithms and machine learning models to identify and categorize marketing content scattered across various Google platforms.

Automated extraction of marketing content from Google is useful, but optimizing your content for social media is key to success. Understanding the ideal content length and posting frequency for each social media platform directly affects how well your extracted content performs. Ultimately, combining automated extraction with a strategic social media approach is crucial for maximizing your marketing results.

Automated extraction essentially acts as a digital assistant, searching and sorting through information to provide businesses with the specific marketing data they need. This automation can dramatically reduce the time and resources needed to analyze marketing efforts and understand consumer trends.

Types of Extracted Marketing Content

A wide array of marketing content can be extracted, each providing unique insights into consumer behavior and market trends. This includes not only readily available content like blog posts and product descriptions, but also encompasses less obvious sources like social media updates and customer reviews. The process captures details from various sources, allowing for a comprehensive understanding of the marketing landscape.

- Blog Posts: These provide valuable insight into industry trends, customer concerns, and company viewpoints. They offer a wealth of information for understanding customer needs and building brand awareness.

- Product Descriptions: These detailed explanations of products offer crucial data points about features, benefits, and pricing strategies. This helps businesses understand the value proposition of their products.

- Social Media Updates: These represent real-time conversations and engagement, reflecting customer sentiment and current trends. This real-time data provides a fast snapshot of public perception.

- Customer Reviews: These are invaluable feedback sources. They provide an unfiltered perspective on products and services, allowing businesses to identify areas for improvement.

Sources of Extracted Content on Google

The range of sources for extracting marketing content is broad, encompassing many areas within Google’s ecosystem. This automated approach allows businesses to tap into various data points.

- Search Results: Extracted content from search results can offer valuable information on competitor strategies, emerging trends, and consumer demands. This data helps businesses understand what information is important to their target audience.

- Google My Business Listings (now Google Business Profile): These business profiles contain essential details about services, locations, and customer reviews. This information gives a clearer picture of local market presence and customer interactions.

- Google News: News articles and reports related to the industry or target market can offer a broad understanding of current events and their impact on the industry. This helps businesses stay ahead of the curve and respond to changing circumstances.

Formats for Extracted Content

The extracted content can be delivered in various formats to best suit the needs of the user. Different formats allow for easy integration and analysis of the data.

- Text: Simple text format is easy to process and analyze, allowing for rapid understanding of key themes and sentiments. It is suitable for basic analysis and trend identification.

- HTML: This format allows for the extraction of structured data from web pages, including images and links. This is particularly useful for analyzing content format, style, and visual elements.

- JSON: This structured format provides a well-organized way to store and retrieve data. This allows for easy manipulation and analysis using programming tools and databases.
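As a minimal sketch of the JSON format described above, the snippet below serializes one extracted item with Python's standard `json` module. The field names (`source`, `content_type`, `text`, `url`) and the sample values are illustrative assumptions, not a schema defined by Google or by any particular tool.

```python
import json

# Hypothetical record for one extracted item; the field names are assumptions.
record = {
    "source": "search_results",
    "content_type": "product_description",
    "text": "Lightweight trail shoe with a waterproof upper.",
    "url": "https://example.com/products/trail-shoe",
}


def to_json(item: dict) -> str:
    """Serialize an extracted item to a JSON string with a stable key order."""
    return json.dumps(item, ensure_ascii=False, sort_keys=True, indent=2)


def from_json(payload: str) -> dict:
    """Parse a JSON string back into a dictionary."""
    return json.loads(payload)


serialized = to_json(record)
restored = from_json(serialized)
```

Sorting the keys keeps the output stable between runs, which makes stored files easier to compare.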

Classifying Extracted Content Types

A robust framework is needed to categorize the extracted content effectively. This structure helps businesses organize and analyze the data efficiently. A simple framework could be based on the following criteria.

| Category | Description |
|---|---|
| Product Information | Details about specific products, including features, benefits, and pricing. |
| Market Trends | Information about industry trends, competitor activities, and consumer behavior. |
| Customer Feedback | Customer reviews, ratings, and comments related to products or services. |
| Company Information | Details about the company, including its mission, values, and recent activities. |
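One way to apply a framework like this is a simple keyword-based classifier. The sketch below is illustrative only: the keyword lists are hypothetical, and a production system would more likely use a trained model than word counts.

```python
# Hypothetical keyword lists for the four categories in the table above.
CATEGORY_KEYWORDS = {
    "Product Information": {"price", "feature", "features", "specification", "benefit"},
    "Market Trends": {"trend", "trends", "competitor", "market", "industry"},
    "Customer Feedback": {"review", "rating", "stars", "complaint", "recommend"},
    "Company Information": {"mission", "values", "founded", "announces", "headquarters"},
}


def classify(text: str) -> str:
    """Return the category whose keywords appear most often, or 'Uncategorized'."""
    # Lowercase each word and strip surrounding punctuation before matching.
    words = [w.strip(".,!?:;()\"'").lower() for w in text.split()]
    scores = {
        category: sum(1 for w in words if w in keywords)
        for category, keywords in CATEGORY_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "Uncategorized"


feedback_label = classify("Great shoes, five stars, would recommend")
product_label = classify("The price includes every feature listed")
unknown_label = classify("Hello world")
```

When two categories tie, the one listed first in `CATEGORY_KEYWORDS` wins, so the order of that dictionary is itself a design decision.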

Methods for Content Extraction

Extracting valuable information from the vast ocean of online data is crucial for businesses and researchers. Automatic content extraction from Google search results streamlines this process, saving time and effort. Understanding the different methods available is essential for selecting the best approach for specific needs. Choosing the right method depends on the nature of the content and the desired outcome.

Various techniques exist for automatically extracting content from Google search results. These range from simple web scraping to sophisticated machine learning algorithms. Each approach possesses unique advantages and disadvantages, making careful consideration necessary. The efficiency and reliability of the chosen method directly impact the accuracy and completeness of the extracted data.

Common Content Extraction Techniques

Different methods are available for extracting content automatically from Google search results. Web scraping, APIs, and machine learning are among the most common approaches. Each technique has its own strengths and weaknesses.

- Web Scraping: This method involves automatically downloading and parsing HTML code from web pages. It’s a versatile technique capable of handling various content types, including structured and unstructured data. However, it can be slow and unreliable because page layouts change, sites may block automated requests, and a site’s robots.txt file may disallow crawling of the pages you need.

- APIs: Application Programming Interfaces (APIs) provide a structured and controlled way to access data. These APIs are designed for specific purposes and often offer greater reliability and speed compared to web scraping. However, they typically have limited scope and may require subscriptions or usage fees. For instance, Google’s Custom Search JSON API allows programmatic access to search results as structured data, subject to usage quotas.

- Machine Learning: Machine learning algorithms can be trained to identify and extract specific patterns and information from text. This approach can be more effective in extracting complex or nuanced information compared to simpler methods. However, training machine learning models requires significant computational resources and a large dataset, making it a more complex and resource-intensive approach. Example use cases include extracting key phrases, sentiments, and entities from news articles.
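The parsing half of web scraping can be sketched with Python's standard library alone. This example uses `html.parser` on an inline sample page rather than a dedicated scraping library, and it does not fetch anything over the network; the `ParagraphExtractor` class and `SAMPLE_HTML` string are hypothetical names introduced for illustration.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the page title and the text of each <p> element."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.paragraphs = []
        self._current = None  # tag currently being captured, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "p"):
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        # Text inside nested inline tags such as <b> is captured as well.
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            text = " ".join("".join(self._buffer).split())
            if tag == "title":
                self.title = text
            elif text:
                self.paragraphs.append(text)
            self._current = None


def extract(html: str) -> dict:
    """Parse an HTML string and return its title and paragraph texts."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "paragraphs": parser.paragraphs}


# Hypothetical page content standing in for a downloaded document.
SAMPLE_HTML = """
<html><head><title>Trail Shoe</title></head>
<body>
  <p>Lightweight shoe with a <b>waterproof</b> upper.</p>
  <p>Free shipping on all orders.</p>
</body></html>
"""

result = extract(SAMPLE_HTML)
```

Because the extractor depends on specific tag names, a change in page layout can silently alter its output, which is the fragility noted in the list above.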

Choosing the Right Method

The optimal extraction method depends on the specific task and the type of content. Factors to consider include the structure of the data, the desired extraction depth, the frequency of data retrieval, and the budget.

| Method | Content Type | Pros | Cons |
|---|---|---|---|
| Web Scraping | HTML, Unstructured Data, Dynamically Generated Content | Versatile, Relatively Easy to Implement | Slow, Inconsistent Data, Potential for Errors, Risk of getting blocked by websites |
| APIs | Structured Data, Specific Data Sets | Reliable, Fast, Controlled Access | Limited Scope, Potential for high costs, Often Requires Subscriptions |
| Machine Learning | Complex Data, Nuances, Patterns, Sentiment Analysis | Highly Accurate, Adaptable, Scalable | High Computational Cost, Requires Significant Data, Complex Implementation |

Example Scenarios

Consider a scenario where you need to extract product reviews from e-commerce sites. Web scraping might be a good initial choice, but its unreliability necessitates monitoring and handling potential website changes. Alternatively, if you need to extract structured data like product specifications from a website with an API, using an API is likely a better option. For a more nuanced task like identifying trends in public opinion from news articles, machine learning algorithms would be the best approach.

Applications of Extracted Content

Tools that automatically extract marketing content from Google provide a wealth of data that can significantly enhance marketing campaigns. By intelligently identifying key information from various sources, these tools empower marketers to create more targeted, engaging, and effective strategies. This data-driven approach allows for greater efficiency and improved ROI.

This section covers the main applications of extracted content: content creation, personalization, audience targeting, competitive analysis, and market research. The ability to quickly and accurately gather insights from a vast pool of information empowers marketers to make informed decisions and execute more effective campaigns.

Content Creation

Extracted content facilitates the repurposing of existing information into fresh, new formats. This streamlined process reduces the time and effort needed for content creation, allowing marketers to quickly adapt and optimize their campaigns. For instance, a product description can be easily transformed into compelling social media posts or blog articles. This rapid transformation is particularly valuable in maintaining a consistent brand voice and message across different channels.

Personalization

Leveraging extracted content enables personalized marketing strategies that cater to individual customer needs and preferences. By identifying specific customer interests and behaviors, marketers can tailor their messaging and offerings. This approach not only increases customer engagement but also drives conversions by creating a more relevant and engaging experience. For example, extracted data from customer purchase history can be used to recommend related products or services.

Audience Targeting

The ability to identify and segment target audiences is greatly enhanced by extracted content. Marketers can now pinpoint specific demographics, interests, and behaviors to craft tailored campaigns that resonate deeply with the intended audience. This precision targeting leads to a higher likelihood of success and better conversion rates. For instance, understanding the language and cultural preferences of a target audience can guide content creation, improving campaign effectiveness.

Competitive Analysis

Extracted content provides valuable insights into competitor activities, strategies, and market positions. This data-driven approach enables a deeper understanding of the competitive landscape, facilitating the development of effective strategies for differentiation and growth. Marketers can gain a comprehensive understanding of competitor strengths, weaknesses, and pricing strategies, informing their own marketing efforts.

Google’s automatic marketing content extraction is a game-changer, but it’s just one piece of the puzzle. To really maximize your online presence, exploring strategies like those detailed in the 32 ways your ecommerce company can boost engagement and sales guide is crucial. Ultimately, leveraging both automated tools and targeted strategies will be key to crafting effective, engaging content for your marketing efforts.

Market Research

Automated content extraction tools are powerful instruments for gathering valuable market research data. Identifying market trends, emerging needs, and customer preferences becomes significantly easier. This approach empowers businesses to proactively adapt to evolving market dynamics and capitalize on emerging opportunities. For example, by analyzing social media conversations and news articles, businesses can quickly identify evolving consumer trends.

Summary of Marketing Applications

| Application | Use Case | Example |
|---|---|---|
| Content Creation | Repurposing content | Transforming product descriptions into social media posts |
| Personalization | Tailoring messaging to individual customers | Recommending products based on past purchases |
| Audience Targeting | Identifying and segmenting target audiences | Crafting campaigns based on demographics and interests |
| Competitive Analysis | Understanding competitor strategies | Analyzing competitor pricing and promotions |
| Market Research | Identifying market trends and customer preferences | Analyzing social media discussions about products |

Challenges and Limitations

Automatic marketing content extraction from Google presents several hurdles. While the technology holds promise, significant limitations exist in terms of data quality, accuracy, and ethical considerations. Understanding these challenges is crucial for developing robust and responsible extraction methods.

The process of automatically extracting marketing content from Google’s vast and dynamic web environment is complex. Numerous factors influence the reliability and consistency of the extracted data, making it challenging to achieve perfect accuracy. Moreover, ethical implications and legal restrictions further complicate the landscape.

Data Quality and Accuracy

The accuracy of extracted content hinges on the quality of the data source. Google’s search results are dynamic and constantly evolving. New information emerges, old pages get updated or removed, and content formatting changes, all of which can affect the accuracy and consistency of extracted information. For example, a website offering product information might update its pricing or product descriptions, making previously extracted data outdated or inaccurate.

Maintaining a consistent and accurate data set requires a robust mechanism for tracking and updating the information.
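One possible tracking mechanism is to store a fingerprint of each page's extracted text and compare it on the next run. The sketch below assumes a plain URL-to-hash dictionary as the store; the function names and sample data are hypothetical.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Return a SHA-256 hex digest of whitespace-normalized text."""
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_stale(stored: dict, fresh: dict) -> list:
    """List URLs whose freshly extracted text no longer matches the stored fingerprint."""
    return sorted(
        url for url, text in fresh.items()
        if stored.get(url) != fingerprint(text)
    )


# Hypothetical earlier extraction: URL -> fingerprint of the text seen then.
stored = {
    "https://example.com/a": fingerprint("Price: $20"),
    "https://example.com/b": fingerprint("In stock"),
}

# Hypothetical re-extraction of the same pages.
fresh = {
    "https://example.com/a": "Price: $25",
    "https://example.com/b": "In   stock",
}

stale = find_stale(stored, fresh)
```

Normalizing whitespace before hashing means that purely cosmetic spacing changes are not flagged, while a changed price is.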

Consistency and Completeness

Consistency in the extraction process is paramount for reliable data. Variability in website structures, content formatting, and Google’s search algorithms can lead to inconsistent extraction results. For instance, different websites may use various HTML tags to structure their content. If the extraction process doesn’t account for this variability, the extracted content may be incomplete or misrepresented. This inconsistency is exacerbated by Google’s dynamic nature, as algorithm updates can alter search results, impacting the reliability of extracted content.

Ethical Considerations and Bias

Extracting content from Google inevitably involves handling large datasets. These datasets may contain biased information, reflecting societal biases present in the original content. For instance, if a significant portion of the extracted content promotes a particular viewpoint or perspective, the extracted dataset may inherit this bias. Moreover, the extraction process itself can inadvertently introduce bias if the algorithms used are not designed to mitigate these issues.

Automated extraction of marketing content from Google is helpful for quickly pulling together data for new campaigns, but what about those older blog posts collecting digital dust? Updating old blog posts with fresh insights and relevant keywords can dramatically boost their effectiveness. Ultimately, using extracted data to refresh older content helps maintain a consistent flow of high-quality marketing material.

Ethical considerations require careful attention to avoid amplifying or perpetuating any existing biases.

Legal Restrictions and Compliance

Web scraping, the process of automatically extracting content from websites, is subject to legal restrictions and compliance issues. Many websites have robots.txt files that prohibit or restrict automated access to their content. Violating these restrictions can result in legal repercussions. Additionally, copyright and intellectual property laws may apply to the extracted content, requiring careful consideration to avoid infringement.

For example, scraping copyrighted material without permission can lead to legal challenges. Companies need to comply with terms of service and respect website owners’ guidelines to avoid legal problems.
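A robots.txt check can be sketched with Python's standard `urllib.robotparser` module. The robots.txt content and the `example-bot` user agent below are hypothetical; a real crawler would download the file from the target site. Passing this check is only one part of compliance and does not address terms of service or copyright.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would fetch it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Create a parser from robots.txt text that is already in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True when the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
blog_ok = is_allowed(parser, "example-bot", "https://example.com/blog/post")
private_ok = is_allowed(parser, "example-bot", "https://example.com/private/report")
```

Running the check before every request, rather than once per project, keeps the crawler aligned with the site's current rules.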

Strategies for Mitigating Challenges

To address these challenges, several strategies can be employed. Using robust data validation techniques can help identify and filter out inaccurate or inconsistent information. Regularly updating the extraction algorithms to adapt to changes in Google’s search results and website structures is essential. Developing strategies to detect and mitigate potential biases in the extracted data is crucial. Implementing measures to comply with legal restrictions and robots.txt guidelines can prevent legal issues.

Thorough testing and evaluation of the extraction process are vital for identifying and resolving any inconsistencies.

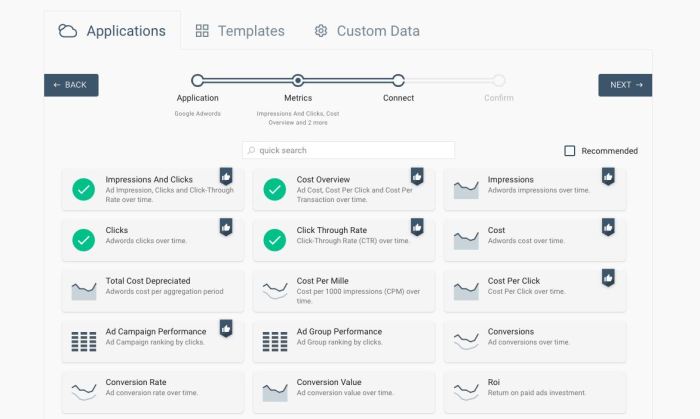

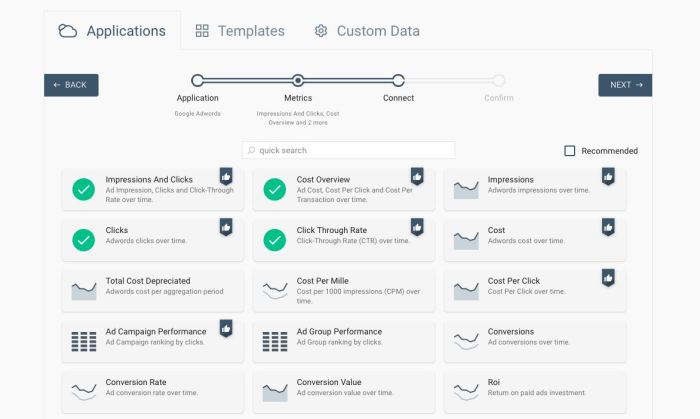

Tools and Technologies: Google Automatic Marketing Content Extraction

Unlocking the potential of Google’s vast information resources for automated marketing content extraction hinges on the right tools and technologies. These tools act as the bridges between the raw data available on Google and the actionable marketing insights you need. Choosing the appropriate tool depends heavily on your specific needs, budget, and technical expertise. From simple web scraping scripts to sophisticated AI-powered platforms, a wide array of options exists to streamline the process.

The availability of various tools and technologies empowers businesses to tailor their content extraction strategies. By leveraging these resources, companies can efficiently gather, analyze, and utilize marketing insights from diverse sources, leading to more effective campaigns and improved decision-making.

Open-Source Libraries

Open-source libraries are powerful tools for automating content extraction from Google and other web sources. They provide a flexible and cost-effective solution for developers. Their collaborative nature means they often benefit from continuous improvement and a large community for support.

- Beautiful Soup: A Python library for parsing HTML and XML documents. It provides methods to navigate and extract specific elements from web pages, enabling the extraction of data like product descriptions, reviews, and competitor analysis information. It’s highly versatile for different types of data formats found on Google Search results and other web pages.

- Scrapy: A powerful Python framework designed specifically for web scraping. It offers a structured approach to crawling websites, extracting data, and storing it in a structured format. Scrapy excels in handling complex websites with deep structures, making it suitable for large-scale content extraction projects.

- Selenium: A browser automation framework with bindings for Python and several other languages. This is particularly useful for websites that require JavaScript rendering or dynamic content updates, as Selenium can simulate user actions like clicking buttons or filling forms. It allows for the extraction of content that’s not directly available through HTML parsing.

Commercial Software

Commercial software packages often provide a more comprehensive and user-friendly experience for content extraction. These tools often include advanced features like data cleaning, transformation, and analysis capabilities.

- Outbrain: A platform for marketers focused on content discovery and promotion. It provides tools for content creation, optimization, and distribution, enabling marketers to reach wider audiences and drive more engagement. It may not be strictly for content *extraction* from Google, but its capabilities related to content management and discovery could be beneficial to those using extracted content.

- Mention: A social media monitoring tool that tracks brand mentions, industry conversations, and competitor activities. While not solely for content extraction, it can surface valuable data related to marketing conversations and trends, helping to understand the public perception of a product or service.

- Google Search Console: Although part of the Google ecosystem, Search Console can be used for understanding how Google indexes and ranks your content. This data can help identify areas for improvement and guide your marketing strategy. This isn’t for content *extraction*, but it’s a valuable tool for understanding the context of the extracted content.

Platform Considerations

The platform you choose for using these tools significantly impacts your workflow and the type of content you can extract.

- Cloud-based platforms: Cloud-based services provide scalable infrastructure for running large-scale extraction projects, potentially handling massive volumes of data. They are generally more flexible and adaptable to varying needs. They often offer advanced analytics and storage capabilities.

- Local installations: Local installations allow for greater control over the extraction process. However, they may require more technical expertise and resources for maintenance and scalability.

Tools Summary

| Tool | Specific Capabilities |
|---|---|
| Beautiful Soup | HTML/XML parsing, element extraction |
| Scrapy | Web scraping framework, structured data extraction |
| Selenium | Dynamic content extraction, simulating user actions |
| Outbrain | Content discovery, promotion, and optimization |
| Mention | Social media monitoring, brand mentions |
| Google Search Console | Website indexing and ranking analysis |

Data Handling and Storage

Managing extracted marketing content effectively hinges on robust data handling and storage strategies. This involves careful consideration of how to organize, clean, and store the information for future analysis and use. Poor data management can lead to wasted resources and inaccurate insights. A well-structured system, on the other hand, allows for easy access, analysis, and reporting, driving better marketing decisions.

Efficiently storing and processing extracted data is crucial for leveraging its value. This involves not just saving the content but also ensuring it’s organized in a way that allows for easy retrieval, manipulation, and integration with other data sources. This optimized approach unlocks the true potential of the extracted content.

Methods for Handling Extracted Content

Various methods exist for handling extracted content, each with its strengths and weaknesses. Choosing the right approach depends on the specific needs of the project and the volume of data involved. Raw data extraction often requires cleaning and preprocessing to ensure quality and consistency.

- Data Validation: Ensuring the accuracy and consistency of extracted data is paramount. This involves checking for missing values, inconsistencies in formats, and errors in the data itself. For instance, a website might have inconsistent product descriptions or pricing information. Validating this data prevents downstream errors in analysis.

- Data Transformation: Sometimes, extracted data needs to be converted into a different format or structure. This might involve changing the data type (e.g., converting a string to a number) or restructuring the data (e.g., merging multiple data sources). For example, converting unstructured data like social media posts into structured formats for analysis.

- Data Filtering: Removing irrelevant or unwanted data is essential. This might involve filtering out specific keywords, removing duplicate entries, or excluding data from certain time periods. Imagine filtering out irrelevant customer feedback from a large dataset to focus on specific product issues.
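The three handling steps above can be chained into a small pipeline. This is a sketch under stated assumptions: the required fields, the `$1,299.00` price format, and the blocked keyword are all hypothetical choices made for illustration.

```python
REQUIRED_FIELDS = ("name", "price", "description")  # hypothetical schema


def validate(record: dict) -> bool:
    """A record is valid when every required field is present and non-empty."""
    return all(str(record.get(field) or "").strip() for field in REQUIRED_FIELDS)


def transform(record: dict) -> dict:
    """Convert a price string such as '$1,299.00' to a float.

    Raises ValueError if the remaining text is not a number.
    """
    cleaned = record["price"].replace("$", "").replace(",", "").strip()
    return {**record, "price": float(cleaned)}


def keep(record: dict, blocked_keywords: set) -> bool:
    """Return False for records whose description contains a blocked keyword."""
    description = record["description"].lower()
    return not any(keyword in description for keyword in blocked_keywords)


def process(records: list, blocked_keywords: set) -> list:
    """Validate first, then transform, then filter."""
    valid = [r for r in records if validate(r)]
    transformed = [transform(r) for r in valid]
    return [r for r in transformed if keep(r, blocked_keywords)]


raw = [
    {"name": "Trail Shoe", "price": "$1,299.00", "description": "Waterproof upper"},
    {"name": "Road Shoe", "price": "", "description": "Missing price"},
    {"name": "Gift Card", "price": "$50", "description": "Sponsored listing"},
]

processed = process(raw, blocked_keywords={"sponsored"})
```

Validating before transforming means the conversion step never sees an empty price, which keeps each function small and easy to test on its own.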

Data Storage Solutions

Choosing the right storage solution is vital for scalability and efficiency. Various options exist, ranging from simple spreadsheets to complex database systems. Consider factors like data volume, complexity, and future needs.

- Databases: Relational databases (like MySQL, PostgreSQL) and NoSQL databases (like MongoDB, Cassandra) offer structured and scalable storage for large datasets. They are suitable for complex queries and relationships between different data points. Consider a database for managing customer profiles and their interactions with marketing materials.

- Cloud Storage: Cloud services (like AWS S3, Google Cloud Storage) offer scalable storage solutions for massive datasets. These are beneficial for handling large volumes of extracted data and enabling easy access and sharing. Cloud storage is ideal for archiving large volumes of social media posts.

- Spreadsheets: For smaller datasets or initial explorations, spreadsheets (like Google Sheets, Microsoft Excel) can be sufficient. They are user-friendly and provide basic data manipulation capabilities. They are great for quickly analyzing and visualizing smaller datasets of marketing campaign results.
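For the database option, the sketch below uses SQLite through Python's built-in `sqlite3` module as a lightweight stand-in for a server database such as MySQL or PostgreSQL. The `extracted_content` table and its columns are hypothetical.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS extracted_content (
            url TEXT PRIMARY KEY,
            category TEXT NOT NULL,
            body TEXT NOT NULL
        )
        """
    )
    return conn


def save(conn: sqlite3.Connection, url: str, category: str, body: str) -> None:
    """Store one item; INSERT OR REPLACE keeps a single row per URL."""
    conn.execute(
        "INSERT OR REPLACE INTO extracted_content (url, category, body) VALUES (?, ?, ?)",
        (url, category, body),
    )
    conn.commit()


def by_category(conn: sqlite3.Connection, category: str) -> list:
    """Return (url, body) pairs for one category, ordered by URL."""
    rows = conn.execute(
        "SELECT url, body FROM extracted_content WHERE category = ? ORDER BY url",
        (category,),
    )
    return rows.fetchall()


conn = open_store()
save(conn, "https://example.com/a", "Customer Feedback", "Great shoes")
save(conn, "https://example.com/b", "Product Information", "Waterproof upper")
save(conn, "https://example.com/a", "Customer Feedback", "Great shoes, fast delivery")
feedback = by_category(conn, "Customer Feedback")
```

Using the URL as the primary key means a re-extraction overwrites the older row instead of creating a duplicate.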

Organizing and Structuring Extracted Data

A well-structured dataset facilitates efficient analysis and retrieval. A clear schema defines the relationships between different data points and provides a standardized way to organize the extracted content.

- Schema Design: A clear schema defines the structure of the data, including fields, data types, and relationships between different data points. For example, a schema for customer data might include fields for name, email address, purchase history, and demographics.

- Data Modeling: Creating a data model maps out the relationships between different data entities. This visual representation helps understand how the data points interact and supports efficient data querying. A data model for e-commerce might link customer profiles to their order history and product information.

- Data Mapping: Mapping extracted data to a pre-defined schema ensures consistency and accuracy. For example, mapping social media post data to a structured format for analysis.
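Schema design and data mapping can be illustrated together. In this sketch a dataclass plays the role of the schema and a dictionary maps raw field names onto it; the `SocialPost` fields, the raw field names, and the Unix-timestamp input format are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class SocialPost:
    """Hypothetical target schema for an extracted social media post."""
    author: str
    text: str
    posted_at: datetime
    likes: int


# Hypothetical mapping from raw field names to schema field names.
FIELD_MAP = {
    "user": "author",
    "body": "text",
    "timestamp": "posted_at",
    "like_count": "likes",
}


def map_to_schema(raw: dict) -> SocialPost:
    """Rename known fields, drop unknown ones, and coerce each value's type."""
    renamed = {FIELD_MAP[key]: value for key, value in raw.items() if key in FIELD_MAP}
    return SocialPost(
        author=str(renamed["author"]),
        text=str(renamed["text"]).strip(),
        # The raw timestamp is assumed to be seconds since the Unix epoch.
        posted_at=datetime.fromtimestamp(int(renamed["posted_at"]), tz=timezone.utc),
        likes=int(renamed.get("likes", 0)),
    )


raw_post = {
    "user": "sam",
    "body": "  Love these shoes!  ",
    "timestamp": "1700000000",
    "extra": "ignored",
}

post = map_to_schema(raw_post)
```

Fields missing from `FIELD_MAP` are dropped silently here; a stricter mapper could log or reject them instead.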

Data Cleaning and Pre-processing Techniques

Data cleaning and preprocessing are essential steps to improve data quality and consistency. These steps address issues like missing values, inconsistent formats, and errors in the data.

- Handling Missing Values: Strategies for handling missing values include imputation (filling in missing values with estimated values), deletion (removing rows or columns with missing values), or using specific techniques for handling missing data. Missing data in customer surveys could be handled by imputation with the average value or by removing the incomplete surveys.

- Format Standardization: Ensuring data consistency involves converting different formats into a standard format. This might include converting dates, currencies, or other formats into a unified structure. Inconsistent pricing formats from different websites need to be standardized to facilitate comparison.

- Data Deduplication: Removing duplicate entries from the dataset prevents errors and improves efficiency. Deduplication techniques include comparing data fields and removing identical entries. Deduplicating customer profiles to avoid double counting in marketing analysis is essential.
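The three cleaning techniques above can be sketched with the standard library. The accepted date formats, the review fields, and the choice of mean imputation are assumptions made for illustration; the month-name format also assumes an English locale.

```python
from datetime import datetime
from statistics import mean

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")  # assumed input formats


def standardize_date(value: str) -> str:
    """Convert a date in any assumed format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")


def deduplicate(rows: list, key_fields: tuple) -> list:
    """Keep the first row for each unique combination of key fields."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row[f] for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def impute_mean(rows: list, field: str) -> list:
    """Replace None in `field` with the mean of the known values.

    Raises statistics.StatisticsError if no value is known.
    """
    fill = mean(row[field] for row in rows if row[field] is not None)
    return [{**row, field: fill if row[field] is None else row[field]} for row in rows]


reviews = [
    {"customer": "a@example.com", "date": "2024-03-05", "rating": 4},
    {"customer": "b@example.com", "date": "05/03/2024", "rating": None},
    {"customer": "a@example.com", "date": "March 5, 2024", "rating": 4},
    {"customer": "c@example.com", "date": "2024-03-06", "rating": 2},
]

cleaned = [{**r, "date": standardize_date(r["date"])} for r in reviews]
cleaned = deduplicate(cleaned, ("customer", "date"))
cleaned = impute_mean(cleaned, "rating")
```

Dates are standardized first so that the same review written in two formats is recognized as a duplicate, and deduplication runs before imputation so repeated rows do not skew the mean.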

Managing and Storing Extracted Content

Effective management of extracted content involves strategies for long-term storage, retrieval, and access. This ensures that the data remains usable for future analysis and reporting.

- Version Control: Keeping track of changes to the extracted content over time is important. Version control systems can record changes and allow for rollback to previous versions if needed. Version control helps in maintaining a history of the marketing content extraction process.

- Data Backup and Recovery: Creating regular backups of the data ensures that the information is protected from loss due to technical issues or other problems. Data backup and recovery are essential for ensuring data integrity.

- Data Access Control: Controlling access to the extracted content ensures that only authorized users can view or modify the data. This helps in maintaining data security and preventing unauthorized access to sensitive information. Access control is vital for sensitive marketing data.

Outcome Summary

In conclusion, Google Automatic Marketing Content Extraction provides a valuable tool for modern marketing. By understanding the extraction methods, potential applications, and associated challenges, businesses can leverage this technology to enhance their content creation, personalization efforts, and market research strategies. This comprehensive approach emphasizes the importance of responsible data handling and ethical considerations, ensuring that the extracted content is used effectively and legally.