Google on fixing “Discovered - currently not indexed”. Understanding why your website’s pages aren’t showing up in Google search results is crucial. This comprehensive guide examines the reasons behind “currently not indexed” warnings in Google Search Console and provides actionable steps to troubleshoot and resolve the issue, from identifying the problem to implementing effective solutions.

This in-depth exploration covers everything from common technical errors to content optimization strategies, empowering you to reclaim your website’s visibility. We’ll guide you through the process of diagnosing, fixing, and preventing future indexing problems, ultimately maximizing your website’s reach on Google.

Understanding the Issue

Search Console reports two related statuses. “Discovered - currently not indexed” means Google knows the URL exists but has not crawled it yet, typically because crawling it was expected to overload the site and was rescheduled. “Crawled - currently not indexed” means Google fetched the page but chose not to add it to its index. Either way, the page is not in Google’s database of searchable content, so it cannot appear in search results, which limits its visibility and organic traffic. Understanding the reason behind the status is the first step toward fixing it, because indexing is fundamental to a website’s online presence.

Google’s index acts as a vast library containing hundreds of billions of webpages. When a page is indexed, it is added to this library, so Google can interpret its content and rank it for relevant user queries. A page that is not indexed cannot be returned in Google search results at all.

Potential Reasons for Non-Indexing

Several factors can contribute to a webpage being marked “currently not indexed.” Technical issues are a frequent cause, including server errors, crawling problems, and broken links. Content quality, while not always a direct cause, also influences indexing: a lack of relevant keywords, duplicate content, or a poor user experience can make a page look less valuable to Google.

Changes in Google’s algorithms can also temporarily impact indexing, as Google constantly refines its methods for determining which pages are most valuable to users.

Technical Issues Affecting Indexing

Technical problems often lie at the heart of indexing issues. Server downtime or misconfigurations can prevent Google’s crawlers from accessing the page. Slow loading times or complex page structures can also hinder crawling. Broken internal links lead crawlers to dead ends, which can keep them from discovering and indexing every page on the site.

Content Quality and Indexing

Content quality plays a vital role in indexing. If a webpage lacks relevant keywords or offers thin, low-quality content, Google may not consider it valuable. Duplicate content, where substantial portions of the page’s text are identical to other webpages, can lead to non-indexing. A poor user experience, including a confusing layout, difficult navigation, or slow loading speed, can also signal to Google that the page is not suitable for users.
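Near-duplicate text can be measured on your own site before Google has to decide which version to keep. The sketch below uses one common technique, Jaccard similarity over three-word shingles; it is an illustration, not a description of how Google detects duplicates, and the sample pages and 0.8 threshold are hypothetical.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set:
    """Lowercase the text, split it into words, and return its `size`-word shingles."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict, threshold: float = 0.8) -> list:
    """Return (url_a, url_b, score) for page pairs at or above the (hypothetical) threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for url_a, url_b in combinations(sorted(sets), 2):
        score = jaccard(sets[url_a], sets[url_b])
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return pairs


if __name__ == "__main__":
    # Hypothetical page texts keyed by URL path.
    pages = {
        "/red-shoes": "Our red running shoes are light, durable and built for long distances.",
        "/red-shoes?sort=price": "Our red running shoes are light, durable and built for long distances.",
        "/about": "We are a small team that has been making footwear since 2010.",
    }
    print(near_duplicates(pages))  # the two /red-shoes URLs score 1.0
```

Pairs flagged this way are candidates for consolidation or a canonical tag rather than proof that Google treats them as duplicates.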

Google Algorithm Updates and Their Impact

Google’s search algorithms are constantly evolving, and updates can sometimes impact a website’s indexing. Changes in how Google interprets signals like backlinks, user engagement, and mobile-friendliness can lead to fluctuations in search visibility. While these updates aim to improve search results for users, they can occasionally result in temporary indexing issues for some websites. Keeping abreast of these updates and adjusting your website’s approach accordingly is important.

Table of Indexing Issues

| Category | Reason | Example | Impact |
|---|---|---|---|
| Technical Errors | Server errors, broken links, slow loading times | A website experiencing frequent server outages | Page not accessible to crawlers, low visibility |
| Content Quality | Lack of relevant keywords, thin content, duplicate content | A webpage with little unique content | Low ranking in search results |
| Google Algorithm Updates | Changes in how Google evaluates pages | Updates focusing on mobile-friendliness | Temporary drops in search visibility |

Identifying the Problem

Pinpointing why a webpage isn’t showing up in search results requires a systematic approach. Confirming that a page is not indexed is only the start; you also need to understand why it is not being indexed. That means investigating the possible causes and separating genuine indexing issues from other website problems.

Potential Indicators of Non-Indexing

Understanding the symptoms is crucial for diagnosing the problem. Various indicators signal that a page might not be indexed.

- Missing from search results: The most obvious sign. If a page you expect to be visible isn’t appearing in Google search results for relevant queries, it’s a clear indication of a potential indexing issue.

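- Zero impressions in Google Search Console: If a page receives zero impressions in the Performance report, it isn’t being shown in search results. That can mean it is not indexed, or that it is indexed but doesn’t rank for any query people search; the URL Inspection tool tells you which.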

- Low or no click-through rate (CTR) from search results: A page that appears in search results but earns very few clicks is already indexed. The problem is more likely its title or meta description, so treat it as a snippet issue rather than an indexing one.

- Crawling errors in Google Search Console: Errors during the crawling process can prevent Google from indexing the page. These errors are typically reported in Google Search Console.

- Changes to the site structure or content: Major changes to the site’s structure or significant alterations to content can temporarily disrupt indexing. For example, if you migrate a site to a new domain or drastically change its structure, the number of indexed pages may fluctuate while Google recrawls and reprocesses the URLs.

Identifying Specific Non-Indexed Pages

There are several ways to identify pages that aren’t being indexed.

- Google Search Console: Use the “URL Inspection” tool in Google Search Console. Entering a URL allows you to check its indexing status. This tool can reveal whether the page is indexed, not indexed, or being processed. It can also reveal crawl errors, providing clues about why a page isn’t being indexed.

- Sitemaps: Review the sitemap to ensure that the pages you expect to be indexed are listed there. If a page is missing from the sitemap, Google may still find it through links, but it is likely to be discovered more slowly. Regular updates to the sitemap are important to reflect any changes to the site’s structure or content.

- Indexing Tools: Specialized indexing tools can help pinpoint non-indexed pages and provide detailed reports on indexing status. These tools can often be integrated with Google Search Console.

- Manual Search: Search Google for the specific page, for example with the site: operator followed by the page’s URL. If it doesn’t appear, there’s a good chance it’s not indexed. This is a quick check, but it is less reliable and far less detailed than the URL Inspection tool.
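The sitemap check in this list can be automated by comparing the URLs a sitemap lists with the pages you expect Google to index. A minimal sketch using Python’s standard library; the sitemap content and the expected URL list are hypothetical placeholders for your own data.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set:
    """Return every <loc> value listed in a sitemap <urlset>."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def missing_from_sitemap(sitemap_xml: str, expected: list) -> list:
    """Return the expected URLs that the sitemap does not list, in input order."""
    listed = sitemap_urls(sitemap_xml)
    return [url for url in expected if url not in listed]


# Hypothetical sitemap and list of pages you expect to be indexed.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/blog/post-1</loc></url>
</urlset>"""

EXPECTED = [
    "https://www.example.com/",
    "https://www.example.com/blog/post-1",
    "https://www.example.com/blog/post-2",
]

if __name__ == "__main__":
    print(missing_from_sitemap(SITEMAP, EXPECTED))  # ['https://www.example.com/blog/post-2']
```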

Differentiating Indexing Issues from Other Website Problems

Distinguishing indexing problems from other website issues is critical.

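- HTTP Errors (e.g., 404, 500): These errors stop Google from retrieving the page. They are a cause of indexing problems rather than the problem itself, which is whether the page is in Google’s index. Note that 404 is a client error (the page doesn’t exist), while 500 is a server error.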

- Content Issues: Poorly written content or a lack of relevant keywords can hurt a page’s visibility, but this isn’t an indexing problem in itself. Content quality issues should be addressed separately.

- Technical Issues: Problems with the website’s architecture, such as broken links or slow loading speeds, can hinder Google’s ability to crawl and index. These issues should be resolved separately from indexing problems.

Distinguishing Indexing Problems from Crawl Errors

Crawl errors can be a significant factor affecting indexing.

- Crawl errors versus indexing issues: Crawl errors prevent Google from accessing the page, while indexing issues relate to the page’s presence in the index. Addressing crawl errors is essential for ensuring the page is even considered for indexing.

- Identifying Crawl Errors: Google Search Console provides detailed reports on crawl errors, helping you pinpoint the specific reasons why Google can’t access the page. Common errors include 404 Not Found, 500 Internal Server Error, and robots.txt issues.

Comparison of HTTP Status Codes

Understanding different HTTP status codes is vital for troubleshooting.

| Status Code | Description | Impact on Indexing |
|---|---|---|
| 404 Not Found | The requested page doesn’t exist. | The page can’t be indexed; if it was indexed before, it is removed from the index. |
| 500 Internal Server Error | A server-side error occurred. | Googlebot temporarily slows its crawling; if the error persists, the URL can eventually be dropped from the index. |
| 429 Too Many Requests | The server is rate-limiting requests. | Googlebot slows down, which can delay crawling and indexing. |
| 503 Service Unavailable | The server is temporarily unavailable. | Treated as temporary: Google retries later, but a prolonged outage can lead to URLs being dropped. |
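The table can be turned into a small triage helper for a crawl export or log summary. The function name, categories, and suggested actions are illustrative: they paraphrase the table and the redirect advice in this guide and are not part of any Google tool.

```python
def triage(status: int) -> str:
    """Map an HTTP status code to a rough, indexing-focused next step (illustrative)."""
    if 200 <= status < 300:
        return "ok: the page can be crawled and considered for indexing"
    if status in (301, 308):
        return "permanent redirect: make sure it points straight to the final URL"
    if status in (302, 303, 307):
        return "temporary redirect: switch to 301/308 if the move is permanent"
    if status in (404, 410):
        return "gone: restore the page, redirect it, or remove links to it"
    if status == 429:
        return "rate limited: Googlebot will slow down; check server capacity"
    if 500 <= status < 600:
        return "server error: check the logs; persistent errors can drop URLs from the index"
    return "review manually"


if __name__ == "__main__":
    for code in (200, 301, 404, 429, 503):
        print(code, "->", triage(code))
```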

Troubleshooting Strategies

Once you’ve identified potential indexing issues and their root cause, it’s time to troubleshoot. This means systematically checking your website’s structure and configuration so Google can properly crawl and index your content. A well-defined process shortens the time needed to resolve indexing problems and get your content back into Google’s index. Addressing technical issues that affect indexing also calls for a proactive approach.

This includes regular checks for configuration errors and continuous monitoring for changes that might disrupt indexing. The more thorough your investigation and the more precise your fixes, the more effective your troubleshooting will be.

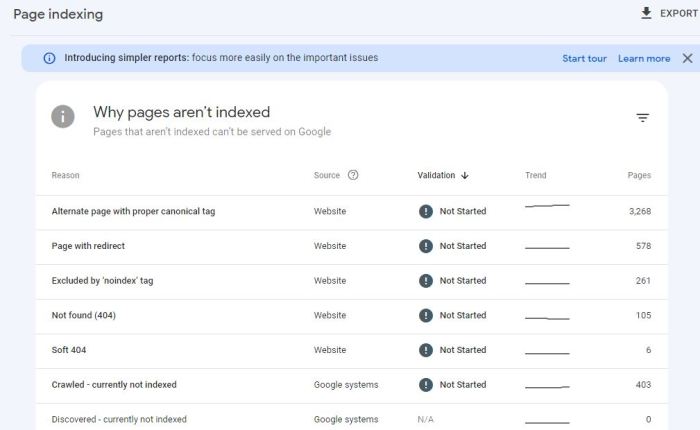

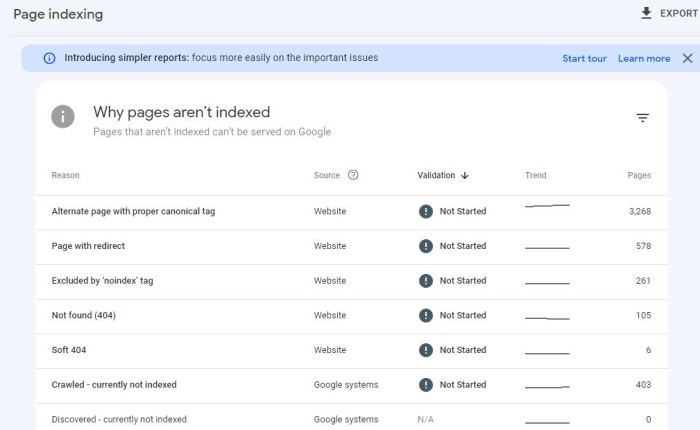

Checking Page Submission to Google Search Console

Make sure your site is verified as a property in Google Search Console and that your XML sitemap is submitted there, so Google knows which pages you want indexed. The Page indexing report (formerly Index Coverage) lists the pages that are not indexed along with a reason for each, such as “Discovered - currently not indexed,” “Crawled - currently not indexed,” “Not found (404),” or “Blocked by robots.txt.” This report is the best starting point for understanding your website’s indexation.

Identifying and Resolving Technical Issues Impacting Indexing

Technical issues can significantly hinder indexing. These problems range from server errors to issues with robots.txt files or XML sitemaps. Careful analysis of your website’s technical infrastructure is essential for finding and fixing these issues. Identifying these issues early is crucial to preventing further indexing problems.

Debugging Robots.txt Files

The robots.txt file dictates which parts of your website Googlebot and other search engine crawlers are allowed to access. Incorrect configurations can prevent Google from crawling essential pages. A thorough review of the robots.txt file is essential to ensure it’s correctly configured.

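- Verify that the file is served from the root of your host (e.g., yourwebsite.com/robots.txt); crawlers ignore a robots.txt file placed anywhere else. If the file returns a 404, Google assumes there are no crawl restrictions, but if fetching it returns a server error, Google may temporarily stop crawling the site.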

- Carefully review the directives within the robots.txt file. Ensure that essential pages and directories are not blocked; a single Disallow: / under User-agent: * blocks the entire site. Common errors include incorrect syntax or missing directives.

- Test the robots.txt file with a testing tool, such as the robots.txt report in Search Console, which shows the version of the file Google fetched and any errors or warnings. Such tools help you see how Googlebot interprets your rules.

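- Remember that robots.txt controls crawling, not indexing. To keep a page out of the index, use a noindex meta tag or X-Robots-Tag HTTP header and leave the page crawlable so Google can see that directive; to highlight the pages that matter, list them in your sitemap.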
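A first-pass check of which URLs a robots.txt file blocks can be scripted with Python’s built-in urllib.robotparser. Assumptions: the rules and URLs are hypothetical, and this parser applies the first matching rule without Google’s longest-match precedence or wildcard support, so confirm edge cases with Search Console’s robots.txt report.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules; replace with the contents of your own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def blocked_urls(robots_txt: str, urls: list, agent: str = "Googlebot") -> list:
    """Return the URLs that the robots.txt rules disallow for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    pages = [
        "https://www.example.com/",
        "https://www.example.com/blog/post-1",
        "https://www.example.com/admin/login",
    ]
    print(blocked_urls(ROBOTS_TXT, pages))  # ['https://www.example.com/admin/login']
```

Running this against every URL in your sitemap is a quick way to catch a rule that accidentally blocks pages you want indexed.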

Ensuring Proper XML Sitemaps Submission and Maintenance

XML sitemaps provide a structured list of your website’s pages, helping search engine crawlers understand your website’s architecture. Maintaining up-to-date sitemaps is crucial for effective indexing.

- Ensure your XML sitemap is correctly structured and contains essential URLs. Verify that all important pages are included and that the structure conforms to the XML sitemap protocol.

- Submit your sitemap to Google Search Console. This is a crucial step to inform Google about your website’s structure.

- Regularly update your XML sitemap to reflect any changes to your website. This is important to avoid indexing errors, especially when you add new pages or modify existing ones.

- Monitor the sitemap in Search Console. The Sitemaps report shows whether Google could read the file and how many URLs it discovered, and you can filter the Page indexing report by sitemap to see which of those URLs are indexed.
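Generating the sitemap from your page inventory makes it easy to regenerate whenever pages change. A minimal sketch following the sitemaps.org protocol; the page list and lastmod dates are hypothetical and would normally come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict) -> str:
    """Build sitemap XML from {absolute URL: last-modified date as YYYY-MM-DD}."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in sorted(pages.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # characters such as & are escaped automatically
        ET.SubElement(entry, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical page inventory.
    print(build_sitemap({
        "https://www.example.com/": "2024-05-01",
        "https://www.example.com/blog/post-1": "2024-04-18",
    }))
```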

Potential Technical Issues Preventing Indexing and Solutions

The following table outlines common technical issues that can prevent indexing and their corresponding solutions.

| Technical Issue | Solution |
|---|---|
| Incorrect robots.txt configuration | Review and update the robots.txt file to allow access to essential pages. Use testing tools to identify issues. |
| Broken or missing links | Identify and fix broken internal and external links using tools like Screaming Frog. |
| HTTP errors (e.g., 404, 500) | Restore or redirect missing pages, and resolve server-side issues so pages are delivered reliably. |
| Issues with redirects | Ensure proper redirect configurations are in place. Use tools to verify redirects and remove chains and loops. |
| Incorrect file permissions | Adjust file permissions to allow Googlebot access to necessary files. |
| Issues with canonicalization | Correctly implement canonical tags to prevent duplicate content issues. |
| Problems with JavaScript or CSS | Make sure JavaScript and CSS files are not blocked by robots.txt and that pages render their main content for Googlebot. |

Monitoring and Evaluation

Once you’ve identified and addressed indexing issues, the next step is to monitor whether your fixes worked. Continuous tracking keeps your website discoverable by Google and catches new changes or issues early, which protects your organic traffic and stops small problems from escalating. Regular monitoring means checking the status of your pages in Google Search Console and analyzing the impact of the changes you made.

By consistently evaluating your website’s performance in the search results, you can identify areas for improvement and refine your strategies for optimal indexing.

Monitoring Indexing Status of Specific Pages

Regularly checking the indexing status of specific pages in Google Search Console is vital. It lets you spot pages that aren’t being indexed, or that have been dropped from the index, soon after it happens. The Page indexing report shows the indexing status of your site as a whole, and the URL Inspection tool shows the status of an individual URL.

Significance of Regular Google Search Console Checks

Consistent monitoring of Google Search Console is critical for identifying and resolving indexing issues promptly. Problems like crawling errors, blocked resources, or incorrect robots.txt directives can all be detected and addressed swiftly through regular checks. Proactive monitoring allows for timely intervention, minimizing the negative impact on your website’s visibility.

Strategies for Tracking the Impact of Implemented Changes

Tracking the impact of changes on indexing involves careful analysis of data in Google Search Console. Compare data before and after implementing changes to assess the effectiveness of your strategies. For example, if you updated your sitemap, compare the indexing rate of new pages to the previous indexing rate. Analyze changes in crawl rates, indexed pages, and other relevant metrics to gauge the impact.
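One way to make the before/after comparison concrete is to export page-level indexing statuses at two points in time and diff them. The CSV layout here (a URL column and a Status column) and the sample rows are assumptions, not the exact format Search Console exports, so adjust the column names to match your file.

```python
import csv
import io
from collections import Counter

# Hypothetical exports taken before and after a fix.
BEFORE = """URL,Status
https://www.example.com/a,Discovered - currently not indexed
https://www.example.com/b,Indexed
"""
AFTER = """URL,Status
https://www.example.com/a,Indexed
https://www.example.com/b,Indexed
https://www.example.com/c,Discovered - currently not indexed
"""


def load_statuses(csv_text: str) -> dict:
    """Read a {URL: status} mapping from CSV text with 'URL' and 'Status' columns."""
    return {row["URL"]: row["Status"] for row in csv.DictReader(io.StringIO(csv_text))}


def compare(before: dict, after: dict) -> dict:
    """Count statuses in each snapshot and list URLs whose status changed."""
    changed = {
        url: (before.get(url, "absent"), after.get(url, "absent"))
        for url in sorted(before.keys() | after.keys())
        if before.get(url) != after.get(url)
    }
    return {"before": Counter(before.values()), "after": Counter(after.values()), "changed": changed}


if __name__ == "__main__":
    report = compare(load_statuses(BEFORE), load_statuses(AFTER))
    print(report["after"])
    for url, (old, new) in report["changed"].items():
        print(f"{url}: {old} -> {new}")
```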

Interpreting Data from Google Search Console Regarding Indexing

Data from Google Search Console provides valuable insights into indexing performance. For example, a sharp rise in URLs reported as “Server error (5xx)” or “Not found (404)” in the Page indexing report points to a technical problem on your site, such as broken links or server instability. A decline in indexed pages might suggest a problem with your sitemap or other indexing signals. Analyzing the specific nature of the errors or declines helps you pinpoint the root cause and implement appropriate solutions.

Key Metrics from Search Console Relevant to Indexing

Understanding the metrics relevant to indexing is crucial for effective monitoring. The following table highlights key metrics found in Google Search Console that offer insights into your website’s indexing performance.

| Metric | Description | Relevance to Indexing |
|---|---|---|
| Crawl Rate | How often Googlebot requests pages from your site, shown in the Crawl stats report. | A steady crawl rate suggests Googlebot can access your site effectively. A falling crawl rate can point to slow server responses or server errors. |
| Indexed Pages | The number of pages Google has indexed, shown in the Page indexing report. | A decrease in indexed pages could indicate a problem with sitemaps, changes in robots.txt, or other indexing issues. |
| Crawl Errors | Errors Googlebot encountered while crawling, listed as reasons in the Page indexing report. | A rising count often signals technical issues that need fixing, such as 404 errors, server errors, or blocked resources. |
| Sitemap Coverage | The share of URLs in your submitted sitemaps that Google has indexed. | Low coverage can indicate problems with the sitemap file or with the pages it lists. |
| URL Inspection (formerly Fetch as Google) | Shows how Googlebot sees a specific page, including a live test. | Useful for diagnosing indexing problems on individual pages, with details about crawling and rendering issues. |

Addressing Specific Errors

Troubleshooting indexing issues often means digging into the specifics of crawl errors, server responses, and content quality. This stage requires close attention to detail and a systematic approach to finding and fixing root causes, and doing so can significantly improve a site’s visibility in search results. The errors you encounter fall into several groups, each requiring its own troubleshooting strategy.

Addressing them properly keeps your website healthy and discoverable. Covering both technical aspects, such as server responses, and content quality, such as duplicate content, helps search engine crawlers spend their time on the pages that matter.

Common Crawl Errors and Their Solutions

Crawl errors reveal the technical problems that stop search engine crawlers from accessing and processing a website’s content. Understanding these errors and their solutions is essential for effective troubleshooting. The most common ones fall into the following groups.

- HTTP Error 404 (Not Found): The requested page or resource does not exist on the server. Check the URL structure for typos and make sure the page or file was uploaded to the correct directory. If the content has moved, add a 301 redirect to the new URL; if it was removed for good, a 404 or 410 response is appropriate.

- HTTP Error 5xx (Server Errors): These errors indicate issues with the server’s response to a crawl request. Common examples include 500 Internal Server Error, 502 Bad Gateway, and 504 Gateway Timeout. Addressing these errors often requires investigating server logs for detailed information about the cause of the error and taking appropriate measures to resolve the underlying server-side problems. This may include checking server resources, optimizing code, and resolving database issues.

- Robots.txt Issues: The robots.txt file dictates which parts of your site crawlers are allowed to access. Incorrectly configured or incomplete robots.txt files can prevent crawlers from accessing crucial parts of your site. Verify that the robots.txt file is properly formatted and allows access to all pages that should be indexed.
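The server-log investigation mentioned for 5xx errors can start with a count of the status codes served to Googlebot. This sketch assumes the Apache/Nginx “combined” log format, and the sample lines are invented. Because anyone can send a Googlebot user-agent string, verify real Googlebot traffic (Google recommends a reverse DNS lookup) before acting on the numbers.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption; adjust to your server's format).
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Invented sample lines using documentation IP addresses.
SAMPLE_LOG = [
    f'192.0.2.10 - - [10/Mar/2024:13:55:36 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [10/Mar/2024:13:56:02 +0000] "GET /shop/item-7 HTTP/1.1" 503 0 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [10/Mar/2024:13:57:10 +0000] "GET /shop/item-7 HTTP/1.1" 200 8800 "-" "Mozilla/5.0"',
]


def googlebot_errors(lines):
    """Count status codes served to Googlebot and the paths that returned 5xx."""
    statuses, server_errors = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # unparseable line, or not a (claimed) Googlebot request
        status = int(match["status"])
        statuses[status] += 1
        if 500 <= status < 600:
            server_errors[match["path"]] += 1
    return statuses, server_errors


if __name__ == "__main__":
    statuses, errors = googlebot_errors(SAMPLE_LOG)
    print(dict(statuses), dict(errors))
```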

Significance of Handling Server-Side Errors for Indexing

Server-side errors have a direct impact on indexing because they stop search engine crawlers from retrieving your content. When Googlebot keeps receiving 5xx responses, it slows its crawling, and if the errors persist, the affected URLs can be dropped from the index. By contrast, 404 errors for pages that genuinely no longer exist are normal and don’t harm the rest of the site, but 404s on pages that should exist keep those pages out of the index.

Fixing HTTP Errors Impacting Indexing

HTTP errors, especially 404s and 5xx errors, are critical to address for successful indexing. These errors prevent search engine crawlers from accessing or processing your content. Fixing these errors requires a multi-faceted approach, often involving analyzing server logs to pinpoint the source of the problem.

- Fixing Broken Links: Identify and fix broken links across your website, ensuring all internal and external links are valid and functional.

- Implementing Appropriate Redirects: If a page has been moved, implement 301 redirects to maintain the link equity and ensure that search engines are aware of the new location.

- Optimizing Server Performance: Optimize your server resources and configurations to prevent timeouts and other server-side issues that lead to HTTP errors.
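Redirect problems are easier to spot when you treat redirects as a map from old URL to new URL. The sketch follows each entry to its destination and flags chains (more than one hop) and loops, both worth collapsing into a single 301. The redirect map is hypothetical; in practice you would build it from your server configuration or a crawler export.

```python
# Hypothetical redirect map: old path -> path it redirects to.
REDIRECTS = {
    "/old-page": "/newer-page",
    "/newer-page": "/final-page",
    "/moved": "/final-page",
    "/a": "/b",
    "/b": "/a",
}


def resolve(redirects: dict, start: str, max_hops: int = 10) -> tuple:
    """Follow redirects from `start`; return (final URL, hops taken, loop found)."""
    seen = {start}
    current, hops = start, 0
    while current in redirects and hops < max_hops:
        current = redirects[current]
        hops += 1
        if current in seen:
            return current, hops, True
        seen.add(current)
    return current, hops, False  # also reached if max_hops stops a very long chain


def audit(redirects: dict) -> dict:
    """Describe every redirect source that is part of a chain or a loop."""
    problems = {}
    for source in sorted(redirects):
        final, hops, loop = resolve(redirects, source)
        if loop:
            problems[source] = "redirect loop"
        elif hops > 1:
            problems[source] = f"chain of {hops} hops; redirect straight to {final}"
    return problems


if __name__ == "__main__":
    for source, problem in audit(REDIRECTS).items():
        print(source, "->", problem)
```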

Duplicate Content Solutions

Duplicate content negatively impacts indexing as search engines struggle to determine which version of the content to prioritize. Ensuring unique content across your site is essential for search engine optimization.

- Canonicalization: Use the `<link rel="canonical">` tag to tell search engines which URL is the preferred version of a page, especially when the same content is reachable at several URLs.

- Content Differentiation: Ensure that content is unique and not simply copied from other sources. Originality and unique perspectives are essential for attracting and maintaining user engagement.

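- Handling Dynamic Content: Give each distinct piece of content one stable URL. When parameters such as sorting options, tracking codes, or session IDs create extra URLs for the same content, point those variants to the preferred URL with a canonical tag.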
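To confirm which canonical URL a page declares, and whether it carries a noindex directive, you can read both from the HTML. A minimal sketch using Python’s built-in html.parser; it only sees tags in the raw markup, so directives sent as HTTP headers (such as X-Robots-Tag) or injected by JavaScript are not covered, and the sample page is hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional


class HeadSignals(HTMLParser):
    """Collect the rel=canonical URL and robots meta directives from HTML markup."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None
        self.robots: List[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; valueless attributes give None.
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href")
        elif tag == "meta" and attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.robots += [d.strip().lower() for d in attributes.get("content", "").split(",")]


def inspect_html(html: str) -> dict:
    """Return the declared canonical URL and whether a noindex directive is present."""
    parser = HeadSignals()
    parser.feed(html)
    return {"canonical": parser.canonical, "noindex": "noindex" in parser.robots}


if __name__ == "__main__":
    # Hypothetical parameter variant that points at its preferred URL.
    page = (
        '<html><head>'
        '<link rel="canonical" href="https://www.example.com/red-shoes">'
        '<meta name="robots" content="index, follow">'
        '</head><body>...</body></html>'
    )
    print(inspect_html(page))
```

A page that is both canonicalized elsewhere and marked noindex sends mixed signals, so it is worth checking both values together.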

Resolving Mobile-Friendliness and Indexing

Mobile-friendliness is crucial for indexing, as Google prioritizes mobile-friendly sites. Ensuring your website is responsive and displays correctly across various devices is essential for optimal indexing.

- Responsive Design: Implement responsive web design to ensure your website adapts to different screen sizes and devices.

- Mobile-First Indexing: Google predominantly uses the mobile version of your content for indexing and ranking, so make sure the mobile site is functional, error-free, and carries the same main content as the desktop version.

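- Mobile Sitemaps: A separate mobile sitemap is generally unnecessary for responsive sites; the standard sitemap covers them. If you serve a separate mobile site (e.g., m.example.com), annotate each desktop page with rel="alternate" pointing to its mobile version and each mobile page with rel="canonical" pointing back.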
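A quick sanity check for responsive pages is the viewport meta tag, without which mobile browsers lay the page out at desktop width. This sketch only confirms that the tag sets width=device-width; it does not replace testing the rendered page on real devices, and the sample markup is hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of <meta name="viewport"> if the page declares one."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() == "viewport":
            self.viewport = attributes.get("content", "")


def has_responsive_viewport(html: str) -> bool:
    """True if the viewport meta tag sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    settings = [part.replace(" ", "").lower() for part in finder.viewport.split(",")]
    return "width=device-width" in settings


if __name__ == "__main__":
    page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(has_responsive_viewport(page))
```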

Preventing Future Issues

Ensuring your website remains discoverable by search engines requires proactive, ongoing maintenance, not just a one-time optimization. Skipping these preventative steps can lead to recurring indexing problems that waste time and resources.

Regular maintenance and proactive strategies are vital for maintaining good indexing and avoiding future problems. This includes keeping your content fresh, your website structure clean, and your security protocols up-to-date.

Maintaining an Easily Indexable Website

Maintaining a website’s ease of indexing requires a multi-faceted approach. This includes ensuring the website is technically sound and accessible to search engine crawlers.

- Regular Content Updates: Fresh, updated content signals to search engines that your website is active and relevant. This keeps search engines interested in your site and encourages more frequent crawling. Regularly updating blog posts, news articles, product descriptions, or other content demonstrates your site’s relevance and engagement.

- Optimized Website Structure: A well-organized website structure helps search engine crawlers navigate your site effectively. Use clear hierarchies, descriptive URLs, and internal linking to guide crawlers through your content. A logical and intuitive sitemap will improve crawling and indexing efficiency.

- Clean and Well-Maintained Code: Clean, well-structured code makes it easier for search engine crawlers to understand and index your website’s content. Avoid excessive use of unnecessary tags, scripts, or plugins, which can confuse or slow down crawlers. Employing a clean, organized code structure improves indexing.

Importance of Regular Content Updates

Regular content updates are vital for maintaining a website’s presence in search engine results. Search engines favor websites that are actively maintained and updated.

- Relevance and Freshness: Updating content with new information ensures your site remains relevant to search queries. This signals to search engines that your website is active and provides current information. A website that doesn’t update can appear outdated and lose its ranking.

- Improved User Experience: Fresh content often leads to a better user experience. Users are more likely to find relevant and updated information on your site, improving engagement and dwell time. This ultimately contributes to a positive user experience.

- Search Engine Crawling: Search engine crawlers tend to revisit frequently updated sites more often, which helps them discover new or changed content sooner and can speed up indexing.

Optimizing Website Structure and Code for Indexing

Optimizing your website’s structure and code for indexing directly impacts search engine discoverability.

- Logical Hierarchy: Use a logical and well-defined hierarchy to organize your content. This helps search engine crawlers understand the relationship between different pages on your site. Clear categorization improves indexing and user experience.

- Descriptive URLs: Use descriptive, keyword-rich URLs to help search engines understand the content of each page. Descriptive URLs make it easier for search engines to categorize and index pages.

- Internal Linking: Internal links help crawlers discover different parts of your website. Use relevant anchor text to guide crawlers through your content. This aids in indexing by connecting related pages.
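Internal linking can be checked as a graph problem: a breadth-first search from the homepage gives each page’s click depth, and pages that no path reaches are orphans that crawlers can only find through a sitemap or external links. The link map and page inventory below are hypothetical; a crawler export would supply the real ones.

```python
from collections import deque

# Hypothetical internal link map: page -> pages it links to.
LINKS = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/shop": [],
}
# Every page that exists on the site, e.g. from a CMS export (hypothetical).
ALL_PAGES = {"/", "/blog", "/shop", "/blog/post-1", "/blog/post-2", "/landing-page"}


def click_depths(links: dict, home: str = "/") -> dict:
    """Breadth-first search from the homepage; returns {page: clicks needed from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict, all_pages: set, home: str = "/") -> set:
    """Pages that exist but cannot be reached by following internal links from home."""
    return all_pages - set(click_depths(links, home))


if __name__ == "__main__":
    print(click_depths(LINKS))
    print(orphan_pages(LINKS, ALL_PAGES))  # {'/landing-page'}
```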

Importance of a Secure Website

A secure website is essential for both user trust and search engine indexing.

- Trust and Credibility: A secure website with an SSL certificate builds user trust. Users are more likely to interact with and engage with a site that prioritizes security. This directly influences the user experience and engagement.

- Google’s Ranking Factors: HTTPS is a confirmed but lightweight ranking signal in Google Search. Securing your site supports rankings, but it is no substitute for relevant, high-quality content.

- Crawling and Indexing: Google does index HTTP pages, but when both HTTP and HTTPS versions of a page exist, it generally prefers the HTTPS version as the canonical one. Redirect HTTP to HTTPS and keep internal links on HTTPS so signals are consolidated on a single version.
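After a move to HTTPS, leftover http:// internal links are easy to overlook. A small sketch that flags links to your own host (with or without www.) that still use plain HTTP; the host name and link list are hypothetical. Relative links are skipped because they inherit the scheme of the page they appear on.

```python
from urllib.parse import urlsplit


def bare_host(hostname: str) -> str:
    """Lowercase a host name and drop a leading 'www.' so both variants compare equal."""
    hostname = hostname.lower()
    return hostname[4:] if hostname.startswith("www.") else hostname


def insecure_internal_links(links: list, host: str) -> list:
    """Return absolute links to `host` that still use plain http://."""
    site = bare_host(host)
    flagged = []
    for link in links:
        parts = urlsplit(link)
        # Relative links have no scheme and inherit the page's, so they are skipped.
        if parts.scheme == "http" and bare_host(parts.hostname or "") == site:
            flagged.append(link)
    return flagged


if __name__ == "__main__":
    # Hypothetical links collected from one page.
    links = [
        "http://www.example.com/old-post",
        "https://www.example.com/new-post",
        "/relative/path",
        "http://other-site.org/page",
    ]
    print(insecure_internal_links(links, "example.com"))  # ['http://www.example.com/old-post']
```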

Best Practices for Avoiding Future Indexing Problems

Implementing best practices helps prevent future indexing issues.

- Regular Site Audits: Conduct regular site audits to identify potential indexing problems early. This proactive approach helps in resolving issues before they impact discoverability. Regular audits help maintain website health.

- Regular Crawl Testing: Use tools to simulate search engine crawls and identify any structural or code issues. This can help anticipate and address potential problems. This allows for early detection of indexing issues.

- Implementing Proper Robots.txt: A well-defined robots.txt file tells compliant crawlers which parts of your site not to crawl, which helps focus crawling on the pages that matter. It does not prevent indexing or protect content: a blocked URL can still be indexed if other pages link to it, so use noindex to keep pages out of search results and authentication to protect private content.

Conclusion

In conclusion, fixing a “currently not indexed” issue requires a multifaceted approach. By meticulously checking technical aspects, optimizing content quality, and regularly monitoring your website’s performance in Google Search Console, you can effectively address indexing problems and give your pages the best chance of appearing in search results. Consistent effort in these areas will improve your website’s visibility and organic traffic.