Google has retired rel=next/prev as an indexing signal, a notable shift in how it handles pagination. The change could affect how sites with extensive paginated content are indexed and, potentially, how they rank. Understanding the implications matters for webmasters and SEO professionals alike.

This in-depth look explores the impact on search results, technical adjustments for webmasters, alternative indexing methods, and the future of web design and development. We’ll also examine SEO strategies and user experience considerations after the retirement.

Impact on Search Results: Google Retires Rel Next Prev Indexing

Google’s retirement of rel=next/prev as an indexing signal, announced in March 2019, marks a shift in how the search engine handles pagination on large websites. Google said at the time that it had not used the markup for indexing for several years; it relies instead on other signals, chiefly the ordinary links between pages, to understand a paginated series. This affects how Google interprets and indexes multi-page content, and therefore how that content appears in search results, for large and small sites alike.

Sites that spread content across many pages should therefore reevaluate how they present it, because that presentation now determines how visible their deeper pages are in search results.

Potential Changes in SERPs

The removal of rel=next/prev signals a potential shift in how Google displays search results, specifically for sites with numerous pages. The algorithm may rely more heavily on other signals to determine the importance and relevance of each page, potentially affecting the order of results.

Impact on Website Visibility

The retirement of rel=next/prev matters most for websites with extensive content, especially those that use pagination to organize information. Sites that counted on the markup, rather than on crawlable links, to tie a series together may find that deeper pages lose prominence in search results. Large e-commerce sites, news outlets with extensive archives, and blogs with numerous articles are particularly exposed, and they need strategies that keep every page in a series discoverable.

Alteration of Ranking Algorithms

Google’s ranking algorithms are complex and constantly evolving. The removal of rel=next/prev may influence these algorithms in several ways. Google might prioritize signals like site structure, content quality, and user engagement. Furthermore, the algorithm may place a greater emphasis on content that directly addresses the user’s search query, regardless of its position on the website. For example, an e-commerce site might find that product pages that are directly related to user searches gain prominence, while less-relevant pages are demoted.


Comparison with Other Indexing Techniques

Other techniques, such as sitemaps and structured data, become more important in guiding Google’s understanding of a website’s structure and content. Schema markup such as breadcrumbs can make the hierarchy of information explicit, helping the algorithm understand where each page sits. Websites that relied on rel=next/prev to indicate pagination should make sure the same relationships are expressed through crawlable links, supported by sitemaps and structured data that give Google the context it needs.
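As a minimal sketch of making hierarchy explicit with schema markup, the Python function below builds a schema.org `BreadcrumbList` in JSON-LD from an ordered trail of page names and URLs. The function name, the example URLs, and the choice of JSON-LD over microdata are illustrative assumptions; the output would be embedded in a `<script type="application/ld+json">` element on the page.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build a schema.org BreadcrumbList as a JSON-LD string.

    `trail` is an ordered list of (name, url) pairs, from the home page
    down to the current page.
    """
    items = [
        {
            "@type": "ListItem",
            "position": index,  # schema.org positions are 1-based
            "name": name,
            "item": url,
        }
        for index, (name, url) in enumerate(trail, start=1)
    ]
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": items,
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Hypothetical category trail for a running-shoes listing page.
    print(breadcrumb_jsonld([
        ("Home", "https://example.com/"),
        ("Shoes", "https://example.com/shoes/"),
        ("Running", "https://example.com/shoes/running/"),
    ]))
```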


Effects on User Experience

The removal of rel=next/prev has little direct effect on user experience: the recommended form of the markup was a `<link>` element in the page’s `<head>`, which browsers do not display. What users depend on when navigating extensive websites is visible navigation, so websites should prioritize a clear, intuitive site structure and provide comprehensive site search so users can quickly locate specific content.

Technical Implications for Webmasters

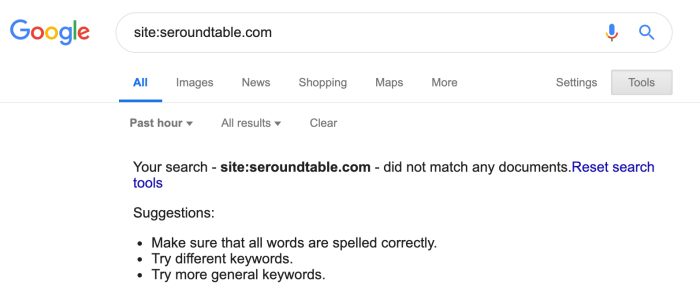

The retirement of rel="next" and rel="prev" as indexing signals marks a shift in how Google crawls and indexes websites. Webmasters must adapt their strategies to ensure their sites continue to be indexed and discoverable, which requires a reevaluation of existing website architecture and content organization. Without these attributes, Google no longer has an explicit declaration that a set of pages forms a series.

Instead, Google infers the relationship between pages from other signals, above all the ordinary links between them. Webmasters therefore need to make sure their content remains reachable and understandable through those links.
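One practical way to check whether a paginated page exposes its series through links is to list the anchors a crawler could follow. The sketch below uses Python’s standard `html.parser`; the class name and the inline-`onclick` heuristic for spotting JavaScript-only controls are assumptions for illustration, and click handlers attached from script files will not be detected.

```python
from html.parser import HTMLParser


class PaginationLinkAudit(HTMLParser):
    """Collect link targets a crawler can follow and count likely JS-only controls."""

    def __init__(self) -> None:
        super().__init__()
        self.crawlable: list[str] = []
        self.js_only = 0

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if tag == "a" and href and not href.startswith("javascript:"):
            # A real <a href> link: crawlers can discover this URL.
            self.crawlable.append(href)
        elif tag in ("a", "button") and "onclick" in attr_map:
            # Heuristic: a control driven only by an inline click handler
            # gives crawlers no URL to follow.
            self.js_only += 1


if __name__ == "__main__":
    page = """
    <nav>
      <a href="/blog/?page=1">1</a>
      <a href="/blog/?page=3">3</a>
      <button onclick="loadPage(4)">Next</button>
    </nav>
    """
    audit = PaginationLinkAudit()
    audit.feed(page)
    print(audit.crawlable)  # ['/blog/?page=1', '/blog/?page=3']
    print(audit.js_only)    # 1
```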

Adjustments to Website Architecture

To compensate for the loss of rel="next" and rel="prev", webmasters should design their site architecture deliberately: a clear hierarchy, well-defined categories, and internal links that guide users and search engine crawlers through the site. This usually means re-evaluating internal linking so that important pages, including deep pages in a paginated series, are linked from relevant places and reachable in a few clicks.
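Click depth, the minimum number of links a crawler or user must follow from the home page, is a simple way to measure whether a hierarchy keeps pages within reach. The sketch below computes it with a breadth-first search over a hypothetical internal link graph represented as a Python dictionary; collecting that graph from a real site is left out.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Breadth-first search over an internal link graph.

    Returns the minimum number of clicks needed to reach each page from
    `start`. Pages missing from the result are unreachable through links.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # in BFS, the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


if __name__ == "__main__":
    # Hypothetical category paginated with "next" links only.
    site = {
        "/": ["/shoes/"],
        "/shoes/": ["/shoes/?page=2"],
        "/shoes/?page=2": ["/shoes/?page=3"],
        "/shoes/?page=3": ["/shoes/?page=4"],
    }
    print(click_depths(site, "/"))
```

In the example, page 4 sits four clicks deep because each page links only to the next one. Linking all numbered pages from the first category page would bring each of them to depth 2.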

Strategies for Indexing Large Websites

Effective indexing of large websites after the retirement of rel="next" and rel="prev" requires a multi-faceted approach. Various strategies can be employed, each with its own set of advantages and disadvantages.


| Strategy | Description | Pros | Cons |
|---|---|---|---|
| Hierarchical Structure | Organize content into a clear, logical hierarchy with well-defined categories and subcategories. | Facilitates navigation for users and search engines. Improved crawlability. | Can be challenging to implement for complex or rapidly growing sites. |
| Breadcrumbs and Sitemaps | Implement breadcrumbs on each page to show the user’s current location within the site’s hierarchy. Use well-structured sitemaps. | Provides clear navigational cues for users and search engines. Helps maintain a logical structure. | Requires careful maintenance to keep breadcrumbs and sitemaps up-to-date. |
| Internal Linking Strategy | Develop a robust internal linking structure that connects relevant pages within the website. | Improves site crawlability and enhances user experience. | Requires a thorough understanding of website structure and content relationships. |
| Content Clustering | Group similar content together to create thematic clusters. | Helps users find related information and improves site authority on specific topics. | Can be complex to implement effectively, particularly for sites with broad content coverage. |

Sitemaps and Robots.txt Adjustments

Webmasters should review and, where needed, adjust their sitemaps and robots.txt files. A well-structured sitemap, updated regularly, helps guide search engine crawlers through the website. The robots.txt file should be reviewed carefully to ensure that essential pages, including paginated listing pages, are not blocked from crawling. Keep in mind that robots.txt controls crawling, not indexing: a page that must stay out of search results needs a noindex directive, and it must remain crawlable for that directive to be seen.
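To spot-check which URLs a robots.txt file blocks, Python’s standard `urllib.robotparser` can evaluate rules supplied as text. This is a rough sketch: the helper name and example rules are assumptions, and Python’s parser follows the original robots.txt conventions (prefix matching, first matching rule wins) rather than Google’s longest-match and wildcard handling, so results for complex files may differ from Google’s.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules forbid `agent` to crawl.

    robots.txt governs crawling, not indexing: a page that must stay out of
    search results needs a noindex directive instead, and it has to remain
    crawlable for that directive to be seen.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /cart/\nDisallow: /search\n"
    urls = [
        "https://example.com/shoes/?page=2",  # paginated listing: should stay crawlable
        "https://example.com/cart/checkout",
        "https://example.com/search?q=boots",
    ]
    print(blocked_urls(rules, urls))
```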

Impact on Structured Data Markup

Structured data markup, like schema.org, helps search engines understand the context of web pages. rel="next" and rel="prev" signaled pagination, but Google does not document a structured data type that takes over that role. What markup can do is clarify each page’s place in the site (for example, with breadcrumbs) and describe the items a listing page contains. Webmasters should review how their existing structured data markup can be adapted accordingly.
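As one illustration of markup that describes a listing page, the sketch below emits a schema.org `ItemList` for the items shown on a single page of a paginated series. Google does not document `ItemList` as a pagination signal, and continuing the `position` numbers across pages is a design assumption made here so each item keeps a stable place in the overall list; the function name and parameters are hypothetical.

```python
import json


def item_list_jsonld(item_urls: list[str], page: int, per_page: int) -> str:
    """Describe the items on one page of a paginated listing as a schema.org ItemList.

    Positions continue across pages (page 2 starts at per_page + 1), an
    assumption made so each item keeps one position in the whole series.
    """
    first_position = (page - 1) * per_page + 1
    data = {
        "@context": "https://schema.org",
        "@type": "ItemList",
        "itemListElement": [
            {"@type": "ListItem", "position": first_position + offset, "url": url}
            for offset, url in enumerate(item_urls)
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Hypothetical page 2 of a category showing 20 products per page.
    print(item_list_jsonld(
        ["https://example.com/p/21", "https://example.com/p/22"], page=2, per_page=20
    ))
```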

Alternative Indexing Methods

Google’s retirement of rel="next" and rel="prev" as an indexing signal calls for a reevaluation of how webmasters handle pagination and for alternative methods that keep content properly indexed and easy to navigate. Webmasters who implement these techniques proactively are better placed to maintain their site’s visibility and ranking. Different pagination approaches offer varying degrees of effectiveness and complexity.

Understanding the nuances of each method is crucial for choosing the most suitable solution for specific website needs. This exploration will delve into various methods, their strengths and weaknesses, and practical implementation strategies.

Pagination Alternatives

Various methods exist for handling pagination, each with its own set of benefits and drawbacks. Understanding these alternatives allows webmasters to choose the most appropriate approach for their specific website architecture and user needs.

Implementing Alternative Pagination Methods

Effective implementation of alternative pagination methods involves careful consideration of the chosen approach. It requires a thorough understanding of how each method interacts with search engines and how to optimize the user experience.
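Whatever method serves the content, each page of a series needs a predictable slice of items and known neighbours. The sketch below shows the underlying offset arithmetic in Python; the function name and the returned dictionary layout are assumptions, and a real application would pass `offset` and `limit` to its database or API query.

```python
import math


def page_window(total_items: int, per_page: int, page: int) -> dict:
    """Return the item slice and neighbouring page numbers for one page.

    Pages are numbered from 1. `prev`/`next` are None at either end of the
    series, which is where a template would omit the matching link.
    """
    if per_page < 1:
        raise ValueError("per_page must be at least 1")
    last_page = max(1, math.ceil(total_items / per_page))
    if not 1 <= page <= last_page:
        raise ValueError(f"page must be between 1 and {last_page}")
    offset = (page - 1) * per_page
    return {
        "offset": offset,                              # items to skip
        "limit": min(per_page, total_items - offset),  # items on this page
        "prev": page - 1 if page > 1 else None,
        "next": page + 1 if page < last_page else None,
        "last": last_page,
    }


if __name__ == "__main__":
    # 95 items at 20 per page: page 5 is the last page and holds 15 items.
    print(page_window(95, 20, 5))
```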

Comparison of Pagination Solutions

The following table provides a comparative overview of various pagination methods, highlighting their strengths, weaknesses, and implementation considerations.

| Method | Description | Pros | Cons |
|---|---|---|---|
| Server-Side Rendering (SSR) | Generates the HTML for each page on the server, allowing complete control over content and SEO. | Provides strong control over indexing, with distinct titles, meta descriptions, and sitemap entries for each page. Crucially, the full content is in the HTML that search engines receive. | Can be more complex to implement, especially for large datasets; requires more server resources and can affect loading times. |
| Client-Side Pagination (JavaScript) | Loads initial content and uses JavaScript to load additional pages as the user navigates. | Offers a smoother user experience by avoiding full page reloads, improving engagement and interaction. | Can be hard for search engines to crawl and index, particularly crawlers that do not execute JavaScript, or when pagination controls are not real links with URLs. Effectiveness depends heavily on the JavaScript implementation and site structure. |
| Infinite Scroll | Loads content as the user scrolls, simulating a continuous page. | Provides a seamless user experience, mimicking a continuous flow of content and engaging users. | Search engine crawlers generally do not scroll, so items beyond the first batch may never be indexed unless the feed is backed by paginated component pages, each reachable at its own URL. |
| Pre-Rendering | Renders content in advance to create static HTML for each page. | Search engines can easily crawl and index pre-rendered pages, resulting in accurate and complete indexing. | Requires significant initial setup and maintenance effort, which grows with the amount of content. Less dynamic than other options. |
| API-based Pagination | Uses an Application Programming Interface (API) to fetch data for each page, enabling dynamic loading of content. | Enables complex filtering and sorting options and a tailored user experience. | Depends on robust API design and implementation, which increases complexity and maintenance effort. Content fetched only through the API still needs crawlable page URLs to be indexed. |
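For infinite scroll, a widely recommended remedy is to back the feed with component pages: fixed chunks of the feed, each served at its own URL, which the client-side script loads and reflects in the address bar as the user scrolls. The sketch below shows only the server-side chunking in Python; the `?page=N` URL scheme, the function name, and serving the first chunk at the bare base URL are illustrative assumptions.

```python
def component_pages(item_ids: list[str], per_page: int, base_url: str) -> dict[str, list[str]]:
    """Split an infinite-scroll feed into fixed-size component pages.

    Returns {url: items}. The first chunk is served at base_url and later
    chunks at base_url?page=N (a hypothetical URL scheme), so a crawler that
    never scrolls can still request every chunk directly.
    """
    if per_page < 1:
        raise ValueError("per_page must be at least 1")
    pages: dict[str, list[str]] = {}
    for start in range(0, len(item_ids), per_page):
        number = start // per_page + 1
        url = base_url if number == 1 else f"{base_url}?page={number}"
        pages[url] = item_ids[start:start + per_page]
    return pages


if __name__ == "__main__":
    feed = ["story-a", "story-b", "story-c", "story-d", "story-e"]
    for url, items in component_pages(feed, 2, "/news").items():
        print(url, items)
```

Chunk boundaries should stay stable between requests. If new items are inserted at the top of a feed, the same URL can return different items over time, which is worth accounting for when choosing the URL scheme.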

Example Implementation Strategies

Effective implementation of these methods requires tailoring the strategy to the specific website’s needs. Consider factors such as the size of the dataset, the desired user experience, and the technical capabilities of the development team. For instance, a news website might benefit from infinite scroll, while an e-commerce platform with a large product catalog might favor server-side rendering.

Future of Web Design and Development

The retirement of rel=next/prev indexing signals a significant shift in how search engines understand and index web pages. It calls for a reevaluation of current web design and development practices, including how websites are structured and how search engines crawl and interpret their content. The removal of rel=next/prev suggests a shift towards a more nuanced understanding of page relationships.

Instead of relying on sequential link annotations, search engines may lean more on ordinary links, contextual analysis, and semantic understanding of content to establish the hierarchy and interconnectedness of information. This favors more robust, well-interlinked website structures.

Potential Adjustments to Web Development Frameworks and Libraries

This change calls for a re-evaluation of current web development practices, and frameworks and libraries may need adaptation. Where themes or components emitted rel=next/prev as their only pagination hint for search engines, that role now falls to crawlable links and a clear site structure.

| Framework/Library | Potential Adjustment | Description |
|---|---|---|
| React, Angular, Vue.js | Render pagination as crawlable links | Developers need to signal page hierarchy and relationships without relying on rel=next/prev. In practice this means rendering pagination controls as `<a href>` links to real URLs, even when JavaScript and API calls load the content, rather than as click handlers with no URL. |
| WordPress, Drupal | Review theme and plugin output | Content management systems (CMS) may need theme and plugin updates. Existing rel=next/prev output can stay, since it is valid HTML that other search engines may still read, but themes should make sure paginated archives are linked with plain, crawlable links and carry self-referencing canonical tags. |
| Next.js, Gatsby | Embrace server-side rendering (SSR) or static site generation (SSG) | SSR and SSG deliver each paginated page as complete HTML at its own URL, so the page hierarchy is visible to crawlers without depending on client-side pagination. |
| HTML, CSS, JavaScript | Focus on semantic HTML and structured data | Semantic HTML5 elements (such as `<nav>` for pagination and breadcrumbs), schema.org vocabulary, and formats such as JSON-LD or microdata become more important for representing page relationships and giving search engines context. |
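For the semantic-HTML approach, here is a minimal sketch in Python of server-rendered pagination markup: every page number is a plain `<a href>` link inside a `<nav>` element, with `aria-current` marking the current page. The `?page=N` URL scheme and the function name are assumptions. The `rel="prev"`/`rel="next"` attributes are kept because they are valid HTML and harmless, even though Google no longer uses them as an indexing signal.

```python
from html import escape


def pagination_nav(base_url: str, current: int, last: int) -> str:
    """Render numbered pagination as plain <a href> links inside <nav>.

    The first page lives at base_url and later pages at base_url?page=N
    (a hypothetical URL scheme).
    """
    if not 1 <= current <= last:
        raise ValueError("current must be between 1 and last")

    def page_url(n: int) -> str:
        return base_url if n == 1 else f"{base_url}?page={n}"

    parts = ['<nav aria-label="Pagination">']
    if current > 1:
        # rel="prev"/"next" are valid HTML link types; Google no longer uses
        # them for indexing, but the href is still an ordinary crawlable link.
        parts.append(f'<a href="{escape(page_url(current - 1))}" rel="prev">Previous</a>')
    for n in range(1, last + 1):
        if n == current:
            parts.append(f'<a href="{escape(page_url(n))}" aria-current="page">{n}</a>')
        else:
            parts.append(f'<a href="{escape(page_url(n))}">{n}</a>')
    if current < last:
        parts.append(f'<a href="{escape(page_url(current + 1))}" rel="next">Next</a>')
    parts.append("</nav>")
    return "\n".join(parts)


if __name__ == "__main__":
    print(pagination_nav("/shoes/", current=2, last=3))
```

Sites with hundreds of pages would usually render a window of numbers around the current page plus first and last links, rather than every number.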

Preparing for These Changes

Developers can prepare by understanding the shift away from sequential linking. Focus should be on creating content that is well-structured, informative, and contextually rich. Using a variety of best practices, including schema.org markup, will become crucial. By prioritizing clear semantic structure, developers can ensure their sites are understood by search engines, even without the rel=next/prev attribute.

Influence on Mobile-First Indexing

The retirement of rel=next/prev coincided with Google’s move to mobile-first indexing, and both changes reward the same thing: a consistent, logical page structure across all devices. Because Google primarily indexes the mobile version of a page, pagination links and navigation must be present on mobile, not only on desktop. A clear, intuitive navigation structure across all devices will be critical to ensuring user satisfaction.

User Experience Considerations

The retirement of Google’s rel=next/prev indexing signals a shift in how search engines interpret website structure, and it is a good prompt to re-evaluate how users navigate and interact with large websites. The markup was never visible to visitors, so the work lies in the on-page navigation that both users and crawlers follow. Understanding the user journey is paramount.

Users expect a clear and consistent path when exploring websites, and weak on-page navigation can lead to disorientation and frustration, particularly on sites with extensive content. It is worth analyzing how users actually move through paginated content and designing navigation that supports them.

Impact on User Navigation

The removal of rel=next/prev does not change what users see: the recommended form of the markup was a `<link>` element in the page’s `<head>`, which mainstream browsers do not display. What it changes is how much weight falls on visible navigation, because those same on-page links are now the main way Google discovers the pages of a series. Users unfamiliar with a site’s internal structure, or who rely on visual cues, depend on that navigation to find their way around complex site architectures.

Implementing alternative navigation systems is crucial to maintain user flow.

Potential Improvements to the User Journey

While the removal of rel=next/prev presents challenges, it also creates opportunities for improved user experience. Websites can now focus on implementing more user-centric design strategies. This might include more prominent sitemaps, intuitive breadcrumbs, and improved search functionality. Clearer labeling of content sections, logical categorization, and a well-defined hierarchy can enhance user understanding and navigation.

Alternative Navigation Strategies

Websites can leverage various techniques to enhance navigation in the absence of rel=next/prev. Employing a robust sitemap, both visual and text-based, is essential for large websites. Breadcrumbs provide a visual trail that shows the user’s current location within the site’s hierarchy. Interactive elements, like collapsible sections and filtering tools, can streamline navigation for users exploring extensive content.

Implementing a powerful search function that allows users to quickly locate specific content becomes even more critical.

Maintaining User-Friendly Experience with Alternative Indexing Methods

Implementing alternative indexing methods should not compromise the user experience. A smooth transition requires a user-focused approach to design and implementation. A well-structured website, with logical categorization and clear labeling, remains essential. A focus on intuitive design principles will help maintain a positive user experience, even with the shift away from rel=next/prev. For example, a large e-commerce site might utilize a combination of sitemaps, filtered search, and categorized browsing to guide users through its extensive product catalog.

User-Centric Website Designs

User-centric designs are vital for ensuring a positive experience, regardless of indexing methods. An example of a user-centric design for a blog is to incorporate an intuitive table of contents that allows users to quickly navigate to specific sections. Another example is to implement a dynamic search bar that adapts to user input, suggesting relevant articles as they type.

Such designs prioritize user needs and expectations, leading to a more satisfying experience.
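The type-ahead suggestion idea can be sketched with a sorted list and binary search from Python’s standard `bisect` module. This is a minimal illustration, assuming the titles fit in memory and that matching the start of the whole title is enough; production site search would more likely use a dedicated search engine with word-level matching and ranking. The class name is hypothetical.

```python
import bisect


class TitleSuggester:
    """Suggest titles that start with the text typed so far (case-insensitive)."""

    def __init__(self, titles: list[str]) -> None:
        # Keep (case-folded key, original title) pairs sorted by key so that
        # all titles sharing a prefix sit next to each other.
        self._entries = sorted((title.casefold(), title) for title in titles)
        self._keys = [key for key, _ in self._entries]

    def suggest(self, prefix: str, limit: int = 5) -> list[str]:
        needle = prefix.casefold()
        # Binary search for the first key >= needle, then scan forward
        # while keys still start with the needle.
        index = bisect.bisect_left(self._keys, needle)
        results: list[str] = []
        while (
            index < len(self._entries)
            and len(results) < limit
            and self._keys[index].startswith(needle)
        ):
            results.append(self._entries[index][1])
            index += 1
        return results


if __name__ == "__main__":
    suggester = TitleSuggester([
        "Pagination best practices",
        "Page speed basics",
        "Structured data guide",
        "Paginated archives",
        "Sitemaps explained",
    ])
    print(suggester.suggest("pagi"))  # ['Paginated archives', 'Pagination best practices']
```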

Strategies Post-Retirement

The retirement of Google’s rel="next" and rel="prev" indexing signals calls for a shift in SEO strategy. Webmasters should focus on a robust internal linking structure and on making content discoverable through links rather than through sequence markup. That, in turn, requires a deeper understanding of how Google’s algorithms assess and index content.

Adapting to the Change

The removal of rel="next" and rel="prev" means that Google no longer uses these tags to understand the sequential structure of websites. Consequently, website architecture should be reviewed to ensure that internal linking reflects the site’s hierarchical structure, so that every page in a series can be reached through links.
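A quick way to find pages that internal linking has missed is to compare the URLs listed in the XML sitemap with the URLs that other pages actually link to. The sketch below assumes both have already been collected (for example, by a crawler) and uses a hypothetical dictionary mapping each page to its outgoing links; the home page is excluded because it is the entry point.

```python
def orphan_pages(sitemap_urls: set[str], links: dict[str, list[str]], home: str) -> set[str]:
    """Return sitemap URLs that no other page links to (self-links ignored)."""
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return {url for url in sitemap_urls if url not in linked and url != home}


if __name__ == "__main__":
    sitemap = {"/", "/shoes/", "/shoes/?page=2", "/shoes/?page=3"}
    # Hypothetical crawl result: page 3 is only reachable via the sitemap.
    internal_links = {
        "/": ["/shoes/"],
        "/shoes/": ["/shoes/?page=2", "/shoes/"],
    }
    print(orphan_pages(sitemap, internal_links, home="/"))
```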

Optimizing Website Content

Effective content optimization now extends beyond simply incorporating keywords. A strategic focus on semantically rich content, well-defined headings, and comprehensive internal linking is essential. This approach helps Google’s algorithms understand both the site’s overall theme and the context of individual pages. Providing clear context through metadata, schema markup, and well-structured content is crucial.

Modifying Existing Content Strategies

Existing content strategies should be reviewed with an emphasis on thorough internal linking and comprehensive site architecture. Content must be structured to guide users and crawlers through the site. This may involve reorganizing existing content or creating new pieces to strengthen the site’s topical coverage, with clear connections between related pages.

Improved Strategies

Improved strategies now prioritize creating a sitemap that accurately reflects the website’s structure and content. Employing robust internal linking structures is crucial, connecting related content effectively and guiding crawlers through the site. Content should be optimized to meet the needs of users and crawlers alike.
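The sketch below generates a minimal XML sitemap in the sitemaps.org format with Python’s standard `xml.etree.ElementTree`. The input format, a list of `(url, lastmod)` pairs, is an assumption. The protocol caps a single sitemap file at 50,000 URLs (and 50 MB uncompressed); larger sites split their URLs across several files listed in a sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> bytes:
    """Build a sitemaps.org <urlset> from (loc, lastmod) pairs.

    `lastmod` is expected in W3C date format, e.g. "2024-05-01".
    """
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    # default_namespace writes xmlns="..." on the root instead of an ns0: prefix.
    return ET.tostring(
        urlset, encoding="utf-8", xml_declaration=True, default_namespace=SITEMAP_NS
    )


if __name__ == "__main__":
    xml_bytes = build_sitemap([
        ("https://example.com/shoes/", "2024-05-01"),
        ("https://example.com/shoes/?page=2", "2024-05-01"),
    ])
    print(xml_bytes.decode("utf-8"))
```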

Crawlability and Indexability

Crawlability and indexability remain critical. Website speed, mobile-friendliness, and secure connections are fundamental aspects of site architecture. Technical SEO, ensuring the site’s technical aspects align with Google’s search engine standards, is paramount. Effective sitemaps, structured data markup, and proper use of canonical tags remain essential; for paginated content, that usually means each page in a series carries its own URL as canonical rather than pointing to page 1.
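Canonical tags deserve a specific check on paginated series: Google advises against using page 1 as the canonical for every page and recommends giving each page its own canonical URL. The sketch below extracts a page’s `<link rel="canonical">` with Python’s `html.parser` and tests whether it is self-referencing. The class and function names are hypothetical, and the exact-string comparison does not normalise trailing slashes or parameter order.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        rel_values = (attr_map.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attr_map.get("href")


def canonical_is_self(page_url: str, html: str) -> bool:
    """True when the page declares its own URL as canonical."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical == page_url


if __name__ == "__main__":
    page_2 = '<head><link rel="canonical" href="https://example.com/shoes/?page=2"></head>'
    print(canonical_is_self("https://example.com/shoes/?page=2", page_2))  # True
```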

Outcome Summary

Google’s retirement of rel=next/prev indexing forces a reevaluation of website structure and SEO strategies. While it presents challenges, it also encourages better-linked, more navigable sites. Adapting to the change is key to maintaining website visibility and providing a seamless user experience in the evolving search landscape, and it will shape how web design and development approach site structure.