Fixing the “Blocked due to other 4xx issue” status in Google Search Console is crucial for website health. A 4xx response tells Googlebot that it cannot retrieve a page, and pages that keep returning one are left out of Google’s search results.

This guide walks you through identifying and resolving these errors: the 4xx error types, their causes, how to troubleshoot them using everything from server logs to HTTP headers, and the preventative measures that keep your website visible and accessible to search engines.

Understanding the “Blocked due to other 4xx issue” Error

The “Blocked due to other 4xx issue” status in Google Search Console indicates that Googlebot, Google’s crawler, requested a page and received a 4xx HTTP status code that Search Console does not report under a more specific label. This isn’t necessarily a simple “broken link”; Google does not index URLs that return 4xx responses, so understanding the root cause is crucial for restoring your website’s visibility and health. The 4xx series represents client errors: the server is saying that it cannot or will not fulfil the request as it was sent.


These responses are generated by your web server (or by a CDN or firewall in front of it), so the fix is usually on the website’s end rather than Google’s. When Googlebot receives a 4xx response, it does not index the content of that URL, and a previously indexed URL that keeps returning 4xx is eventually dropped from the index. Each URL listed under this status in Search Console returned such a response the last time Google crawled it.

Explanation of 4xx Errors

A 4xx error is an HTTP client error status code: the server reports that it cannot or will not process the request as it was sent. In practice the cause is frequently on the website’s side, for example a removed page, an access rule, or a firewall that rejects crawler traffic, rather than anything Googlebot did wrong. Some of these errors are temporary, while others persist until the configuration is fixed. Any URL that returns a 4xx response to Googlebot is left out of the index.

Types of 4xx Errors

Several 4xx codes show up in Search Console. Google reports 401, 403, and 404 under their own labels (“Blocked due to unauthorized request (401)”, “Blocked due to access forbidden (403)”, and “Not found (404)”); every other 4xx code falls under “Blocked due to other 4xx issue”. The most common types include:

- 400 Bad Request: This error indicates that the request from Googlebot was malformed or could not be understood by the server. This might occur if there’s a syntax error in a URL or if the server rejects the request headers.

- 403 Forbidden: This signifies that the server is refusing access to the requested resource. This is often due to incorrect server configuration, access restrictions, or security measures. It is reported under its own label and is listed here for comparison.

- 404 Not Found: This classic error means the requested resource, such as a specific page or file, cannot be located on the server. This is a common issue, often caused by broken links or deleted content. It is also reported under its own label.

- 408 Request Timeout: This happens when the server times out while waiting for the client to finish sending its request. It usually points to server timeout settings or network latency.

- 429 Too Many Requests: This error occurs when the client sends more requests than the server’s rate limit allows in a given period. It can mean that rate limiting is configured too strictly for crawler traffic or that the server is not sized for the volume of requests.
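
To make the grouping concrete, here is a minimal sketch of how a status code maps to the label Search Console is likely to show for it. The function name `search_console_bucket` is hypothetical, and the mapping is a simplification based on the labels used in the Page indexing report, not an official API.

```python
def search_console_bucket(status: int) -> str:
    """Map an HTTP status code to the Page indexing label it most likely falls under.

    Simplified illustration only: Search Console applies more rules than this.
    """
    if status == 401:
        return "Blocked due to unauthorized request (401)"
    if status == 403:
        return "Blocked due to access forbidden (403)"
    if status == 404:
        return "Not found (404)"
    if 400 <= status < 500:
        # Everything else in the 4xx range (400, 405, 410, ...).
        # 429 is kept here for simplicity, although Google may treat it
        # more like a server overload signal.
        return "Blocked due to other 4xx issue"
    if 500 <= status < 600:
        return "Server error (5xx)"
    return "Not an error status"


for code in (400, 403, 404, 410, 503):
    print(code, "->", search_console_bucket(code))
```

Running a list of observed status codes through a helper like this shows quickly which URLs belong to this report row and which belong to a neighbouring one.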

Common Causes of 4xx Errors

Several reasons can contribute to 4xx errors, leading to content blockage in Google Search Console.

- Broken Links and Redirects: Incorrect or broken internal links, broken redirects, or outdated redirects can lead to 404 errors. If a page redirects to a non-existent URL, Googlebot will receive a 404 error, impacting indexing.

- Server Configuration Issues: Incorrect server configurations, including misconfigured web servers, can trigger various 4xx errors. Improper setup of Apache or Nginx configurations can lead to these issues.

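- Temporary Server Downtime: If the server is temporarily down or experiencing outages, Googlebot usually encounters 5xx errors, which are server-side errors and are reported separately in Search Console. Some servers and proxies, however, may answer with 408 or 429 while under load, and those responses do count as 4xx errors.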

- Caching Issues: Outdated or misconfigured caching mechanisms can result in serving incorrect content or redirecting to a non-existent page.

- Rate Limiting: If the website’s server has rate limiting in place, Googlebot’s requests might be blocked due to exceeding the limit.

Multiple 4xx Errors

A website might receive multiple 4xx errors for several reasons:

- Large-Scale Website Changes: Major updates or migrations to a new platform or server can introduce errors in the redirect chain or broken links across many pages.

- Malware or Hacking: Malware or hacking can corrupt website files and configurations, causing various 4xx errors.

- Content Management System (CMS) Issues: Problems with the CMS itself can cause a large number of 4xx errors.

- Poorly Maintained Website: A website that isn’t properly maintained and updated regularly is more likely to accumulate 4xx errors.

Importance of Resolving 4xx Errors

Addressing 4xx errors is crucial for maintaining a healthy website and preserving its visibility in search results. Resolving these errors allows Googlebot to crawl and index your website effectively, leading to better rankings and a positive user experience.

4xx Error Code Comparison

| Error Code | Description | Potential Implications |
|---|---|---|
| 400 Bad Request | Malformed or invalid request | The server cannot process Googlebot’s request. |
| 403 Forbidden | Server denies access | Content is restricted or blocked. |
| 404 Not Found | Resource not found | Pages or files are missing. |
| 408 Request Timeout | Server timed out waiting for the request | The request was not completed in time. |
| 429 Too Many Requests | Too many requests from the client | A rate limit was hit or the server is overloaded. |

Troubleshooting the Issue

Facing a “Blocked due to other 4xx issue” in Google Search Console? This signifies that your server is returning a 4xx error code to Googlebot, preventing it from accessing and indexing certain pages. This guide provides a structured approach to diagnosing and resolving these problems, ultimately improving your website’s visibility.

Checking Server Logs for 4xx Errors

Server logs are invaluable for identifying the root cause of 4xx errors. These logs contain detailed information about requests and responses, offering crucial insights into the problem. Regularly reviewing these logs is essential for proactive website maintenance.

To pinpoint the specific URLs associated with 4xx errors, look for entries in your server logs that correspond to requests for those URLs. Crucially, pay close attention to the HTTP status codes. The presence of a 4xx code, such as 404, 403, or 400, indicates a potential problem that needs investigation.
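
As a rough illustration of this log review, the sketch below filters access-log lines for 4xx responses served to a Googlebot user agent. It assumes the common "combined" log format used by default in Apache and Nginx; the sample lines, IP addresses, and the `googlebot_4xx` helper are made up for the example. The user-agent string can be spoofed, so treat matches as candidates rather than proof.

```python
import re

# Assumed format: ip - user [time] "METHOD path PROTO" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_4xx(lines):
    """Yield (status, path) for 4xx responses served to a Googlebot user agent."""
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in the assumed format
        status = int(match["status"])
        if 400 <= status < 500 and "Googlebot" in match["agent"]:
            yield status, match["path"]


# Made-up sample lines; in practice, iterate over your access log file instead.
sample = [
    '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /old-page HTTP/1.1" 410 0 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2024:13:55:40 +0000] "GET /old-page HTTP/1.1" 410 0 "-" "Mozilla/5.0"',
    '66.249.66.1 - - [10/Oct/2024:13:56:02 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

print(list(googlebot_4xx(sample)))
```

If your server uses a custom log format, the regular expression needs adjusting to match it.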

Common Server Configuration Issues

Various server configurations can lead to 4xx errors. Identifying these configurations is a crucial step in troubleshooting.

| Configuration Issue | Description |
|---|---|
| Incorrect File Paths | Misspelled file names or incorrect directory structures can result in a 404 error. |
| Temporary File System Errors | Issues with temporary file storage, such as a full disk or permissions problems, can disrupt request handling and produce error responses. |
| Server Overload | High traffic volume can overwhelm server resources, leading to 429 or 5xx responses or slow replies. |
| Incorrect Permissions | Insufficient file permissions can prevent the server from serving files to Googlebot, typically resulting in 403 errors. |
| Misconfigured Web Server | Incorrect server configuration settings, like incorrect headers or missing modules, can cause 4xx errors. |

Problems with robots.txt

The robots.txt file dictates which parts of your website Googlebot is allowed to crawl. Issues with this file can block access to crucial content.

Ensure the robots.txt file is correctly configured to allow access to all pages you want indexed. Incorrectly disallowing a specific URL or directory can prevent Googlebot from crawling essential pages. Double-check the syntax and the content of the file to avoid unexpected blockage.
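
One way to double-check the file is to test a list of important URLs against it. The sketch below uses Python’s standard `urllib.robotparser`; the robots.txt content, the URLs, and the `blocked_urls` helper are hypothetical. Python’s parser may not interpret every rule exactly the way Googlebot does (wildcard patterns in particular), so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the check runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/admin/login",
]

print(blocked_urls(ROBOTS_TXT, urls))
```

Any URL in the output that you do want indexed points to a rule that needs loosening.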

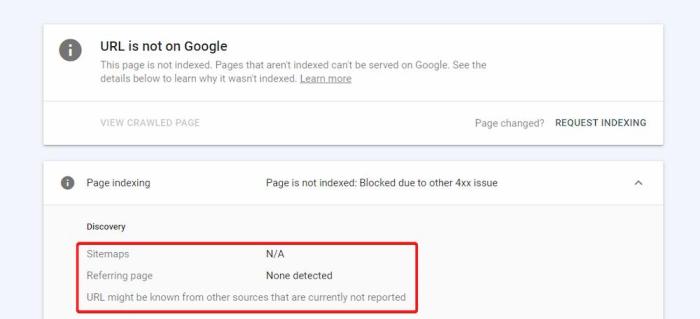

Identifying Affected URLs in Google Search Console

Google Search Console provides a powerful toolset for identifying URLs experiencing 4xx errors. The URL Inspection tool, which replaced the retired “Fetch as Google” tool, lets you run a live test to see how Googlebot fetches a page. This shows whether a specific URL currently returns a 4xx response.

The Page indexing report, which replaced the older “Crawl Errors” report, lists URLs by the reason they are not indexed, including “Blocked due to other 4xx issue”. Opening that row reveals the specific URLs affected by the problem, which gives you a concrete list to troubleshoot.


Internal Links and Broken Links

Broken internal links can prevent Googlebot from reaching crucial content, so a thorough review of your website’s internal link structure is critical. Identify and fix any links pointing to pages that no longer exist. This ensures a seamless user experience and allows Googlebot to crawl and index your content effectively.

| Method | Description | Pros | Cons |
|---|---|---|---|
| Manual Inspection | Inspecting individual pages for broken links. | Detailed understanding of specific pages. | Time-consuming for large websites. |
| Link Checker Tools | Using software to automatically check for broken links. | Efficient for large websites. | May not detect all types of broken links. |
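
The core of a link checker can be sketched in a few lines. The example below extracts links from a page with the standard `html.parser` module and compares internal ones against a table of known status codes. The `known_status` mapping stands in for the results of a real crawl, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the page URL.
            self.links.append(urljoin(self.base_url, href))


def broken_internal_links(html, base_url, known_status):
    """Return internal links whose known status code is 400 or higher."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    host = urlparse(base_url).netloc
    return [
        link
        for link in collector.links
        if urlparse(link).netloc == host
        and link in known_status
        and known_status[link] >= 400
    ]


page = (
    '<a href="/about">About</a> '
    '<a href="/old-offer">Offer</a> '
    '<a href="https://other.example/x">External</a>'
)
statuses = {
    "https://example.com/about": 200,
    "https://example.com/old-offer": 410,
}

print(broken_internal_links(page, "https://example.com/blog/", statuses))
```

Links that are missing from the status table are skipped rather than reported, which keeps the sketch from flagging pages it has no data for.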

Redirect Chains and Loops

Redirect chains and loops can confuse Googlebot and waste crawl requests, and a chain that ends at a missing or restricted URL surfaces as a 4xx error. It is crucial to identify and resolve these issues.

A redirect chain occurs when multiple redirects are applied sequentially to a URL. A redirect loop occurs when a series of redirects forms a cycle. Both can disrupt the crawling process. Use tools to analyze redirects and identify chains or loops that need fixing. This helps ensure that Googlebot can successfully navigate through your website’s structure.
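
The difference between a chain and a loop is easy to see in code. This sketch follows redirects through a plain dictionary that maps each URL to its redirect target; in practice that mapping would come from a crawl or from your server’s redirect rules. The `trace_redirects` function and the `max_hops` default of 10 are assumptions made for illustration.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow start through the redirects mapping.

    Returns (chain, outcome), where outcome is "ok", "loop", or "too_long".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"  # we came back to a URL already visited
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return chain, "too_long"  # more hops than we are willing to follow
    return chain, "ok"


# Hypothetical redirect rules.
rules = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}

print(trace_redirects("/a", rules))
print(trace_redirects("/x", rules))
```

A chain that resolves with outcome "ok" but has several hops is still worth flattening so that each old URL redirects straight to its final destination.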

Inspecting HTTP Responses with Developer Tools

Browser developer tools offer a way to examine the HTTP responses for specific pages. In the network panel you can see the exact 4xx status code and the response headers for each URL, which allows for a more precise diagnosis and a targeted fix. Keep in mind that your browser may receive a different response than Googlebot does if the server treats requests differently based on user agent or IP address.
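
The same check can be scripted. The sketch below requests a URL with a Googlebot-style user-agent string and returns the status code and headers, using only the standard library. The helper names are hypothetical; `urlopen` follows redirects automatically, so the status is that of the final response. Rules that key on Googlebot’s IP addresses are not reproduced by this test.

```python
import urllib.error
import urllib.request

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url, user_agent=GOOGLEBOT_UA):
    """Build a GET request that identifies itself with the given user agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_status(url, user_agent=GOOGLEBOT_UA, timeout=10):
    """Return (status_code, headers) for url, including 4xx and 5xx responses."""
    request = build_request(url, user_agent)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, dict(response.headers.items())
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx; the error object still carries status and headers.
        return error.code, dict(error.headers.items())
```

Calling `fetch_status("https://example.com/some-page")` once with the default user agent and once with a regular browser string shows whether the server answers crawlers differently.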

Addressing Potential Issues

The “Blocked due to other 4xx issue” status in Google Search Console signals a problem with your website’s server responses. These errors fall under the 4xx HTTP status code category and indicate that your site is rejecting Googlebot’s requests for the affected URLs. Understanding the root cause is crucial for effective remediation. Resolving these issues is essential for maintaining a healthy website, because URLs that keep returning 4xx responses are not indexed.

Common 4xx Error Types and Solutions

Numerous 4xx errors can occur, each with its own specific cause and solution. Knowing which type of error you’re facing is critical to fixing it effectively.

| Error Type | Description | Possible Solution |
|---|---|---|
| 404 Not Found | The requested page cannot be found on the server. | Check for broken links, ensure correct URLs, and implement a 301 redirect for moved pages. |
| 403 Forbidden | The server understands the request but refuses to authorize it. | Verify server permissions, check for incorrect file access rules, and review firewall or bot-protection rules that may reject crawlers. |
| 400 Bad Request | The request was invalid or malformed. | Validate the request data sent to the server, review the request format, and check for incorrect parameters. |
| 429 Too Many Requests | The client exceeded the server’s request rate limit. | Review rate-limiting rules so that legitimate crawler traffic is not throttled, use appropriate caching mechanisms to reduce load, and review your website’s traffic patterns. |

Resolving HTTP Header Issues

Incorrect HTTP headers can lead to various 4xx errors. Carefully reviewing your server’s configuration is crucial to identify and resolve header problems.

To fix HTTP header issues, inspect the response headers returned by your server using browser developer tools or a dedicated HTTP header checker. Confirm that the expected headers (e.g., Content-Type, Cache-Control) are present, correctly formatted, and sent with every response.

Utilizing the Google Search Console URL Inspection Tool

The Google Search Console’s URL Inspection tool is a powerful diagnostic tool for identifying 4xx errors. This tool allows you to see the HTTP response codes, headers, and other details about a specific URL.

Using the URL Inspection tool, you can:

- Inspect the HTTP response code for the problematic URL.

- Review the server’s response headers for any inconsistencies.

- Identify the specific error type using the provided information.

Addressing Server Misconfigurations

Server misconfigurations can cause various 4xx errors. Correcting these configurations is essential for resolving the issue.

Verify your server’s configuration files (e.g., Apache or Nginx configuration files) to ensure that they are correctly configured. Look for any misspellings, incorrect settings, or missing directives. Test your configuration thoroughly after making any changes.

Managing Caching Mechanisms

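Caching mechanisms can sometimes contribute to 4xx errors. Understanding and properly configuring caching is crucial for optimal performance.

Ensure that caching is configured correctly to prevent outdated or stale content from being served. A cache or CDN that has stored an error response can keep serving it after the origin has been fixed, so purge the affected URLs once the underlying problem is resolved. Review your caching settings and ensure that they are consistent with your website’s needs.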

Handling Temporary Server Downtime

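Temporary server downtime usually produces 5xx errors rather than 4xx errors, but it still disrupts crawling, and an overloaded server or proxy may respond with 408 or 429. Implementing proper monitoring and recovery procedures is vital.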

Set up server monitoring tools to detect downtime quickly. Establish a robust recovery plan to restore service promptly. Use a CDN (Content Delivery Network) to improve availability and reduce the impact of downtime on user experience.

Resolving Incorrect Content Types

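Content-type problems can lead to 4xx errors such as 406 Not Acceptable or 415 Unsupported Media Type, which a server returns when it cannot produce or accept the media type involved. Understanding content types and configuring your server to handle them correctly is crucial.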

Ensure that the Content-Type header accurately reflects the type of content being served. Different content types require different handling on the server-side. Review the content type specifications and ensure that the correct headers are being sent.
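
A quick way to spot suspicious headers is to compare the served Content-Type with what the file extension suggests. This sketch uses the standard `mimetypes` module; the `content_type_mismatch` helper is hypothetical, and extension-based guessing is only a heuristic, since many URLs have no extension at all.

```python
import mimetypes


def content_type_mismatch(path, served_content_type):
    """Return the expected MIME type if it differs from the served one, else None."""
    expected, _encoding = mimetypes.guess_type(path)
    if expected is None:
        return None  # unknown extension: nothing to compare against
    # Drop parameters such as "; charset=utf-8" before comparing.
    served = served_content_type.split(";", 1)[0].strip().lower()
    return expected if served != expected else None


print(content_type_mismatch("/index.html", "text/html; charset=utf-8"))
print(content_type_mismatch("/style.css", "text/plain"))
```

A non-empty result does not prove a misconfiguration, but it marks a response worth checking by hand.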


Useful Resources

- Google Search Console Help Center: Provides comprehensive information and troubleshooting guides.

- HTTP Status Codes: A detailed explanation of various HTTP status codes.

- Server Configuration Documentation: Specific documentation for your web server (Apache, Nginx, etc.).

Prevention Strategies

Preventing 4xx errors is crucial for maintaining a healthy and user-friendly website. These errors, which often stem from removed content or server misconfigurations, can negatively impact user experience and search engine rankings. Proactive measures are key to avoiding these problems and ensuring a smooth online presence.

Effective prevention hinges on a combination of proactive monitoring, regular maintenance, and meticulous configuration. By understanding potential pitfalls and implementing robust preventive strategies, you can minimize the risk of 4xx errors and maintain a high-performing website.

Proactive Monitoring Tools

Regular monitoring of website performance is essential for early detection of potential issues. Various tools provide insights into server health, response times, and resource utilization. This allows for timely intervention before problems escalate into 4xx errors.

| Tool | Features | Pros | Cons |
|---|---|---|---|
| Google PageSpeed Insights | Analyzes website performance and identifies areas for improvement. | Free, comprehensive analysis, identifies potential bottlenecks. | Limited insight into server-side issues, requires manual action. |
| Pingdom | Provides real-time monitoring of website performance, alerts on issues. | Real-time monitoring, customizable alerts, comprehensive reporting. | Paid service, requires setup. |
| Uptime Robot | Monitors website uptime and detects downtime. | Free tier, easy setup, simple monitoring. | Limited analysis beyond uptime, doesn’t directly identify 4xx issues. |

Regular Website Maintenance and Updates

Regular website maintenance and updates are vital for preventing 4xx errors. Outdated software, corrupted files, and security vulnerabilities can lead to server instability and errors. Consistent maintenance ensures the website functions optimally.

- Software Updates: Regularly updating server software and applications is crucial. Outdated software may have known vulnerabilities that can lead to errors. Stay updated to mitigate potential issues.

- File Integrity Checks: Periodically checking file integrity can reveal corrupted files that might cause server errors. This proactive approach helps maintain a stable environment.

- Security Audits: Regularly auditing website security is essential. Vulnerabilities can expose the website to attacks that lead to 4xx errors. Implementing strong security measures prevents these attacks.

Robots.txt File Management

The robots.txt file dictates which parts of your website search engine crawlers can access. Keeping this file up to date helps steer crawlers away from URLs that are not meant for them, such as login-protected areas that would otherwise answer with 4xx responses.

- Regular Review: Regularly review and update the robots.txt file to reflect changes in your website structure, so that it neither blocks pages you want indexed nor invites crawlers into areas that reject them.

- Error Handling: If crawlers keep requesting URLs that intentionally return 4xx responses, consider disallowing those paths in robots.txt so that they are no longer requested.

Implementing Error Handling Mechanisms

Implementing proper error handling mechanisms in web applications is crucial for preventing 4xx errors. This involves catching potential errors during processing and presenting user-friendly messages or taking alternative actions.

- Error Logging: Implement logging to track and identify errors that occur in the application. This allows you to identify the root cause of the error and fix it.

- Appropriate Responses: Ensure that the web application sends appropriate HTTP responses (e.g., 404 Not Found) to clients when encountering errors. This helps users understand the problem.

- Redirects: Use redirects (e.g., 301 or 302) to direct users to updated or corrected pages if necessary, avoiding 4xx errors.
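
The points above can be sketched as a small WSGI application that answers with 301 for moved pages, 410 for deliberately removed pages, and 404 for everything unknown. The URL tables and the `status_for` test helper are hypothetical, and a real application would usually rely on its framework’s routing instead.

```python
# Hypothetical URL tables for illustration.
MOVED = {"/old-pricing": "/pricing"}
GONE = {"/summer-sale-2019"}
PAGES = {"/": "Home", "/pricing": "Pricing"}

TEXT = [("Content-Type", "text/plain; charset=utf-8")]


def app(environ, start_response):
    """Minimal WSGI app that returns an appropriate status for each path."""
    path = environ.get("PATH_INFO", "/")
    if path in MOVED:
        # Permanent redirect so that users and crawlers reach the new URL.
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    if path in GONE:
        start_response("410 Gone", TEXT)
        return [b"This page has been removed."]
    if path in PAGES:
        start_response("200 OK", TEXT)
        return [PAGES[path].encode("utf-8")]
    start_response("404 Not Found", TEXT)
    return [b"Page not found."]


def status_for(path):
    """Call the app directly and return (status, headers, body) for a path."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app({"PATH_INFO": path}, start_response))
    return captured["status"], captured["headers"], body


for path in ("/old-pricing", "/summer-sale-2019", "/missing"):
    print(path, "->", status_for(path)[0])
```

To try it in a browser, the app can be served locally with `wsgiref.simple_server.make_server` from the standard library.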

Advanced Troubleshooting (Optional)

Diving deeper into 4xx errors often requires a more granular approach than the initial troubleshooting steps. This section provides advanced techniques for identifying and resolving intricate issues, especially those stemming from complex integrations or server-side configurations. Advanced users can utilize these methods to pinpoint the root cause of persistent 4xx errors.

Troubleshooting 4xx errors at a deeper level often involves investigating interactions with third-party services, API configurations, and server-side code. A methodical approach to debugging, including analyzing logs and comparing tools, is crucial to resolve these issues effectively.

Debugging 4xx Errors in Specific Situations

Pinpointing the exact cause of a 4xx error can involve a multi-faceted approach. Careful examination of error messages and associated context is essential for determining the source of the problem. For instance, a 404 error might indicate a missing file or a misconfigured URL, while a 403 error often signifies insufficient permissions. Understanding the specific error code and accompanying details is key to targeted troubleshooting.

Third-Party Integrations and Their Impact

Third-party integrations can introduce complexities that contribute to 4xx errors. Misconfigurations in API keys, authentication mechanisms, or rate limits can all lead to these issues. Thorough review of third-party documentation and careful examination of the integration code are crucial steps in resolving such problems.

Identifying and Fixing API Errors or Misconfigurations

API errors frequently manifest as 4xx errors. Incorrect API calls, invalid parameters, or expired tokens can trigger these errors. Debugging involves verifying the correct syntax and parameters of API requests, ensuring proper authentication, and validating that the API is operational. A common approach is to use API testing tools to simulate various requests and observe the responses. Comparing expected responses with actual responses will help isolate the cause of the error.

Investigating Issues Related to Web Frameworks or Programming Languages

Specific web frameworks or programming languages might have unique error handling mechanisms that influence the appearance of 4xx errors. For example, issues with routing or controller configurations within a framework can lead to unexpected 4xx errors. Carefully reviewing the framework-specific documentation and examining relevant code segments is vital for effective troubleshooting.

Analyzing Server-Side Logs for Complex Issues

Server-side logs are invaluable resources for diagnosing complex 4xx errors. They provide insights into the sequence of events leading to the error, including timestamps, request details, and server responses. Identifying patterns in log entries can help isolate the root cause of the error, particularly when dealing with errors that aren’t immediately obvious from the client-side.
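
Pattern-finding in logs often starts with simple counting. The sketch below groups 4xx responses by status code and top-level site section, so that a cluster such as many 410s under one directory stands out. It assumes the log lines have already been parsed into `(status, path)` pairs; the records and the `summarize_4xx` helper are made up for the example.

```python
from collections import Counter


def summarize_4xx(records):
    """Count 4xx responses by (status, first path segment), most frequent first."""
    counts = Counter()
    for status, path in records:
        if 400 <= status < 500:
            clean_path = path.split("?", 1)[0]  # ignore the query string
            section = "/" + clean_path.lstrip("/").split("/", 1)[0]
            counts[(status, section)] += 1
    return counts.most_common()


# Made-up records standing in for parsed log entries.
records = [
    (410, "/blog/a"),
    (410, "/blog/b"),
    (429, "/api/items"),
    (200, "/blog/c"),
    (400, "/search?q=%"),
]

for (status, section), count in summarize_4xx(records):
    print(status, section, count)
```

For large log volumes, the same grouping is what dedicated log analyzers provide out of the box.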

Comparing Tools for Debugging Server-Side Issues

Different tools offer varying capabilities for debugging server-side issues. The choice of tool often depends on the specific needs and the context of the problem. The following table provides a comparison of common tools, highlighting their strengths and weaknesses.

| Tool | Strengths | Weaknesses |
|---|---|---|
| Web Developer Tools (Chrome DevTools, Firefox DevTools) | Excellent for client-side debugging, but also provide valuable insights into requests and responses, facilitating initial investigations. | Limited for in-depth server-side analysis. |
| Log Analyzers (e.g., Splunk, ELK stack) | Powerful for analyzing large volumes of server logs, identifying patterns and correlations, and providing comprehensive insights. | Requires setting up and configuring the log analyzer, which can be a significant time investment. |
| API Testing Tools (e.g., Postman, Insomnia) | Ideal for testing API requests, simulating different scenarios, and verifying expected responses, crucial for identifying issues in API interactions. | Might not offer deep insights into server-side error handling. |

Last Word

In conclusion, resolving Google Search Console’s “Blocked due to other 4xx issue” status requires a systematic approach. By understanding the different types of 4xx errors, meticulously checking server logs, reviewing your website’s structure, and implementing preventative measures, you can ensure your site remains crawlable and indexed. Remember, consistent monitoring and proactive maintenance are key to avoiding future problems.