Google’s John Mueller recently stated that updating XML sitemap dates doesn’t help SEO. This raises important questions about how sitemaps actually affect search engine crawlers, and whether there are more effective ways to improve your website’s visibility. This article examines how sitemap updates work, explores alternative strategies, and offers actionable advice.

The core issue is that updating your sitemap might seem like a straightforward optimization tactic, but it doesn’t necessarily translate into better search rankings. This post investigates why, considering website performance, alternative optimization strategies, and specific technical considerations.

Impact of Updating XML Sitemap Dates on SEO

XML sitemaps help search engine crawlers discover the URLs on a website and learn when each one last changed. The dates in a sitemap can influence how quickly crawlers revisit new or updated content. Google has clarified, however, that simply updating the dates does not guarantee an SEO boost, so understanding the underlying mechanics is essential for effective website management.

Updating an XML sitemap date signals to search engine crawlers that a page has been modified. At most, this can prompt a recrawl. The date itself does not make the page more relevant or important; any change in how the page ranks comes from the content the crawler finds when it returns. The effect also differs between search engines and depends on the nature of the update and the website’s overall structure.

Impact on Search Engine Crawlers

Search engine crawlers use XML sitemaps as a roadmap for discovering a website’s content. The last-modified date in the sitemap records when a page last changed, which can help crawlers decide which pages to revisit first. How often and how thoroughly a site is crawled still depends on other factors, including the search engine’s own scheduling, the site’s crawl budget, and the overall health of the site.
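To make the roadmap idea concrete, here is a minimal Python sketch of how a crawler *might* use `<lastmod>` to decide which URLs to revisit first. It is a hypothetical illustration and does not describe how Googlebot or any other real crawler schedules crawls. The function name `recrawl_order` and the `last_crawled` record are assumptions. To keep the example simple, it compares dates at day granularity.

```python
from datetime import date
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def recrawl_order(sitemap_xml: str, last_crawled: dict[str, date]) -> list[str]:
    """Hypothetical scheduler: return URLs whose <lastmod> is newer than our
    last crawl (or that were never crawled), most recently modified first."""
    root = ET.fromstring(sitemap_xml)
    candidates: list[tuple[date, str]] = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)
        lastmod_text = url.findtext("sm:lastmod", namespaces=NS)
        if not loc or not lastmod_text:
            continue  # no date hint: a real crawler would fall back to other signals
        # Keep only the YYYY-MM-DD part so full timestamps and plain dates compare alike.
        lastmod = date.fromisoformat(lastmod_text.strip()[:10])
        previous = last_crawled.get(loc)
        if previous is None or lastmod > previous:
            candidates.append((lastmod, loc))
    candidates.sort(reverse=True)  # newest modification first
    return [loc for _, loc in candidates]
```

In this sketch, the date only moves a URL up the queue. Any ranking effect would come from what the crawler finds on the page once it gets there.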

Comparison Across Search Engines

Different search engines may react differently to date updates in XML sitemaps. Google, for example, weighs many factors beyond the timestamp, including the overall quality of the content and the site’s authority. Bing may also take updated dates into account, but probably alongside other signals such as content freshness and popularity. An updated date can prompt a crawler to take another look, but it is only one piece of the puzzle.

Potential Benefits and Drawbacks

Updating XML sitemap dates can benefit a website by prompting a faster crawl of genuinely updated content. This can lead to quicker indexing and better visibility in search results, especially when the updates are significant. Relying on date updates alone, however, is ineffective if the content itself isn’t high quality or if the website has other technical issues.

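Over-reliance on this tactic can be counterproductive. Google uses `lastmod` only when it is consistently accurate, so changing dates on pages that have not changed can lead it to disregard your dates.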

Methods of Updating XML Sitemap Dates

| Method | Description | Pros | Cons |
|---|---|---|---|
| Manual Updates | Manually editing the XML sitemap file and uploading the updated version. | Full control over the process. | Time-consuming for large websites. Risk of manual errors. |
| Automated Tools | Using dedicated software or plugins to update the sitemap automatically. | Efficient for large-scale updates. Reduces the risk of human error. | Can be expensive. Requires careful configuration to avoid unintended consequences. |
| Content Management System (CMS) Plugins | Using CMS plugins that update sitemap dates automatically when content changes. | Seamless integration with the existing website structure. | Plugin functionality and compatibility can vary. |
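Whichever method you use, the safest rule is to change a page’s date only when its content actually changes. Below is a minimal Python sketch of that rule. It assumes a hypothetical `update_lastmod` helper that compares a hash of each page’s content with the hash stored from the previous run. In practice you would probably hash only the main content, because boilerplate such as rendered timestamps or ads would otherwise register as a change.

```python
import hashlib
from datetime import date


def update_lastmod(
    pages: dict[str, str],
    previous: dict[str, tuple[str, str]],
    today: date,
) -> dict[str, tuple[str, str]]:
    """Return {url: (content_hash, lastmod)} for every page.

    `pages` maps URL -> current content; `previous` is the result of the last run.
    A page keeps its old lastmod unless its content hash has changed.
    """
    result: dict[str, tuple[str, str]] = {}
    for url, content in pages.items():
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        old = previous.get(url)
        if old is not None and old[0] == digest:
            result[url] = old  # unchanged content: keep the existing date
        else:
            result[url] = (digest, today.isoformat())  # new or edited page
    return result
```

You could run this from a scheduled job or a CMS hook (both are assumptions). Pages that nobody touched would keep their original dates, which keeps `lastmod` trustworthy.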

Relationship Between Sitemap Updates and Website Performance

Website performance is crucial for SEO and user experience. Keeping XML sitemaps current is a necessary task, but regenerating them too often can consume server resources, especially on large sites that build the sitemap dynamically. Understanding this relationship is essential for maintaining a healthy website.

When sitemap generation competes with regular page requests, it can slow page load times. Slower pages can in turn affect metrics such as bounce rate and time on site, and ultimately rankings.

A balance between keeping the sitemap current and minimizing performance impact is key.
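One common way to strike that balance is to serve a cached copy of the sitemap and rebuild it at most once per time window, rather than on every request. The sketch below is a minimal, single-threaded Python illustration. `CachedSitemap`, the `build` callback, and the one-hour window in the usage note are assumptions. A multi-threaded server would also need a lock around the rebuild.

```python
import time
from typing import Callable, Optional


class CachedSitemap:
    """Serve a cached sitemap body and rebuild it at most once per `ttl_seconds`."""

    def __init__(
        self,
        build: Callable[[], str],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._build = build          # expensive function that renders the sitemap XML
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the behavior can be tested
        self._body: Optional[str] = None
        self._built_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._body is None or now - self._built_at >= self._ttl:
            self._body = self._build()  # rebuild only when missing or stale
            self._built_at = now
        return self._body
```

For example, `CachedSitemap(render_sitemap, ttl_seconds=3600)` would regenerate the file at most once an hour, however often crawlers request it. Here `render_sitemap` stands for your own hypothetical rendering function.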

Correlation with Website Performance Metrics

Sitemap updates may seem purely technical, but they can indirectly affect website performance metrics, which reflect how users interact with the site and how search engines perceive it. The correlation is not always straightforward, but a pattern often emerges.


Potential Consequences of Frequent Updates

Frequent XML sitemap updates can have knock-on effects on website speed and resource usage. The server has to process the updated data, which can compete with other requests and increase the load on the database. A sudden burst of sitemap regeneration might temporarily overload the server and delay responses to users. This can show up as slow-loading pages, timeouts, and longer server response times.

Ultimately, these issues negatively impact the user experience.

Examples of Negative Impact on User Experience

Frequent XML sitemap updates can translate into a worse user experience. Users might encounter slow-loading pages, which leads to frustration and lower engagement. Heavy server load can also cause temporary downtime or slow response times.

Imagine a user browsing a product page while the server is regenerating a large sitemap. If both processes compete for the same resources, the page may load more slowly.

The user might lose interest and abandon the page, impacting conversion rates. In the long run, this can lead to a decline in overall website performance.


Alternatives to Updating XML Sitemap Dates

Sometimes updating your XML sitemap dates doesn’t produce the desired results, and it can even cause problems. Fortunately, there are effective ways to improve your site’s crawlability and indexation without changing your sitemap’s timestamps. These strategies help Googlebot discover, understand, and properly index your content.

Crawlability and indexation are crucial for visibility. The methods below help Googlebot explore your site’s architecture and content effectively, which leads to a more accurate and up-to-date index of your pages.

Optimizing Content for Crawlability

Ensuring your content is easily discoverable and understandable by search engine crawlers is fundamental. High-quality content, coupled with proper technical optimization, can significantly impact search engine visibility.

- Creating High-Quality Content: Focus on providing valuable, comprehensive, and engaging content that satisfies user intent. Content that addresses user queries and offers unique insights will generally perform better in search results.

- Optimizing Page Structure: A well-structured page helps search engine crawlers navigate and understand the content. Use clear headings, organized paragraphs, and relevant internal links to improve the site’s architecture.

- Utilizing Internal Linking: Strategic internal linking connects related pages, helping search engines discover and understand the relationships between different pieces of content on your site. This creates a strong internal website structure.
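Internal linking can be checked programmatically. The Python sketch below assumes you have already extracted the internal link graph, for example with a site crawler. It computes each page’s click depth from the home page with a breadth-first search, then lists sitemap URLs that no internal link reaches. The function names are illustrative and do not come from any particular tool.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the home page over internal links.

    `links` maps each page to the internal pages it links to.
    Returns {page: number of clicks from home} for every reachable page.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphan_pages(sitemap_urls: set[str], links: dict[str, list[str]], home: str) -> set[str]:
    """Pages listed in the sitemap that no chain of internal links reaches."""
    return sitemap_urls - set(click_depths(links, home))
```

Pages that appear only in the sitemap (orphans), or that sit many clicks deep, are good candidates for new internal links.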

Technical Enhancements

Technical SEO focuses on improving website infrastructure to enhance crawlability and indexation.

- Improving Website Performance: Fast loading times are essential. Optimize images, leverage browser caching, and choose a reliable hosting provider to reduce page load times. A faster website generally improves user experience and search engine rankings.

- Mobile-Friendliness: Ensure your website is responsive and functions seamlessly across various devices. A mobile-friendly design is crucial for user experience and SEO.

- Implementing Structured Data Markup: Use schema markup to give search engines a structured understanding of your content. This can make pages eligible for rich results in search, which may increase click-through rates.
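Structured data is usually embedded as JSON-LD. Below is a minimal Python sketch that renders a schema.org `Article` block. The field values in the test are placeholders. The properties you actually need depend on the content type and on each search engine’s documentation. Escaping `</` stops a headline that contains `</script>` from closing the tag early.

```python
import json


def article_jsonld(headline: str, published: str, modified: str, author: str) -> str:
    """Render a schema.org Article as a JSON-LD <script> tag (dates as ISO 8601 strings)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published,
        "dateModified": modified,
        "author": {"@type": "Person", "name": author},
    }
    # "<\/" is a valid JSON escape for "</", so the payload cannot end the script tag.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```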

Utilizing Tools for Site Indexing

Various tools can aid in maintaining proper site indexing.

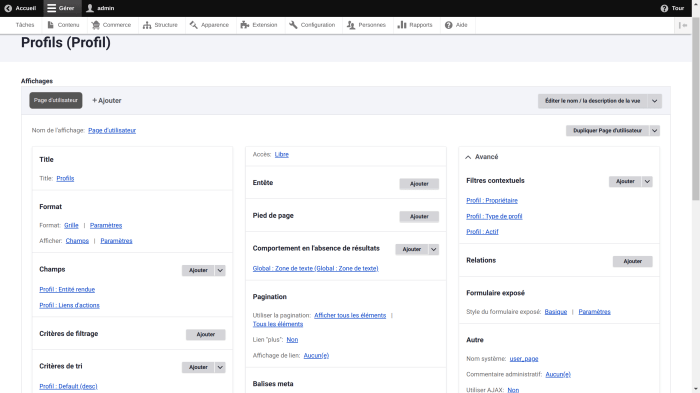

- Google Search Console: Monitor crawl errors, indexation issues, and other vital aspects of your site’s performance in Google’s search results.

- Website Crawlers (e.g., Screaming Frog): These tools can crawl your website to identify potential issues, such as broken links, missing meta descriptions, and other technical problems.

- Sitemaps (Beyond XML): HTML sitemaps, while simpler than XML sitemaps, can also guide crawlers and visitors through your website and make its structure easier to understand.
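An HTML sitemap can be as simple as a list of links. Below is a minimal Python sketch that assumes you already have each page’s URL and title. The helper name `html_sitemap` is hypothetical. `html.escape` handles characters such as `&` and `<` in URLs and titles.

```python
from html import escape


def html_sitemap(pages: list[tuple[str, str]]) -> str:
    """Render (url, title) pairs as an alphabetical HTML list of links."""
    items = [
        f'  <li><a href="{escape(url)}">{escape(title)}</a></li>'
        for url, title in sorted(pages, key=lambda page: page[1].lower())
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"
```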

Impact of Alternatives on Site Visibility

The table below illustrates the potential impact of alternative strategies on website visibility.

| Method | Description | Impact |
|---|---|---|
| High-Quality Content | Creating valuable and engaging content | Improved search rankings, higher click-through rates |
| Optimized Page Structure | Clear headings, organized paragraphs | Enhanced crawlability, better understanding of content |
| Internal Linking | Connecting related pages | Improved site architecture, discoverability of content |
| Website Performance Optimization | Fast loading times, reliable hosting | Better user experience, higher rankings |
| Mobile-Friendliness | Responsive design | Improved user experience, higher rankings |
| Structured Data Markup | Schema markup implementation | Rich snippets, higher click-through rates |

Practical Examples of Sitemap Updates and Results

Website owners often question how effective it really is to update XML sitemap dates. The practice is beneficial in theory, but its impact on search rankings isn’t always straightforward. Real-world examples illustrate the nuanced relationship between sitemap updates and SEO performance. Sometimes updates fail to deliver the desired results; at other times they prove instrumental. Understanding these contrasting scenarios is crucial for an effective SEO strategy.

Case Study: A Website Where Sitemap Updates Failed to Improve SEO

This case study examines a mid-sized e-commerce website selling gardening tools. The site had a technically valid XML sitemap, but the sitemap was not organized in a way that helped Googlebot. The team updated the sitemap dates to signal recent content changes, but organic search rankings did not improve significantly.

Technical Aspects: The sitemap contained a large number of product pages with only minor updates to their descriptions.


Although the sitemap was technically valid, it lacked a clear structure that would highlight important product updates. It also frequently contained errors, so search engines could not fully process it. In addition, the website lacked high-quality backlinks, a key ranking factor.

Why the Update Failed: Updating the sitemap dates without addressing fundamental indexing issues proved insufficient. The sitemap’s structural flaws made it harder for Google to crawl and index the updated content.

The lack of quality backlinks further compounded the problem, as it signaled to Google that the website did not have sufficient authority to warrant high rankings. Ultimately, the sitemap updates were overshadowed by more critical technical concerns.


Case Study: A Successful Sitemap Update

A successful sitemap update, by contrast, was part of a comprehensive effort to improve SEO. The website, a travel blog, implemented several strategies beyond updating sitemap dates. The focus was on creating high-quality, engaging content optimized for relevant keywords. The blog’s sitemap was reorganized to emphasize new and updated content, and the site added schema markup to help search engines understand its content.

Technical Aspects: The blog’s XML sitemap was well structured and easy to crawl.

A dedicated tool added new articles to the sitemap automatically, which kept the sitemap current. The blog’s content was updated regularly with new travel destinations and more detailed coverage of existing ones. Crucially, the website had a strong social media presence and had earned many high-quality backlinks.

Key Factors for Success: The sitemap alone did not account for the blog’s success.

Content quality, site structure, and backlink profile all played a critical role. The comprehensive approach ensured that Google recognized the website as a valuable resource, contributing to improved rankings.

| Metric | Before | After |
|---|---|---|
| Organic Traffic (monthly) | 10,000 | 15,000 |
| Average Ranking Position (keyword: “best European vacations”) | 15 | 8 |
| Bounce Rate | 55% | 40% |

Technical Considerations for XML Sitemap Updates

XML sitemaps help search engine crawlers understand the structure and content of your website. Properly formatted and validated sitemaps support efficient crawling and indexing. Updating these files still requires careful attention to technical details, starting with the structure of the file itself.

XML sitemaps follow a structure defined by the sitemaps.org protocol and its XML schema. The root `urlset` element contains one `url` element per page on your website. Each `url` element contains a required `loc` child (the page’s URL) plus optional `lastmod` (last modification date), `changefreq` (how often the page is expected to change), and `priority` (relative importance within the site) children. Google’s documentation states that it ignores `changefreq` and `priority` and uses `lastmod` only when it is consistently and verifiably accurate. That is why changing dates without changing content does not help. If a sitemap does not follow this format, search engine crawlers can run into errors when they parse it.
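Python’s standard library is enough to generate this structure. The sketch below uses a hypothetical `build_sitemap` helper. It writes only `loc` and, when a date is known, `lastmod`, and it leaves out `changefreq` and `priority` because Google ignores them. If you target search engines that may use those elements, you would add them. ElementTree escapes characters such as `&` in URLs automatically. Save the returned string as UTF-8 to match the XML declaration.

```python
import xml.etree.ElementTree as ET
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, Optional[str]]]) -> str:
    """Build a <urlset> document from (absolute URL, W3C date or None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)  # default namespace on the root
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # '&' becomes '&amp;' on serialization
        if lastmod is not None:  # omit lastmod rather than guess a date
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```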

Identifying and Fixing Errors in XML Sitemaps

Identifying errors in your XML sitemap is critical. Errors can stem from invalid XML syntax, missing required elements, or invalid values such as malformed dates. Validation tools can detect these issues.
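Some of these checks are easy to automate. The Python sketch below uses a hypothetical `sitemap_errors` helper. It flags malformed XML, a wrong root element, missing or relative `loc` values, badly formatted `lastmod` dates, and files over the protocol’s 50,000-URL limit. It is no substitute for full schema validation, and it does not check the 50 MB uncompressed size limit. Its date pattern is deliberately stricter than the W3C Datetime format, which also allows year-only and year-month values.

```python
import re
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit in the sitemaps.org protocol
# YYYY-MM-DD, optionally followed by a time and a timezone designator (Z or +hh:mm).
W3C_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}(T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:\d{2}))?$")


def sitemap_errors(xml_text: str) -> list[str]:
    """Return human-readable problems found in a <urlset> sitemap (empty if none)."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    errors: list[str] = []
    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        errors.append(f"unexpected root element {root.tag!r}")
    urls = root.findall(f"{{{SITEMAP_NS}}}url")
    if len(urls) > MAX_URLS:
        errors.append(f"{len(urls)} URLs exceeds the {MAX_URLS} per-file limit")
    for i, url in enumerate(urls, start=1):
        loc = (url.findtext(f"{{{SITEMAP_NS}}}loc") or "").strip()
        if not loc:
            errors.append(f"url #{i}: missing <loc>")
        elif not loc.startswith(("http://", "https://")):
            errors.append(f"url #{i}: <loc> is not an absolute URL: {loc}")
        lastmod = url.findtext(f"{{{SITEMAP_NS}}}lastmod")
        if lastmod is not None and not W3C_DATE.match(lastmod.strip()):
            errors.append(f"url #{i}: invalid <lastmod> {lastmod!r}")
    return errors
```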

Importance of Proper Formatting and Validation

Validating your XML sitemap checks it against the sitemap protocol’s XML schema. Proper formatting and validation prevent errors that could stop search engine crawlers from reading and processing the sitemap correctly. Formatting inconsistencies can cause parsing problems, and the crawler may then miss important information.

Methods for Validating XML Sitemaps

Various tools can validate your sitemaps and catch errors before they reduce the sitemap’s effectiveness.

| Tool | Description | Features |
|---|---|---|
| XML Sitemaps Generator | A tool that generates XML sitemaps based on your website’s structure. Often part of broader website management tools. | Ease of use, integration with other website tools. Can be used to create, modify, and validate sitemaps. |
| Online XML Validators | Tools that validate the syntax and structure of your XML sitemap against the XML schema. | Quick and easy validation. Many are free and publicly available. |
| XMLSpy or similar dedicated XML editors | Powerful software designed specifically for working with XML documents. | Comprehensive validation tools, advanced editing capabilities, and schema support. |
| W3C XML Validator | A highly reputable and comprehensive XML validator. | Rigorous validation against the XML schema. Excellent for detecting even subtle errors. |

Understanding Google’s Algorithm and Sitemap Processing

Google’s search algorithm is a complex system designed to understand and rank web pages based on numerous factors. It evolves continuously, incorporating new signals and techniques to improve search results. XML sitemaps are a helpful tool for webmasters, but they are just one piece of the puzzle. Understanding how Google processes them, and how that relates to broader algorithm changes, is crucial for effective SEO.

Google treats an XML sitemap as a guide to the structure and content of a website.

Sitemaps help Google discover and crawl pages, but they are not a ranking signal in themselves. Ranking depends on factors well beyond the sitemap data, including the actual content of the pages, the user experience, and the overall health of the website. Page loading speed, mobile-friendliness, and security all play a part.

Overview of Google’s Search Algorithm

Google’s ranking systems weigh hundreds of signals when evaluating a page. They assess content quality, user experience, and technical infrastructure, among other aspects of a website. Google updates these systems constantly, adding new signals to improve the relevance and quality of results as user needs and the web change.

Impact of Algorithm Updates on Sitemap Processing

Algorithm updates can affect sites with sitemaps in direct or indirect ways. Updates focused on content quality change how Google evaluates the pages a sitemap lists. Technical changes could affect how quickly or efficiently sitemaps are processed. The specific impact depends on the nature of the update and the structure of the site.

Impact of Different Algorithm Updates on Sitemap Processing

| Algorithm Update | Impact on Sitemaps |
|---|---|
| Core Web Vitals (Page Experience) Update (2021) | This update focused on page experience. Sitemap processing itself was not affected, but pages listed in a sitemap could perform worse if they failed the page experience criteria, for example because of server issues or inefficient code. A sitemap cannot compensate for poor page experience. |
| Panda Update (2011) | This update focused on content quality. Listing low-quality pages in a sitemap does not protect them, because Panda evaluated the content itself and favored high-quality pages. |
| Penguin Update (2012) | This update targeted unnatural link-building practices. A sitemap lists your own URLs, not the links pointing to them, so it cannot offset a spammy backlink profile. |
| Mobile-First Indexing Update (2018) | Google began predominantly using the mobile version of pages for indexing and ranking. The URLs in a sitemap are evaluated on their mobile content, so sites that are not mobile-friendly may perform worse regardless of the sitemap. |

Conclusion

In conclusion, Google’s John Mueller has made clear that SEO improvement requires looking beyond simple sitemap date updates. Website performance, alternative optimization techniques, and technical sitemap integrity are what drive the desired search results. The strategies in this article can help website owners and SEO professionals improve their sites’ visibility without relying on sitemap date adjustments.