The way Google scans the web is a fascinating process. Examining it reveals the mechanisms behind how the world’s most popular search engine indexes and ranks web pages. This journey covers Google’s crawling and indexing methods and the data structures used to store and organize content. We’ll also explore its ranking algorithms, considering factors like content quality, backlinks, and user experience.

Beyond the technical aspects, we’ll investigate the user experience (UX) factors, security measures, and privacy policies that shape the Google search experience.

From the initial crawl to the final search result, the way Google scans involves a complex interplay of technology and user needs. We’ll walk through the steps involved, from discovering web pages to presenting them in ranked order, and show how Google’s algorithms work to deliver relevant results. We’ll also look at the security and privacy measures Google employs to protect user data and keep the online environment safe.

Indexing Processes

Google’s search engine is a marvel of engineering, constantly evolving to keep pace with the ever-growing web. A crucial component of this engine is the indexing process, which determines what content is available to appear in search results. This process is an interplay of crawling, processing, and storage mechanisms designed to provide relevant and timely information. The heart of Google’s search engine is its ability to understand and categorize the vast expanse of online content.

This understanding is achieved through a sophisticated process of crawling, indexing, and ranking. By analyzing the content, structure, and links of web pages, Google’s algorithms can identify the most relevant information for a given search query.

Google’s indexing process is not just about crawling pages; it’s about understanding the connections between them.

Ultimately, Google’s scanning methodology aims to provide the most relevant and user-friendly results possible.

Crawling Mechanisms

Google’s web crawlers, often called “spiders,” are automated programs that traverse the web, following links from one page to another. This process is essential for discovering new content and updating existing information in the index. These crawlers don’t just visit every page; they prioritize pages based on factors such as their popularity, recency, and relevance to previously indexed content.

This prioritization is a key aspect of efficiency, preventing the crawler from becoming bogged down in less important content.
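The prioritization described above can be sketched as a priority-ordered crawl frontier. This is a minimal illustration, not Googlebot’s implementation. The in-memory `link_graph` stands in for fetching and parsing real pages. The `priority` scores, which might combine popularity, recency, and relevance, are hypothetical inputs. The function name `crawl_order` is also illustrative.

```python
import heapq


def crawl_order(link_graph, seeds, priority, max_pages):
    """Return URLs in the order a priority-based crawler would fetch them.

    link_graph: dict mapping URL -> list of linked URLs (stands in for fetching a page).
    priority:   dict mapping URL -> hypothetical score; higher means fetch sooner.
    """
    frontier = []  # min-heap of (negated priority, url), so the highest score pops first
    seen = set()   # URLs already queued, so each page is scheduled only once
    for url in seeds:
        heapq.heappush(frontier, (-priority.get(url, 0.0), url))
        seen.add(url)

    order = []
    while frontier and len(order) < max_pages:
        _, url = heapq.heappop(frontier)
        order.append(url)  # "fetch" the page
        for link in link_graph.get(url, []):  # follow its outgoing links
            if link not in seen:
                seen.add(link)
                heapq.heappush(frontier, (-priority.get(link, 0.0), link))
    return order


graph = {"a": ["b", "c"], "b": ["d"], "c": ["d", "e"], "d": [], "e": []}
priorities = {"a": 1.0, "b": 0.2, "c": 0.9, "d": 0.5, "e": 0.1}
print(crawl_order(graph, ["a"], priorities, max_pages=3))  # ['a', 'c', 'd']
```

Low-priority pages such as `b` and `e` wait in the queue. As a result, a limited crawl budget (`max_pages`) is spent on the highest-scoring pages first.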

Indexing Methods

Google uses a multifaceted approach to categorize and organize web pages. Beyond simply copying the content, the process analyzes the structure of the page, including headings, meta tags, and internal links. This analysis allows Google to understand the context and meaning of the information presented. Crucially, Google also considers the links pointing to a particular page from other websites, as this signifies the page’s importance and authority within its subject area.
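Python’s standard-library `html.parser` can approximate the structural analysis described above. The sketch below pulls out the title, the `h1` to `h3` headings, the meta description, and outgoing links from one page. It illustrates the idea rather than Google’s parser, and the class name `PageStructureParser` is hypothetical.

```python
from html.parser import HTMLParser


class PageStructureParser(HTMLParser):
    """Collect the title, headings, meta description, and links of one HTML page."""

    CAPTURED = ("title", "h1", "h2", "h3")

    def __init__(self):
        super().__init__()
        self.title = ""
        self.headings = []          # (tag, text) pairs in document order
        self.meta_description = None
        self.links = []             # raw href values, to be resolved and crawled later
        self._current = None        # tag whose text is being captured
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in self.CAPTURED:
            self._current, self._buffer = tag, []
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            text = "".join(self._buffer).strip()
            if tag == "title":
                self.title = text
            else:
                self.headings.append((tag, text))
            self._current = None


html = """<html><head><title>Example</title>
<meta name="description" content="A demo page"></head>
<body><h1>Main topic</h1><p>See <a href="/about">about</a>.</p>
<h2>Details</h2><a href="https://example.org/">external</a></body></html>"""
parser = PageStructureParser()
parser.feed(html)
print(parser.title, parser.headings, parser.meta_description, parser.links)
```

A crawler would feed the collected `links` back into its queue. Counting links that point *to* a page from many other sites supplies the raw material for backlink signals.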

Data Structures for Indexed Content

Storing and organizing the vast amount of indexed data requires specialized data structures. Google employs complex algorithms and data structures to efficiently retrieve relevant information. This includes techniques like inverted indexes, which allow for rapid retrieval of pages containing specific words or phrases. These sophisticated data structures are fundamental to the speed and efficiency of Google’s search results.
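An inverted index maps each term to the documents, and the positions within them, where the term occurs. A query therefore only touches the posting lists for its own terms instead of scanning every page. The toy version below supports both all-words and exact-phrase lookups. It uses a naive tokenizer and hypothetical function names. Production indexes add compression, sharding, and ranking on top.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase and split on anything that is not a letter or digit."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs):
    """docs: dict doc_id -> text. Returns term -> {doc_id: [positions]}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for pos, term in enumerate(tokenize(text)):
            index[term].setdefault(doc_id, []).append(pos)
    return index


def search_all_terms(index, query):
    """Boolean AND: ids of documents containing every query term."""
    terms = tokenize(query)
    if not terms:
        return []
    postings = [set(index.get(term, {})) for term in terms]
    return sorted(set.intersection(*postings))


def search_phrase(index, phrase):
    """Documents where the query terms appear consecutively, using stored positions."""
    terms = tokenize(phrase)
    hits = []
    for doc_id in search_all_terms(index, phrase):
        starts = index[terms[0]][doc_id]
        if any(all(start + i in index[term][doc_id] for i, term in enumerate(terms))
               for start in starts):
            hits.append(doc_id)
    return hits


docs = {
    1: "Google crawls the web",
    2: "The web index stores pages",
    3: "Crawlers follow links on the web",
}
index = build_inverted_index(docs)
print(search_all_terms(index, "web"))        # [1, 2, 3]
print(search_all_terms(index, "web index"))  # [2]
print(search_phrase(index, "the web"))       # [1, 2, 3]
print(search_phrase(index, "web the"))       # []
```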

Comparison with Other Search Engines

While other search engines utilize similar crawling and indexing techniques, Google often distinguishes itself through its advanced algorithms and data structures. The sheer scale of Google’s index, coupled with its sophisticated ranking algorithms, contributes to its ability to provide highly relevant results compared to competitors. Factors such as the quantity and quality of data collected, the sophistication of ranking algorithms, and the speed of retrieval are key differentiators.

Steps in Web Page Scanning

| Step | Description | Example | Method |
|---|---|---|---|
| Crawling | Googlebot (Google’s crawler) follows hyperlinks on web pages, discovering new or updated content. | Following a link from Wikipedia to an article on the history of the internet. | Following links, respecting robots.txt |
| Indexing | Google’s indexing systems extract and analyze the fetched content, including text, images, and other metadata, and store it in a vast database. | Analyzing the text, images, and metadata of an e-commerce product page. | Parsing HTML, extracting data, creating a document record |
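Python’s standard-library `urllib.robotparser` can illustrate the robots.txt part of the crawling step. The rules below are a made-up example. Python’s parser handles basic `User-agent`/`Disallow` groups but not every extension Googlebot supports, such as `*` and `$` wildcards in paths, so treat this as an approximation. The helper name `is_allowed` is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /drafts/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given crawler may fetch url under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # rules supplied as text, no network access
    return parser.can_fetch(user_agent, url)


for agent, url in [
    ("Googlebot", "https://example.com/drafts/post"),
    ("Googlebot", "https://example.com/private/page"),
    ("ExampleBot", "https://example.com/private/page"),
]:
    print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

A crawler obeys only the most specific group that matches its name. Here that keeps Googlebot out of `/drafts/` but not `/private/`, while any other crawler falls back to the `*` group.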

Ranking Algorithms

Google’s search ranking algorithms are a complex web of factors, constantly evolving to provide the most relevant and helpful results to users. These algorithms aren’t static; they adapt and improve based on user behavior, search trends, and the ever-changing landscape of the web. Understanding these algorithms is crucial for anyone wanting to optimize their website for search engines and improve visibility. The fundamental principle behind Google’s ranking algorithms is to deliver search results that satisfy user intent.

This means going beyond simply matching keywords to finding pages that accurately answer the user’s query, provide valuable information, and offer a positive user experience. This approach prioritizes content quality, relevance, and user engagement over simple keyword optimization.

Factors Influencing Search Results

Google considers a multitude of factors when determining a page’s ranking. These factors interact and influence each other, creating a complex system that is constantly refined. Understanding the importance of each factor is vital for creating successful SEO strategies.

- Content Quality: High-quality content is crucial. This means providing comprehensive, well-researched, and accurate information that satisfies the user’s query. Content should be original, well-written, and engaging. It should also be relevant to the specific search query.

- Backlinks: Backlinks from reputable websites act as endorsements for a page’s credibility and trustworthiness. The quality and quantity of backlinks are significant indicators of a page’s authority and relevance within a specific niche. Google analyzes the linking websites’ authority, content relevance, and other factors to assess the link’s value.

- User Experience (UX): A positive user experience is a significant ranking factor. This includes page loading speed, mobile-friendliness, ease of navigation, and overall design. A website that is easy to use and navigate, loads quickly, and is accessible on various devices tends to rank higher.
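The idea that backlinks act as endorsements was first formalized in PageRank, the link-analysis algorithm described by Google’s founders in the late 1990s. The power-iteration sketch below implements that original formulation on a tiny hypothetical link graph, using the commonly cited damping factor of 0.85. Google’s current ranking combines link signals with many others and does not publish the details.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Original PageRank via power iteration.

    links: dict mapping page -> list of pages it links to.
    Returns dict page -> score; scores sum to 1.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # every page receives the "random jump" share
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # a page splits its vote evenly among the pages it links to
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # dangling page (no outlinks): spread its rank over all pages
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# "c" is linked from both "a" and "b"; nothing links to "b".
links = {"a": ["c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(links)
print(sorted(ranks, key=ranks.get, reverse=True))  # ['c', 'a', 'b']
```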

Evolution of Ranking Algorithms

Google’s ranking algorithms have undergone significant changes over time. Early algorithms focused primarily on keyword matching, but modern algorithms are far more sophisticated. They now consider a broader range of factors, including user behavior, search intent, and context. This evolution reflects Google’s commitment to delivering increasingly relevant and user-friendly search results.

Interaction of Ranking Factors

The different ranking factors don’t operate in isolation. They interact and influence each other to determine a page’s position in search results. A page with excellent content but few backlinks might rank lower than a page with decent content but numerous high-quality backlinks.

| Ranking Factor | Description | Importance | Example |
|---|---|---|---|
| Content Quality | Well-researched, comprehensive, and original content directly addressing the user’s query. | High – Provides value to the user and demonstrates expertise. | A blog post meticulously explaining a complex technical topic, backed by data and cited sources. |
| Backlinks | Links from reputable and relevant websites acting as endorsements. | Medium-High – Demonstrates credibility and authority. | A link from a respected industry publication to a product review. |
| User Experience (UX) | Ease of navigation, page speed, mobile-friendliness, and visual appeal. | High – Impacts user engagement and satisfaction, directly influencing ranking. | A website with a clear structure, fast loading times, and responsive design. |
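A toy linear score can illustrate how these factors interact. Google does not publish how it weights or combines its signals, so the weights, the 0-to-1 signal values, and the class and function names below are purely hypothetical. The point is only that a strong backlink profile can outweigh better content, as in the interaction example earlier in this section.

```python
from dataclasses import dataclass

# Hypothetical weights: the real combination of signals is not public.
WEIGHTS = {"content": 0.40, "backlinks": 0.35, "ux": 0.25}


@dataclass
class PageSignals:
    url: str
    content: float    # content quality, normalized to 0..1
    backlinks: float  # backlink strength, normalized to 0..1
    ux: float         # user-experience score, normalized to 0..1


def score(page, weights=WEIGHTS):
    """Weighted sum of the three signals."""
    return (weights["content"] * page.content
            + weights["backlinks"] * page.backlinks
            + weights["ux"] * page.ux)


def rank_pages(pages):
    """Highest score first."""
    return sorted(pages, key=score, reverse=True)


excellent_content = PageSignals("https://example.com/guide", content=0.95, backlinks=0.10, ux=0.7)
well_linked = PageSignals("https://example.org/review", content=0.60, backlinks=0.90, ux=0.7)
for page in rank_pages([excellent_content, well_linked]):
    print(page.url, round(score(page), 3))
```

With these made-up numbers, the well-linked page scores 0.73 against 0.59. Changing `WEIGHTS` can flip the order, which is why no single factor can be optimized in isolation.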

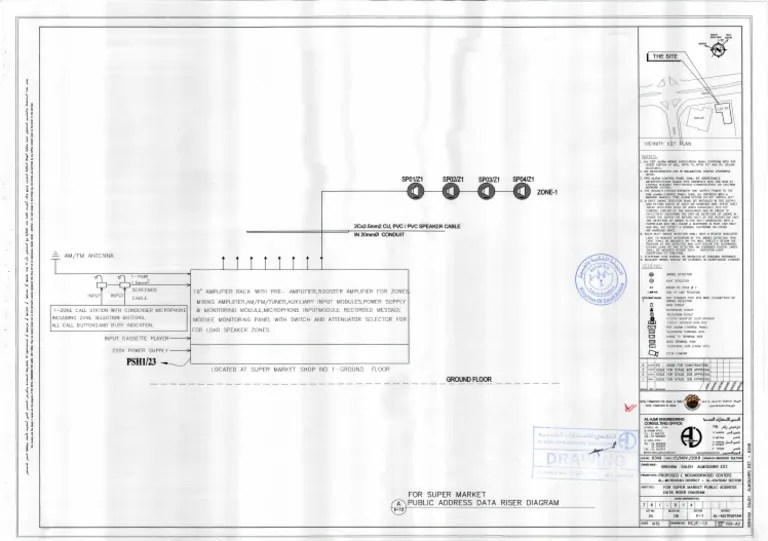

Technical Aspects of Scanning: The Way Google Scans

Google’s search engine is a complex system, and understanding its technical underpinnings is crucial for appreciating its power and scale. This section dives into the infrastructure that powers Google’s scanning processes, from the initial crawl to the final indexing. The sheer volume of data and the speed at which it’s processed are truly remarkable. The scanning process isn’t a simple matter of passively waiting for data; it requires a sophisticated, distributed system.

This involves a vast network of interconnected components, each playing a vital role in the overall function. This intricate system ensures that Google’s search results are accurate, comprehensive, and readily available.

Web Crawlers

Web crawlers, also known as spiders, are the automated agents that traverse the World Wide Web. They follow links from one webpage to another, discovering new content and updating existing information. This constant exploration is essential for maintaining a comprehensive index of the internet. Crawlers use sophisticated algorithms to prioritize websites, ensuring that frequently updated sites and those with significant content receive more attention.
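An adaptive revisit interval is one simple way to give frequently updated sites more attention. The interval shortens when a page has changed since the last fetch and lengthens when it has not. The policy, the bounds, and the function names below are assumptions for illustration, not Google’s documented scheduler.

```python
import hashlib

MIN_INTERVAL_HOURS = 1.0        # hypothetical lower bound
MAX_INTERVAL_HOURS = 24.0 * 30  # hypothetical upper bound (about a month)


def fingerprint(content: str) -> str:
    """Hash of the page body, used to detect whether it changed."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def next_interval(current_hours, previous_fp, new_fp):
    """Halve the revisit interval after a change, double it otherwise (within bounds)."""
    if new_fp != previous_fp:
        return max(MIN_INTERVAL_HOURS, current_hours / 2)
    return min(MAX_INTERVAL_HOURS, current_hours * 2)


# Simulate five visits to a page whose body changes on the 2nd and 3rd visits only.
versions = ["v1", "v2", "v3", "v3", "v3"]
interval = 24.0
previous = fingerprint(versions[0])
for body in versions[1:]:
    current = fingerprint(body)
    interval = next_interval(interval, previous, current)
    previous = current
    print(interval)  # 12.0, 6.0, 12.0, 24.0 on successive lines
```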

Servers and Data Centers

Google’s servers, located in massive data centers worldwide, handle the immense volume of data generated during the scanning process. These data centers house clusters of servers that process requests, store information, and facilitate the interactions between crawlers, databases, and other components. The distributed nature of these servers allows for fault tolerance and high availability. The placement of data centers in strategic locations minimizes latency for users globally.

Architecture of Google’s Search Infrastructure

Google’s search infrastructure is a complex, layered system. At the core, there are distributed databases that store the collected information. This data is then processed by sophisticated algorithms that determine relevance and rank results. The architecture is designed to handle enormous volumes of data with minimal latency, ensuring quick response times for user queries. Scalability is a key design principle, allowing the system to adapt to growth and changing user demands.
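Document partitioning is a common way to spread an index over many machines. Each shard indexes a subset of pages, chosen by hashing the URL, and a query fans out to every shard before the partial results are merged. This is a generic sketch of that pattern. The shard count, the hash choice, and the names `shard_for` and `ShardedIndex` are assumptions, not a description of Google’s internal systems.

```python
import hashlib
from collections import defaultdict

NUM_SHARDS = 4  # hypothetical; real clusters use far more


def shard_for(url, num_shards=NUM_SHARDS):
    """Stable shard assignment: the same URL always maps to the same shard."""
    digest = hashlib.sha256(url.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedIndex:
    def __init__(self, num_shards=NUM_SHARDS):
        self.num_shards = num_shards
        # each shard is its own term -> set-of-URLs index (one per server in practice)
        self.shards = [defaultdict(set) for _ in range(num_shards)]

    def add(self, url, text):
        shard = self.shards[shard_for(url, self.num_shards)]
        for term in text.lower().split():
            shard[term].add(url)

    def query(self, term):
        """Fan out to every shard, then merge the partial results."""
        results = set()
        for shard in self.shards:
            results |= shard.get(term.lower(), set())
        return sorted(results)


index = ShardedIndex()
index.add("https://a.example/1", "web crawler basics")
index.add("https://b.example/2", "Crawler politeness rules")
index.add("https://c.example/3", "data center design")
print(index.query("crawler"))
```

In a real deployment each shard would live on its own servers and be replicated, so queries can still be answered when a machine fails.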

Flow of Data During Scanning

(A diagram illustrating the flow of data during the scanning process, depicting the interaction between crawlers, servers, data centers, and databases. The diagram would show crawlers discovering new URLs, servers processing requests, data being stored in distributed databases, and the retrieval of data for search results.)

Google’s search algorithm is a complex beast, constantly evolving to provide the most relevant results. Not every detail of how it scans web pages is public, but the overall process is fascinating to consider.

Understanding the nuances of how Google scans information is crucial for optimizing your website’s visibility.

Types of Data Collected During Scanning

| Data Type | Description | Source | Use |

|---|---|---|---|

| URL | A Uniform Resource Locator, identifying a specific resource on the web. | Webpages | Used to identify and locate webpages for indexing. |
| HTML content | HyperText Markup Language, the code that structures webpages. | Webpages | Extracted to understand the content and structure of the webpage. |
| Text | The actual words and phrases on the webpage. | Webpages | Used for indexing and determining relevance to user queries. |
| Images | Visual content on the webpage. | Webpages | Can be indexed for image searches and aid in understanding webpage content. |
| Metadata | Data about the webpage, such as author, title, description. | Webpages | Used to enhance indexing and provide context for search results. |
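The same page is often reachable through URLs that differ only in letter case, a default port, or a `#fragment`, so crawlers typically normalize URLs before using them as identifiers. The function below applies a few common normalization rules using Python’s `urllib.parse`. It is a simplified assumption with a hypothetical name, and Google’s own canonicalization is more extensive. Paths are left as-is because they can be case-sensitive.

```python
from urllib.parse import urlsplit, urlunsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize_url(url):
    """Lowercase scheme and host, drop default ports and fragments, ensure a path."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    if ":" in host:  # IPv6 literal: restore the brackets that urlsplit strips
        host = f"[{host}]"
    if parts.port is not None and parts.port != DEFAULT_PORTS.get(scheme):
        host = f"{host}:{parts.port}"
    path = parts.path or "/"
    # the fragment only points inside the page, so it is discarded
    return urlunsplit((scheme, host, path, parts.query, ""))


print(normalize_url("HTTPS://Example.COM:443/Docs?page=2#reviews"))  # https://example.com/Docs?page=2
```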

User Experience and Scanning

Google’s search algorithm isn’t just about finding relevant keywords; it’s about delivering a positive user experience. This aspect is woven into every stage of the scanning process, from initial crawling to final ranking. Understanding how user experience factors influence search results is vital for website owners seeking to improve their visibility and attract more users. Google considers how users interact with websites to ensure search results are not only accurate but also provide a seamless and satisfying experience.

This involves a complex interplay of website usability, accessibility, and user behavior patterns, all analyzed and incorporated into the ranking process. The ultimate goal is to present users with the most relevant and user-friendly content possible.

User Interactions and Website Usability

User interactions are a direct reflection of a website’s effectiveness and appeal. Google may analyze various user actions, such as click-through rates, bounce rates, time spent on pages, and the depth of exploration within a website. These metrics provide valuable insights into how users engage with the content and structure. Websites that are easy to navigate, with clear and concise information, tend to have higher user engagement, which is a strong signal for Google’s ranking algorithms.

A website with intuitive navigation and well-organized content fosters a positive user experience, directly impacting search rankings.

Accessibility and Inclusivity

Websites that prioritize accessibility are highly valued by Google. Sites designed with features like clear text, proper use of alt attributes for images, and adherence to web standards are more likely to be considered for higher rankings. Google’s scanning mechanisms may take into account how well a site follows accessibility guidelines, which helps ensure that users with diverse needs can access and interact with the site’s content effectively.

Websites that fail to meet accessibility standards may face lower rankings, as Google prioritizes providing a user experience that is inclusive and accommodating for all users.
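Missing image descriptions are one accessibility problem a site owner can check automatically. The sketch below uses Python’s `html.parser` to list `<img>` elements that have no `alt` attribute at all. An empty `alt=""` is left alone because it is the accepted way to mark decorative images. This is an illustrative audit with a hypothetical class name, not a reproduction of how Google evaluates accessibility.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Record the src of every <img> that lacks an alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        # alt="" is valid for decorative images, so only a missing attribute is flagged
        if "alt" not in attrs:
            self.missing.append(attrs.get("src") or "(no src)")


checker = MissingAltChecker()
checker.feed('<img src="logo.png" alt="Company logo">'
             '<img src="chart.png">'
             '<img src="divider.gif" alt="">')
print(checker.missing)  # ['chart.png']
```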

Adaptation to User Behavior Patterns

Google’s scanning mechanisms are not static; they adapt to evolving user behavior patterns. As user preferences and search habits change, Google’s algorithms adjust to reflect these shifts. For instance, a sudden increase in searches for a specific topic may indicate emerging trends or user interest. Google’s scanning mechanisms can adapt to these real-time changes, providing users with the most up-to-date and relevant search results.

This dynamic nature of Google’s approach ensures that search results remain aligned with current user needs and interests.
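You can detect a sudden increase in searches by comparing today’s count with the recent baseline. The z-score check below is a common, generic technique, and the function name is illustrative. The threshold, the seven-day window, and the counts are hypothetical. This is not a description of how Google detects trends.

```python
from statistics import mean, pstdev


def is_spike(history, today, threshold=3.0):
    """True if today's count is more than `threshold` standard deviations above the baseline."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # flat history: treat any increase as unusual
        return today > baseline
    return (today - baseline) / spread > threshold


daily_searches = [100, 105, 98, 102, 95, 101, 99]  # hypothetical counts for one topic
print(is_spike(daily_searches, 180))  # True
print(is_spike(daily_searches, 104))  # False
```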

UX Metrics Tracked During Scanning

The following table summarizes some key UX metrics associated with Google’s scanning and ranking process:

| UX Metric | Description | Impact on Ranking |
|---|---|---|
| Page Load Time | The time it takes for a webpage to fully load. | Faster load times generally correlate with higher rankings, as they improve user experience. Slow loading pages lead to higher bounce rates, impacting rankings negatively. |
| Click-Through Rate (CTR) | The percentage of users who click on a search result. | High CTR suggests the search result is relevant and attractive to users, potentially boosting rankings. |
| Bounce Rate | The percentage of users who leave a website after viewing only one page. | High bounce rates often indicate that the website is not meeting user expectations or is difficult to navigate. This negatively impacts ranking. |
| Time on Page | The average time users spend on a specific webpage. | Longer time on page indicates that the content is engaging and relevant to the user’s search query, potentially boosting ranking. |
| Mobile Friendliness | How well a website adapts to different screen sizes and mobile devices. | Mobile-friendly websites are crucial for a positive user experience. Google prioritizes mobile-friendly websites in its ranking algorithm. |
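Click-through rate, bounce rate, and time on page, as defined in the table, are simple ratios and averages. The sketch below computes them from a site’s own analytics data. The `Session` record is a hypothetical log format and the function names are illustrative. Nothing here implies how, or whether, Google uses these particular numbers.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One visit to the site (hypothetical analytics record)."""
    pages_viewed: int
    seconds_on_landing_page: float


def click_through_rate(clicks, impressions):
    """Share of search impressions that produced a click."""
    return clicks / impressions if impressions else 0.0


def bounce_rate(sessions):
    """Share of sessions that viewed only one page."""
    if not sessions:
        return 0.0
    return sum(1 for s in sessions if s.pages_viewed == 1) / len(sessions)


def average_time_on_page(sessions):
    """Mean seconds spent on the landing page."""
    if not sessions:
        return 0.0
    return sum(s.seconds_on_landing_page for s in sessions) / len(sessions)


sessions = [Session(1, 10.0), Session(3, 60.0), Session(1, 5.0), Session(2, 45.0)]
print(click_through_rate(25, 1000))    # 0.025
print(bounce_rate(sessions))           # 0.5
print(average_time_on_page(sessions))  # 30.0
```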

Security and Privacy

Google’s search engine is a massive undertaking, processing billions of queries every day and continuously crawling an enormous number of pages. Protecting the integrity of this process and safeguarding user data is paramount. This section delves into the security measures Google employs to protect its scanning processes from malicious activity and the privacy policies surrounding its scanning procedures. Google prioritizes robust security protocols to maintain the integrity of its search index and prevent manipulation.

This commitment extends to safeguarding user data during the entire scanning process, ensuring a safe and reliable search experience for everyone.

Security Measures for Scanning Processes

Google implements a multi-layered security approach to protect its scanning infrastructure from malicious attacks. This includes robust firewalls, intrusion detection systems, and continuous monitoring to identify and mitigate threats in real-time. Advanced algorithms are employed to distinguish legitimate user requests from potentially harmful activity, such as attempts to inject malicious code or manipulate search results.
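Rate limiting is one widely used building block for separating normal traffic from abusive bursts. The token-bucket sketch below allows each client a short burst and then a steady rate. The rates, the `client_id` key, and the class names are hypothetical, and this is not a description of Google’s defenses. Timestamps are passed in explicitly so the example is deterministic.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, now=0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.last = now

    def allow(self, now):
        # refill according to the time elapsed since the previous request
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RateLimiter:
    """One bucket per client, created the first time the client is seen."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.buckets = {}

    def allow(self, client_id, now):
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.rate, self.capacity, now)
        return self.buckets[client_id].allow(now)


limiter = RateLimiter(rate=1.0, capacity=3)
print([limiter.allow("client-a", 0.0) for _ in range(4)])  # [True, True, True, False]
print(limiter.allow("client-a", 1.0))                      # True (one token refilled)
print(limiter.allow("client-b", 1.0))                      # True (separate bucket)
```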

Privacy Policies Associated with Scanning Procedures

Google’s privacy policies are comprehensive and clearly outline how user data is handled during the scanning process. These policies are designed to respect user privacy and ensure data is used only for the purpose of improving search results and user experience. Transparency is a key aspect of these policies, informing users about the types of data collected, how it is used, and their rights regarding data access and control.

Data Security and User Privacy During Scanning

Google employs encryption protocols throughout the scanning process to protect sensitive information. Data is stored in secure, controlled environments, and access is limited to authorized personnel. Regular security audits and penetration testing are conducted to identify and address vulnerabilities.

Handling User Data Collected During Scanning

Google handles user data collected during the scanning process according to its comprehensive privacy policies. This includes anonymization of user-specific data whenever possible to protect individual identities. Data is aggregated and analyzed to improve search algorithms and the overall user experience. Under its privacy policy, Google shares personal information outside the company only in limited circumstances, such as with the user’s consent, and data retention policies are strictly adhered to.

User data is subject to strict data minimization principles, collecting only the necessary information for indexing and search relevance.
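Two small steps can make anonymization and data minimization concrete. First, keep only an allowlist of fields. Second, truncate IP addresses so they identify a network rather than an individual address. The field names, the helper names, and the prefix lengths (/24 for IPv4, /48 for IPv6) are assumptions chosen for illustration. This is a generic technique, not Google’s documented procedure.

```python
import ipaddress

ALLOWED_FIELDS = {"query", "timestamp", "ip"}  # hypothetical minimal set


def truncate_ip(ip):
    """Zero the host part: keep the first 24 bits of IPv4 or 48 bits of IPv6."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    return str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False).network_address)


def minimize(record):
    """Drop fields outside the allowlist and anonymize the IP address."""
    kept = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    if "ip" in kept:
        kept["ip"] = truncate_ip(kept["ip"])
    return kept


raw = {"query": "weather", "timestamp": 1700000000,
       "ip": "203.0.113.57", "email": "user@example.com"}
print(minimize(raw))  # {'query': 'weather', 'timestamp': 1700000000, 'ip': '203.0.113.0'}
```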

Outcome Summary

In conclusion, the way Google scans is a testament to the power of intricate algorithms and vast technical infrastructure. From the crawling process to the final ranking, Google employs a multi-faceted approach that considers various factors. Understanding this process allows us to appreciate the complexity and efficiency of search engines and the importance of user experience in the digital age.

Ultimately, this process helps us navigate the vast ocean of information online.